D1 read replication makes read-only copies of your database available in multiple regions across Cloudflare's network. For busy, read-heavy applications like e-commerce websites, content management tools, and mobile apps:

D1 read replication lowers average latency by routing user requests to read replicas in nearby regions.

D1 read replication increases overall throughput by offloading read queries to read replicas, allowing the primary database to handle more write queries.

The main copy of your database is called the primary database and the read-only copies are called read replicas. When you enable replication for a D1 database, the D1 service automatically creates and maintains read replicas of your primary database. As your users make requests, D1 routes those requests to an appropriate copy of the database (either the primary or a replica) based on performance heuristics, the type of queries made in those requests, and the query consistency needs as expressed by your application.

All of this global replica creation and request routing is handled by Cloudflare at no additional cost.

To take advantage of read replication, your Worker needs to use the new D1 Sessions API.

D1's read replication feature is built around the concept of database sessions. A session encapsulates all the queries representing one logical session for your application. For example, a session might represent all requests coming from a particular web browser or all requests coming from a mobile app used by one of your users. If you use sessions, your queries will use the appropriate copy of the D1 database that makes the most sense for your request, be that the primary database or a nearby replica.

The sessions implementation ensures sequential consistency for all queries in the session, no matter what copy of the database each query is routed to. The sequential consistency model has important properties like "read my own writes" and "writes follow reads," as well as a total ordering of writes. The total ordering of writes means that every replica will see transactions committed in the same order, which is exactly the behavior we want in a transactional system. Said another way, sequential consistency guarantees that the reads and writes are executed in the order in which you write them in your code.

Some examples of consistency implications in real-world applications:

You are using an online store and just placed an order (write query), followed by a visit to the account page to list all your orders (read query handled by a replica). You want the newly placed order to be listed there as well.

You are using your bank's web application and make a transfer to your electricity provider (write query), and then immediately navigate to the account balance page (read query handled by a replica) to check the latest balance of your account, including that last payment.

Why do we need the Sessions API? Why can we not just query replicas directly?

Applications using D1 read replication need the Sessions API because D1 runs on Cloudflare's global network and there's no way to ensure that requests from the same client get routed to the same replica for every request. For example, the client may switch from WiFi to a mobile network in a way that changes how their requests are routed to Cloudflare. Or the data center that handled previous requests could be down because of an outage or maintenance.

D1's read replication is asynchronous, so it's possible that when you switch between replicas, the replica you switch to lags behind the replica you were using. This could mean that, for example, the new replica hasn't learned of the writes you just completed. We could no longer guarantee useful properties like "read your own writes." In fact, in the presence of shifty routing, the only consistency property we could guarantee is that what you read had been committed at some point in the past (read committed consistency), which isn't very useful at all!

Since we can't guarantee routing to the same replica, we flip the script and use the information we get from the Sessions API to make sure whatever replica we land on can handle the request in a sequentially consistent manner.

Here's what the Sessions API looks like in a Worker:

```typescript
// Assumed types for this example: Env exposes the D1 binding as `DB`, and
// Order is a hypothetical shape for a row in the Orders table.
interface Env {
  DB: D1Database
}

interface Order {
  orderId: string
  customerId: string
  quantity: number
}

export default {
  async fetch(request: Request, env: Env) {
    // A. Create the session.
    // When we create a D1 session, we can continue where we left off from a previous
    // session if we have that session's last bookmark or use a constraint.
    const bookmark = request.headers.get('x-d1-bookmark') ?? 'first-unconstrained'
    const session = env.DB.withSession(bookmark)

    // Use this session for all our Workers' routes.
    const response = await handleRequest(request, session)

    // B. Return the bookmark so we can continue the session in another request.
    // getBookmark() can return null, for example if no query has run in this session.
    const latestBookmark = session.getBookmark()
    if (latestBookmark) {
      response.headers.set('x-d1-bookmark', latestBookmark)
    }

    return response
  }
}

async function handleRequest(request: Request, session: D1DatabaseSession) {
  const { pathname } = new URL(request.url)

  if (request.method === 'GET' && pathname === '/api/orders') {
    // C. Session read query.
    const { results } = await session.prepare('SELECT * FROM Orders').all()
    return Response.json(results)
  } else if (request.method === 'POST' && pathname === '/api/orders') {
    const order = await request.json<Order>()

    // D. Session write query.
    // Since this is a write query, D1 will transparently forward it to the primary.
    await session
      .prepare('INSERT INTO Orders VALUES (?, ?, ?)')
      .bind(order.orderId, order.customerId, order.quantity)
      .run()

    // E. Session read-after-write query.
    // In order for the application to be correct, this SELECT statement must see
    // the results of the INSERT statement above.
    const { results } = await session
      .prepare('SELECT * FROM Orders')
      .all()

    return Response.json(results)
  }

  return new Response('Not found', { status: 404 })
}
```

To use the Sessions API, you first need to create a session using the withSession method (step A). The withSession method takes either a bookmark or a constraint as a parameter. The provided constraint instructs D1 where to forward the first query of the session. Using first-unconstrained allows the first query to be processed by any replica without any restriction on how up-to-date it is. Using first-primary ensures that the first query of the session will be forwarded to the primary.

```typescript
// A. Create the session.
const bookmark = request.headers.get('x-d1-bookmark') ?? 'first-unconstrained'
const session = env.DB.withSession(bookmark)
```

Providing an explicit bookmark instructs D1 that whichever database instance processes the query has to be at least as up-to-date as the provided bookmark (in the case of a replica; the primary database is always up-to-date by definition). Explicit bookmarks are how we can continue from previously created sessions and maintain sequential consistency across user requests.

Once you've created the session, make queries like you normally would with D1. The session object ensures that the queries you make are sequentially consistent with regard to each other.

```typescript
// C. Session read query.
const { results } = await session.prepare('SELECT * FROM Orders').all()
```

For example, in the code example above, the session read query for listing the orders (step C) will return results that are at least as up-to-date as the bookmark used to create the session (step A).

More interesting is the write query to add a new order (step D) followed by the read query to list all orders (step E). Because both queries are executed on the same session, it is guaranteed that the read query will observe a database copy that includes the write query, thus maintaining sequential consistency.

```typescript
// D. Session write query.
await session
  .prepare('INSERT INTO Orders VALUES (?, ?, ?)')
  .bind(order.orderId, order.customerId, order.quantity)
  .run()

// E. Session read-after-write query.
const { results } = await session
  .prepare('SELECT * FROM Orders')
  .all()
```

Note that we could make a single batch query to the primary including both the write and the list, but the benefit of using the new Sessions API is that you can use the extra read replica databases for your read queries and allow the primary database to handle more write queries.

The session object does the necessary bookkeeping to maintain the latest bookmark observed across all queries executed using that specific session, and always includes that latest bookmark in requests to D1. Note that any query executed without using the session object is not guaranteed to be sequentially consistent with the queries executed in the session.

When possible, we suggest continuing sessions across requests by including bookmarks in your responses to clients (step B), and having clients pass previously received bookmarks in their future requests.

```typescript
// B. Return the bookmark so we can continue the session in another request.
const latestBookmark = session.getBookmark()
if (latestBookmark) {
  response.headers.set('x-d1-bookmark', latestBookmark)
}
```

This allows all of a client's requests to be in the same session. You can do this by grabbing the session's current bookmark at the end of the request (session.getBookmark()) and sending the bookmark in the response back to the client in HTTP headers, in HTTP cookies, or in the response body itself.
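On the client side, continuing the session amounts to remembering the last bookmark and sending it back on the next request. The sketch below assumes the `x-d1-bookmark` header used in the Worker example; `BookmarkedClient`, `FetchLike`, and `ResponseLike` are hypothetical names, and the fetch function is injected so the logic can be exercised without a network.

```typescript
// Hypothetical shapes: only the parts of fetch/Response this sketch needs.
interface ResponseLike {
  headers: { get(name: string): string | null }
  json(): Promise<unknown>
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body?: string },
) => Promise<ResponseLike>

// Remembers the most recent bookmark returned by the Worker and sends it back
// on every subsequent request, so all of this client's requests share one session.
class BookmarkedClient {
  private bookmark: string | null = null
  private fetchFn: FetchLike

  constructor(fetchFn: FetchLike) {
    this.fetchFn = fetchFn
  }

  get currentBookmark(): string | null {
    return this.bookmark
  }

  async request(url: string, method = 'GET', body?: unknown): Promise<unknown> {
    const headers: Record<string, string> = {}
    if (this.bookmark) headers['x-d1-bookmark'] = this.bookmark
    const init = { method, headers, body: body === undefined ? undefined : JSON.stringify(body) }
    const resp = await this.fetchFn(url, init)
    // Keep the bookmark from this response for the next request.
    const next = resp.headers.get('x-d1-bookmark')
    if (next) this.bookmark = next
    return resp.json()
  }
}
```

In a browser you could construct it with `new BookmarkedClient((url, init) => fetch(url, init))`; storing the bookmark in a cookie or `localStorage` instead of memory would let the session survive page reloads.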

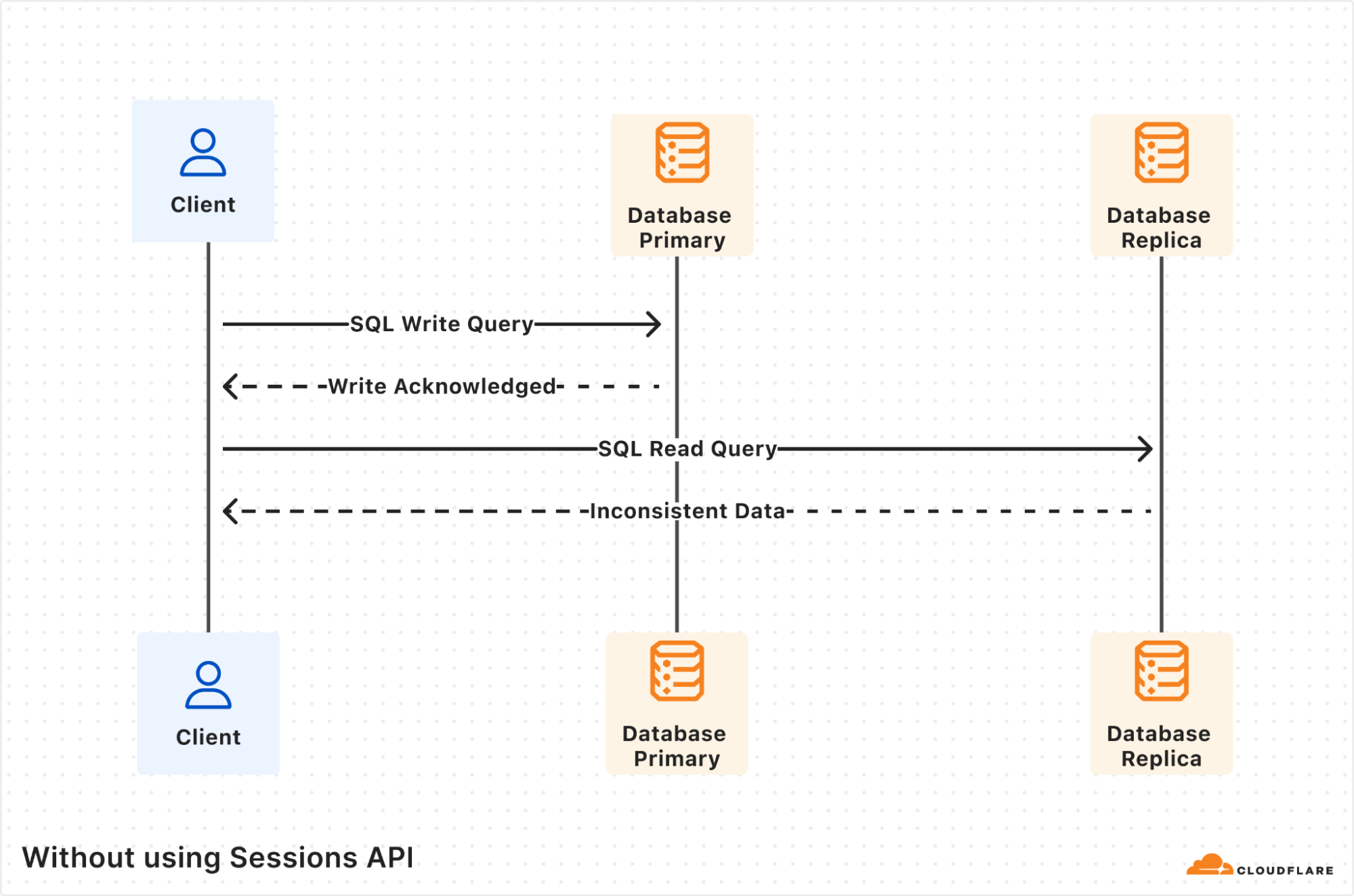

In this section, we will explore the classic scenario of a read-after-write query to showcase how using the new D1 Sessions API ensures that we get sequential consistency and avoid any issues with inconsistent results in our application.

The Client, a user Worker, sends a D1 write query that gets processed by the database primary and gets the results back. However, the subsequent read query ends up being processed by a database replica. If the database replica is lagging far enough behind the database primary, such that it does not yet include the first write query, then the returned results will be inconsistent, and probably incorrect for your application business logic.

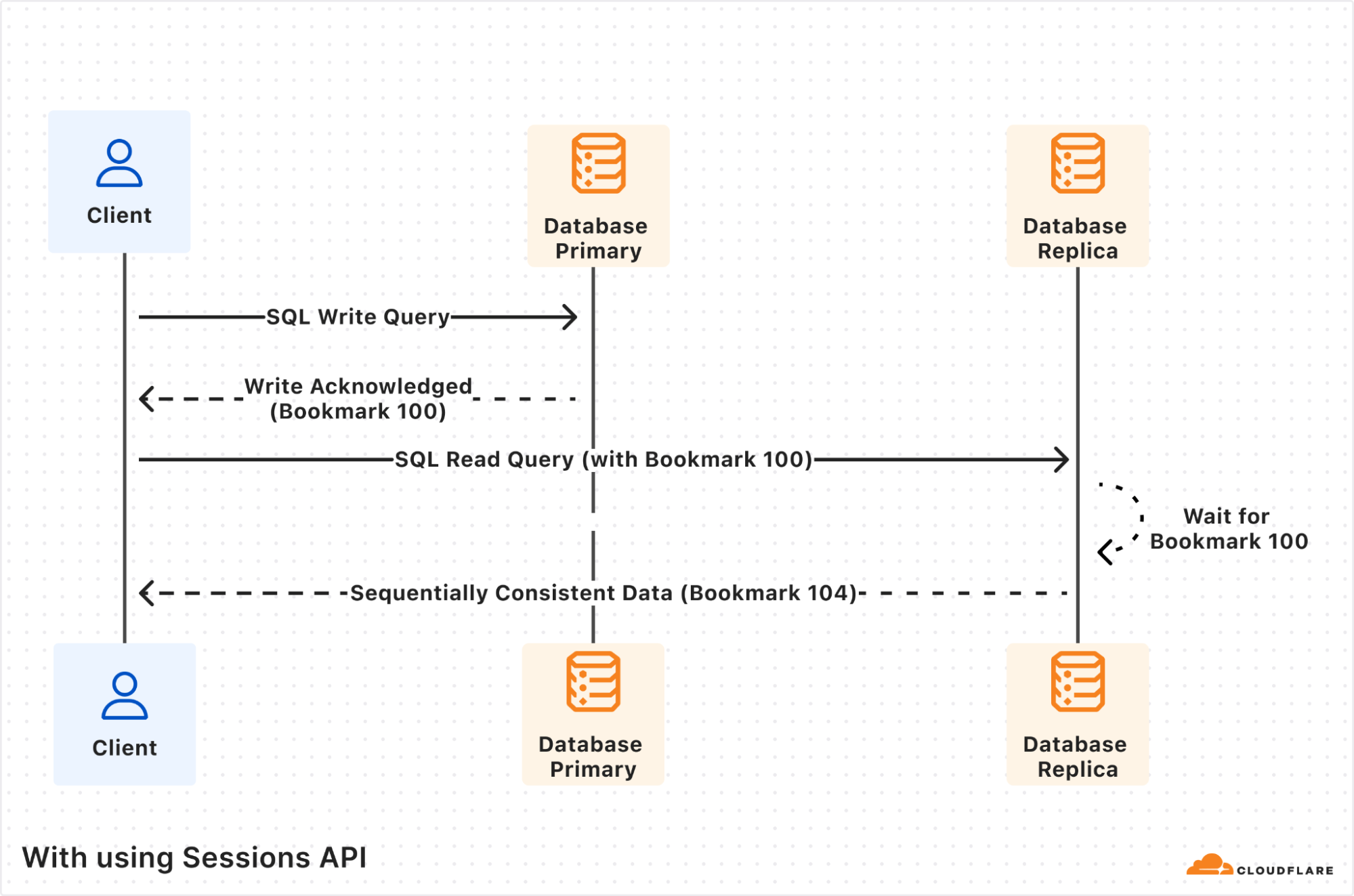

Using the Sessions API fixes the inconsistency issue. The first write query is again processed by the database primary, and this time the response includes "Bookmark 100". The session object will store this bookmark for you transparently.

The subsequent read query is processed by a database replica as before, but now, since the query includes the previously received "Bookmark 100", the database replica will wait until its database copy is at least as up-to-date as "Bookmark 100". Only once it's up-to-date will the read query be processed and the results returned, including the replica's latest bookmark, "Bookmark 104".

Notice that the returned bookmark for the read query is "Bookmark 104", which is different from the one passed in the query request. This can happen if there were other writes from other client requests that also got replicated to the database replica in between the two queries our own client executed.
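The replica-side wait in the diagrams can be modeled as a small promise-based waiter. This is a hypothetical sketch, not D1's implementation: bookmarks are treated as plain increasing integers (mirroring the "Bookmark 100" and "Bookmark 104" labels), and `advanceTo` stands in for replication applying newer WAL entries.

```typescript
// Hypothetical model: bookmarks are increasing integers.
class ReplicaCatchUp {
  private applied: number
  private waiters: { target: number; resolve: () => void }[] = []

  constructor(initialBookmark: number) {
    this.applied = initialBookmark
  }

  // The latest bookmark this replica has applied; returned with query results.
  get current(): number {
    return this.applied
  }

  // Called as replication applies newer entries; wakes every satisfied waiter.
  advanceTo(bookmark: number): void {
    if (bookmark <= this.applied) return
    this.applied = bookmark
    const ready = this.waiters.filter(w => w.target <= bookmark)
    this.waiters = this.waiters.filter(w => w.target > bookmark)
    for (const w of ready) w.resolve()
  }

  // Resolves once this copy is at least as up-to-date as `bookmark`.
  waitForBookmark(bookmark: number): Promise<void> {
    if (bookmark <= this.applied) return Promise.resolve()
    return new Promise<void>(resolve => {
      this.waiters.push({ target: bookmark, resolve: () => resolve() })
    })
  }
}
```

A read carrying Bookmark 100 that arrives while the replica is at Bookmark 98 stays pending until replication moves the replica to 100 or beyond; the query would then run and report the replica's current bookmark (104 in the diagram), not the one it waited for.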

To start using D1 read replication:

Update your Worker to use the D1 Sessions API to tell D1 what queries are part of the same database session. The Sessions API works with databases that do not have read replication enabled as well, so it's safe to ship this code even before you enable replicas.

Enable replicas for your database via Cloudflare dashboard > Select D1 database > Settings.

D1 read replication is built into D1, and you don't pay extra storage or compute costs for replicas. You incur the exact same D1 usage with or without replicas, based on rows_read and rows_written by your queries. Unlike other traditional database systems with replication, you don't have to manually create replicas, including where they run, or decide how to route requests between the primary database and read replicas. Cloudflare handles this when using the Sessions API while ensuring sequential consistency.

Since D1 read replication is in beta, we recommend trying D1 read replication on a non-production database first, and migrating your production workloads after validating that read replication works for your use case.

If you don't have a D1 database and want to try out D1 read replication, create a test database in the Cloudflare dashboard.

Once you've enabled D1 read replication, read queries will start to be processed by replica database instances. The response of each query includes information relevant to read replication in the nested meta object, like served_by_region and served_by_primary. The former denotes the region of the database instance that processed the query, and the latter will be true if and only if your query was processed by the primary database instance.
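For example, you could aggregate these two fields to see how your traffic splits between the primary and replicas. The sketch below is hypothetical: it declares its own `ReplicationMeta` type containing only the two fields named above, rather than relying on a particular version of the Workers type definitions, and `summarizeServing` is an illustrative name.

```typescript
// Assumed shape: only the replication-related meta fields named in the text.
interface ReplicationMeta {
  served_by_region?: string
  served_by_primary?: boolean
}

interface ServingSummary {
  byRegion: Record<string, number>
  primary: number
  replica: number
}

// Counts queries per serving region and per kind of instance (primary or replica).
function summarizeServing(metas: ReplicationMeta[]): ServingSummary {
  const summary: ServingSummary = { byRegion: {}, primary: 0, replica: 0 }
  for (const meta of metas) {
    const region = meta.served_by_region ?? 'unknown'
    summary.byRegion[region] = (summary.byRegion[region] ?? 0) + 1
    if (meta.served_by_primary === true) summary.primary++
    else if (meta.served_by_primary === false) summary.replica++
  }
  return summary
}
```

In a Worker, you would collect `result.meta` from each `session.prepare(...).all()` call and pass the list to `summarizeServing`.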

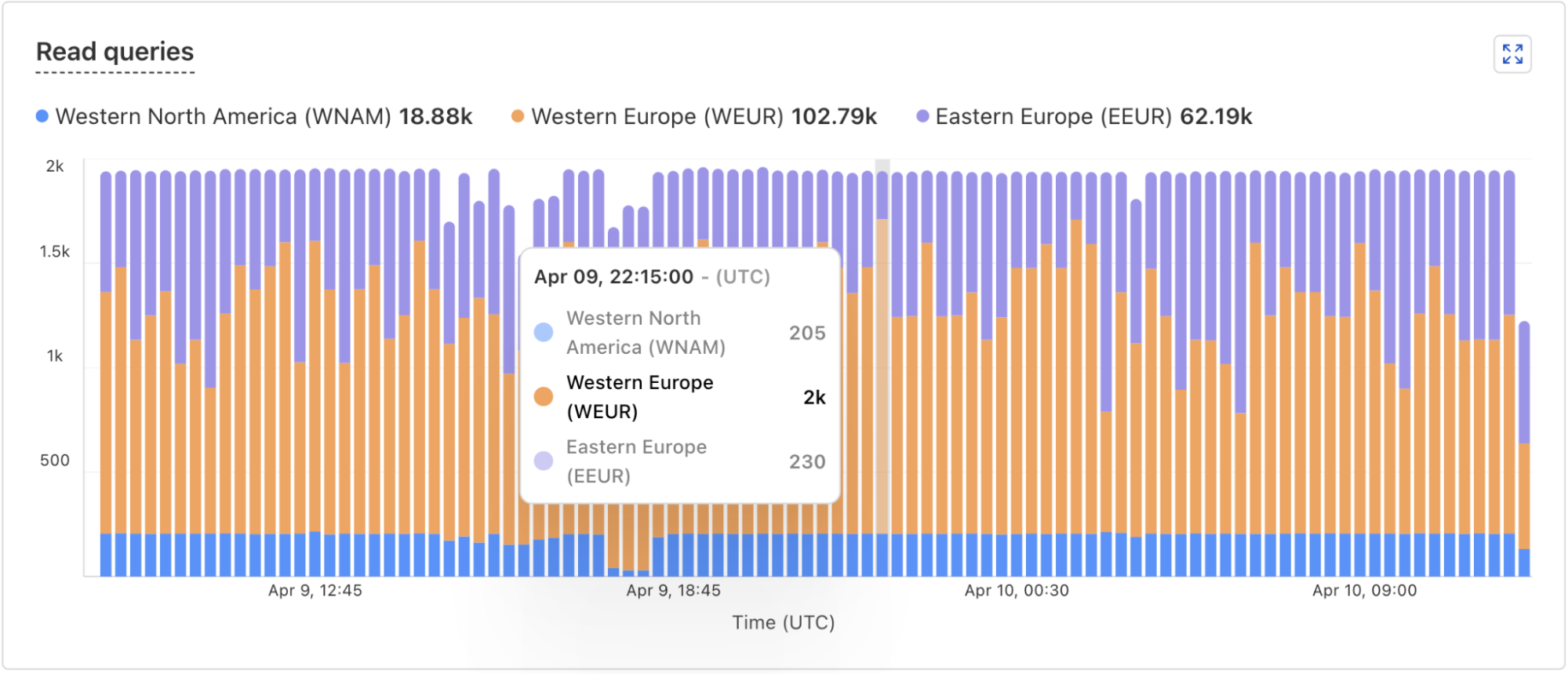

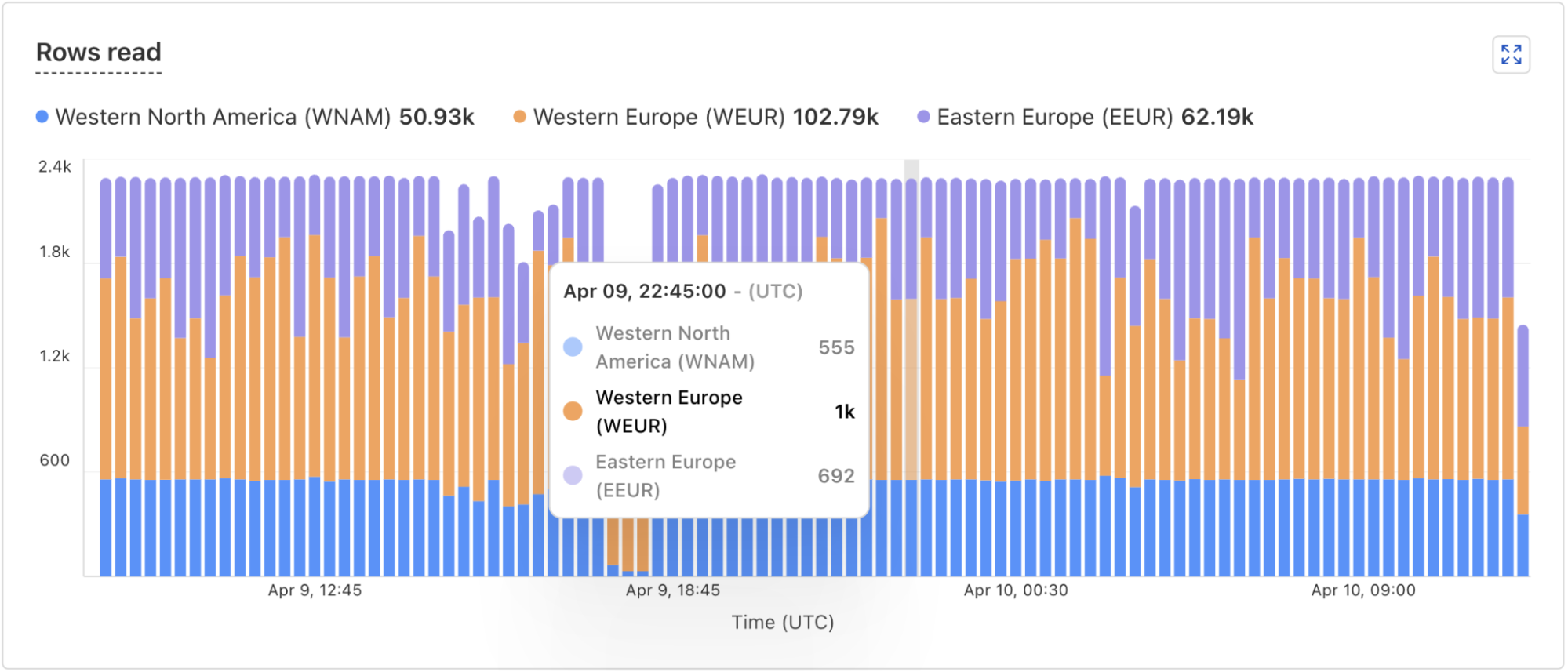

In addition, the D1 dashboard overview for a database now includes information about the database instances handling your queries. You can see how many queries are handled by the primary instance or by a replica, and a breakdown of the queries processed by region. The example screenshots below show graphs displaying the number of queries executed and number of rows read by each region.

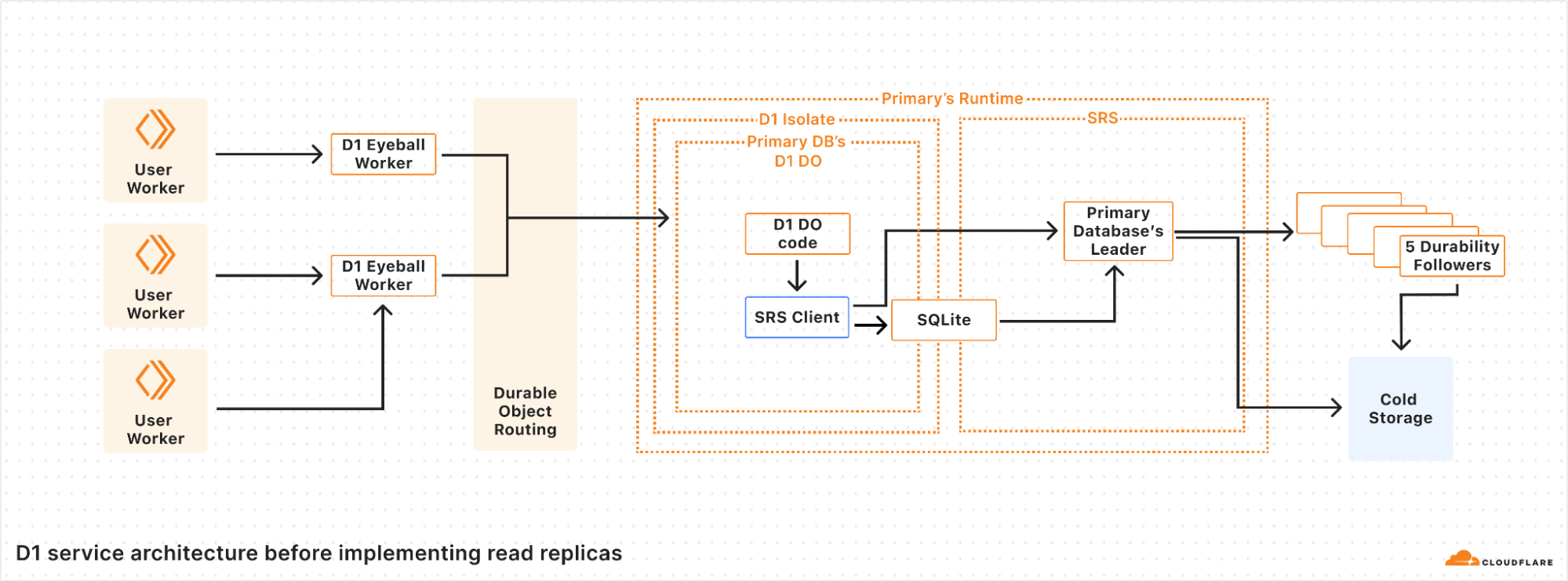

D1 is implemented on top of SQLite-backed Durable Objects running on top of Cloudflare's Storage Relay Service.

D1 is structured with a 3-layer architecture. First is the binding API layer that runs in the customer's Worker. Next is a stateless Worker layer that routes requests based on database ID to a layer of Durable Objects that handle the actual SQL operations behind D1. This is similar to how most applications using Cloudflare Workers and Durable Objects are structured.

For a non-replicated database, there is exactly one Durable Object per database. When a user's Worker makes a request with the D1 binding for the database, that request is first routed to a D1 Worker running in the same location as the user's Worker. The D1 Worker figures out which D1 Durable Object backs the user's D1 database and fetches an RPC stub to that Durable Object. The Durable Objects routing layer figures out where the Durable Object is located and opens an RPC connection to it. Finally, the D1 Durable Object handles the query on behalf of the user's Worker using the Durable Objects SQL API.

In the Durable Objects SQL API, all queries go to a SQLite database on the local disk of the server where the Durable Object is running. Durable Objects run SQLite in WAL mode. In WAL mode, every write query appends to a write-ahead log (the WAL). As SQLite appends entries to the end of the WAL file, a database-specific component called the Storage Relay Service leader synchronously replicates the entries to 5 durability followers on servers in different datacenters. When a quorum (at least 3 out of 5) of the durability followers acknowledge that they have safely stored the data, the leader allows SQLite's write queries to commit and opens the Durable Object's output gate, so that the Durable Object can respond to requests.
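The commit rule described above (commit once at least 3 of the 5 durability followers acknowledge) can be illustrated in isolation. This is a hypothetical sketch, not Storage Relay Service code: each follower's acknowledgement is modeled as a promise, and the illustrative `waitForQuorum` resolves as soon as enough of them fulfill, or rejects once a quorum can no longer be reached.

```typescript
// Resolves once `quorum` of the acknowledgement promises have fulfilled;
// rejects as soon as so many have failed that a quorum is impossible.
function waitForQuorum(acks: Promise<unknown>[], quorum: number): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    if (quorum <= 0) {
      resolve()
      return
    }
    if (acks.length < quorum) {
      reject(new Error('not enough followers for a quorum'))
      return
    }
    let ok = 0
    let failed = 0
    for (const ack of acks) {
      ack.then(
        () => {
          ok++
          if (ok === quorum) resolve()
        },
        () => {
          failed++
          if (acks.length - failed < quorum) reject(new Error('quorum unreachable'))
        },
      )
    }
  })
}
```

With 5 followers and a quorum of 3, the leader can proceed while the two slowest followers are still catching up, which is what keeps write latency bounded by the third-fastest acknowledgement rather than the slowest.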

Our implementation of WAL mode allows us to have a complete log of all of the committed changes to the database. This enables a couple of important features in SQLite-backed Durable Objects and D1:

We identify each write with a Lamport timestamp we call a bookmark.

We construct databases anywhere in the world by downloading all of the WAL entries from cold storage and replaying each WAL entry in order.

We implement Point-in-time recovery (PITR) by replaying WAL entries up to a specific bookmark rather than to the end of the log.

Unfortunately, having the main data structure of the database be a log is not ideal. WAL entries are in write order, which is often neither convenient nor fast. In order to cut down on the overheads of the log, SQLite checkpoints the log by copying the WAL entries back into the main database file. Read queries are serviced directly by SQLite using files on disk: either the main database file for checkpointed writes, or the WAL file for writes more recent than the last checkpoint. Similarly, the Storage Relay Service snapshots the database to cold storage so that we can reconstruct a database by downloading the most recent snapshot and replaying the WAL from there, rather than having to download an enormous number of individual WAL entries.
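To make these log-based features concrete, here is a toy model of replaying a WAL, stopping early for point-in-time recovery, and restoring from a snapshot plus the WAL tail. It is a hypothetical sketch: the database is a key/value map, each WAL entry is a single write, and bookmarks are increasing integers, none of which reflects SQLite's actual WAL format.

```typescript
// Hypothetical model: each WAL entry is one key/value write tagged with an
// increasing numeric bookmark, stored in log order.
interface WalEntry {
  bookmark: number
  key: string
  value: string
}

interface Snapshot {
  bookmark: number // last WAL entry already folded into `state`
  state: Map<string, string>
}

// Replay entries in log order on top of `base`, stopping after `upTo`.
// upTo = Infinity rebuilds the latest state; a smaller value gives PITR.
function replay(wal: WalEntry[], upTo: number, base: Map<string, string> = new Map()): Map<string, string> {
  const state = new Map(base)
  for (const entry of wal) {
    if (entry.bookmark > upTo) break
    state.set(entry.key, entry.value)
  }
  return state
}

// Restore from a snapshot, replaying only the WAL tail written after it.
function restore(snapshot: Snapshot, wal: WalEntry[], upTo: number = Infinity): Map<string, string> {
  if (upTo < snapshot.bookmark) throw new Error('target bookmark predates the snapshot')
  const tail = wal.filter(e => e.bookmark > snapshot.bookmark)
  return replay(tail, upTo, snapshot.state)
}
```

The snapshot path produces the same state as a full replay but only touches entries newer than the snapshot, which is the saving described above.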

WAL mode is the foundation for implementing read replication, since we can stream writes to locations other than cold storage in real time.

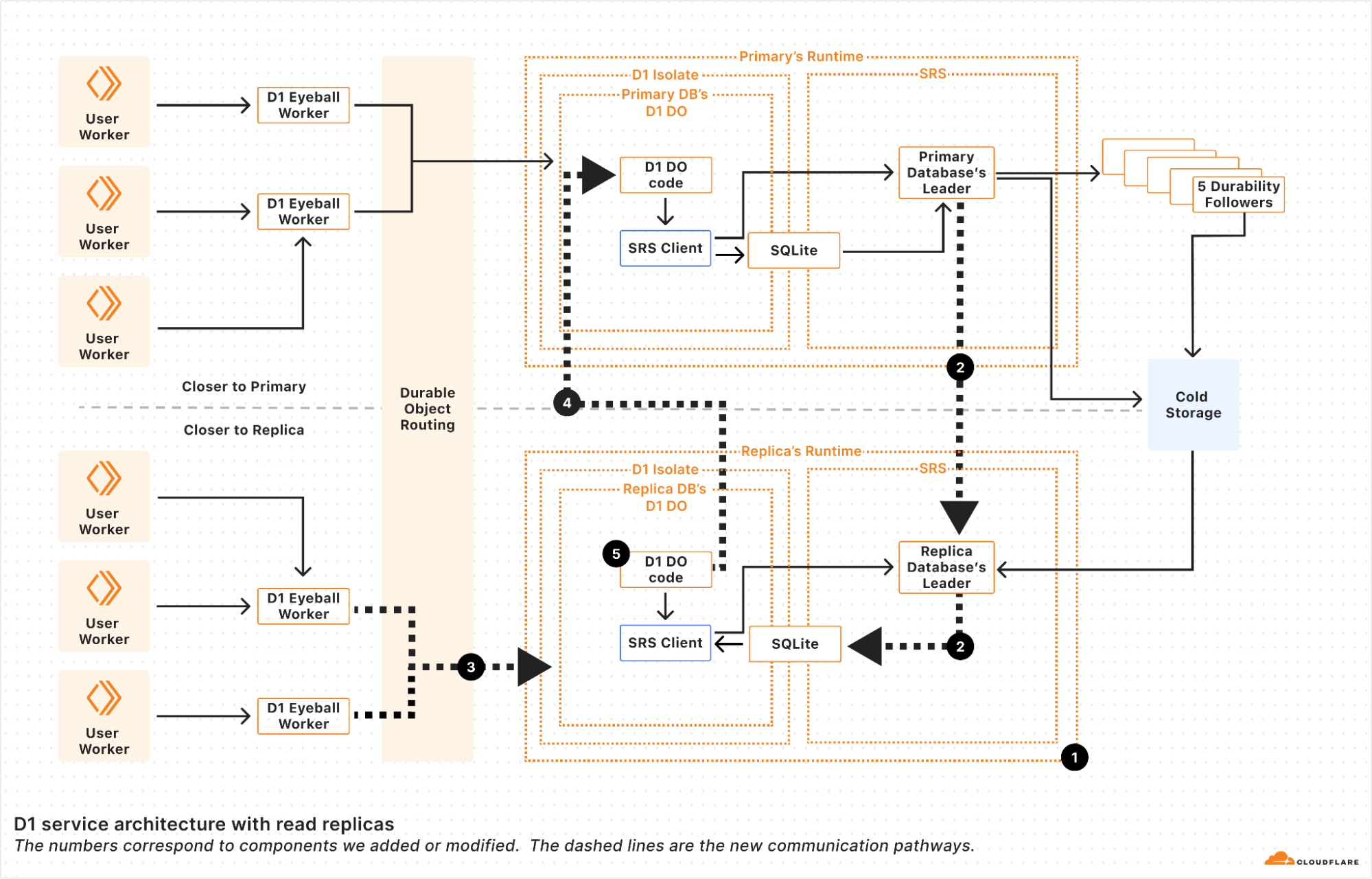

We implemented read replication in 5 major steps.

First, we made it possible to create replica Durable Objects with a read-only copy of the database. These replica objects boot by fetching the latest snapshot and replaying the log from cold storage up to whatever bookmark the primary database's leader last committed. This basically gave us point-in-time replicas: without continuous updates, the replicas never updated until the Durable Object restarted.

Second, we registered the replica leader with the primary's leader so that the primary leader sends the replicas every entry written to the WAL at the same time that it sends the WAL entries to the durability followers. Each of the WAL entries is marked with a bookmark that uniquely identifies the WAL entry in the sequence of WAL entries. We'll use the bookmark later.

Note that since these writes are sent to the replicas before a quorum of durability followers have confirmed them, the writes are actually unconfirmed writes, and the replica leader must be careful to keep the writes hidden from the replica Durable Object until they are confirmed. The replica leader in the Storage Relay Service does this by implementing enough of SQLite's WAL-index protocol, so that the unconfirmed writes coming from the primary leader look to SQLite as though it's just another SQLite client doing unconfirmed writes. SQLite knows to ignore the writes until they are confirmed in the log. The upshot of this is that the replica leader can write WAL entries to the SQLite WAL immediately, and then "commit" them when the primary leader tells the replica that the entries have been confirmed by durability followers.
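The visibility rule for unconfirmed writes can be sketched with a toy replica log. This is a hypothetical model, not the WAL-index protocol itself: entries are appended as soon as the primary streams them (assumed to arrive in bookmark order), but reads only consider entries up to the highest bookmark the primary has reported as confirmed.

```typescript
// Hypothetical replica-side log: entries become visible only once confirmed.
interface WalEntry {
  bookmark: number
  key: string
  value: string
}

class ReplicaWal {
  private entries: WalEntry[] = []
  private confirmed = 0 // highest bookmark readers may see

  // The primary streams each entry as soon as it is written (still unconfirmed).
  appendUnconfirmed(entry: WalEntry): void {
    this.entries.push(entry)
  }

  // The primary reports that durability followers confirmed up to `bookmark`.
  confirmUpTo(bookmark: number): void {
    this.confirmed = Math.max(this.confirmed, bookmark)
  }

  // Readers see the latest confirmed value; unconfirmed tail entries are skipped.
  read(key: string): string | undefined {
    let value: string | undefined
    for (const e of this.entries) {
      if (e.bookmark > this.confirmed) break
      if (e.key === key) value = e.value
    }
    return value
  }
}
```

Because the entry is already on the replica when the confirmation arrives, making it visible is just moving the confirmed marker, which is why this design keeps replica lag low.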

One neat thing about this approach is that writes are sent from the primary to the replica as quickly as they are generated by the primary, helping to minimize lag between replicas. In theory, if the write query was proxied through a replica to the primary, the response back to the replica will arrive at almost the same time as the message that updates the replica. In such a case, it looks like there's no replica lag at all!

In practice, we find that replication is really fast. Internally, we measure confirm lag, defined as the time from when a primary confirms a change to when the replica confirms a change. The table below shows the confirm lag for two D1 databases whose primaries are in different regions.

| Replica region | Database A (Primary region: ENAM) | Database B (Primary region: WNAM) |
|---|---|---|
| ENAM | N/A | 30 ms |
| WNAM | 45 ms | N/A |
| WEUR | 55 ms | 75 ms |
| EEUR | 67 ms | 75 ms |

Confirm lag for 2 replicated databases. N/A means that we have no data for this combination. The region abbreviations are the same ones used for Durable Object location hints.

The table shows that confirm lag is correlated with the network round-trip time between the data centers hosting the primary databases and their replicas. This is clearly visible in the difference between the confirm lag for the European replicas of the two databases. As airline route planners know, EEUR is appreciably further away from ENAM than WEUR is, but from WNAM, both European regions (WEUR and EEUR) are about equally far away. We see that in our replication numbers.

The exact placement of the D1 database in the region matters too. Regions like ENAM and WNAM are quite large in themselves. Database A's placement in ENAM happens to be further away from most data centers in WNAM compared to database B's placement in WNAM relative to the ENAM data centers. As such, database B sees slightly lower confirm lag.

Try as we might, we can't beat the speed of light!

Third, we updated the Durable Object routing system to be aware of Durable Object replicas. When read replication is enabled on a Durable Object, two things happen. First, we create a set of replicas according to a replication policy. The current replication policy that D1 uses is simple: a static set of replicas in every region that D1 supports. Second, we turn on a routing policy for the Durable Object. The current policy that D1 uses is also simple: route to the Durable Object replica in the region close to where the user request is. With this step, we have updateable read-only replicas, and can route requests to them!
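The two policies described above can be written down as a small sketch. The function names, and the fallback to the primary when the caller's region has no copy, are assumptions for illustration; the region codes are the location-hint abbreviations from the confirm-lag table, not necessarily D1's full set of supported regions.

```typescript
// Hypothetical region list: the location-hint codes from the confirm-lag table.
const REGIONS = ['ENAM', 'WNAM', 'WEUR', 'EEUR']

interface ReplicaSet {
  primary: string
  replicas: Set<string>
}

// Replication policy: a static set of replicas, one per supported region.
// In this sketch the primary's own region is served by the primary (assumption).
function createReplicaSet(primary: string, regions: string[]): ReplicaSet {
  return { primary, replicas: new Set(regions.filter(r => r !== primary)) }
}

// Routing policy: go to the copy in the caller's region; fall back to the
// primary if there is no copy there (assumption).
function route(set: ReplicaSet, callerRegion: string): string {
  if (set.replicas.has(callerRegion)) return `replica:${callerRegion}`
  return `primary:${set.primary}`
}
```

Note that routing here happens without looking at the query, which is exactly why the next step has to deal with write queries arriving at replicas.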

Fourth, we updated D1's Durable Object code to handle write queries on replicas. D1 uses SQLite to figure out whether a request is a write query or a read query. This means that the determination of whether something is a read or write query happens after the request is routed. Read replicas will have to handle write requests! We solve this by instantiating each replica D1 Durable Object with a reference to its primary. If the D1 Durable Object determines that the query is a write query, it forwards the request to the primary for the primary to handle. This happens transparently, keeping the user code simple.

As of this fourth step, we can handle read and write queries at every copy of the D1 Durable Object, whether it's a primary or not. Unfortunately, as outlined above, if a user's requests get routed to different read replicas, they may see different views of the database, leading to a very weak consistency model. So the last step is to implement the Sessions API across the D1 Worker and D1 Durable Object. Recall that every WAL entry is marked with a bookmark. These bookmarks uniquely identify a point in (logical) time in the database. Our bookmarks are strictly monotonically increasing; every write to a database makes a new bookmark with a value greater than any other bookmark for that database.

Using bookmarks, we implement the Sessions API with the following algorithm split across the D1 binding implementation, the D1 Worker, and D1 Durable Object.

First up in the D1 binding, we have code that creates the D1DatabaseSession object and code within the D1DatabaseSession object to keep track of the latest bookmark.

```typescript
// D1Binding is the binding code running within the user's Worker
// that provides the existing D1 Workers API and the new withSession method.
class D1Binding {
  // Injected by the runtime to the D1 Binding.
  d1Service: D1ServiceBinding
  databaseId: string

  withSession(initialBookmark: string) {
    return new D1DatabaseSession(this.d1Service, this.databaseId, initialBookmark);
  }
}

// D1DatabaseSession holds metadata about the session, most importantly the
// latest bookmark we know about for this session.
class D1DatabaseSession {
  d1Service: D1ServiceBinding
  databaseId: string
  bookmark: string

  constructor(d1Service: D1ServiceBinding, databaseId: string, initialBookmark: string) {
    this.d1Service = d1Service;
    this.databaseId = databaseId;
    this.bookmark = initialBookmark;
  }

  async exec(query: string) {
    // The exec method in the binding sends the query to the D1 Worker
    // and waits for the response, updating the bookmark as
    // necessary so that future calls to exec use the updated bookmark.
    const resp = await this.d1Service.handleUserQuery(this.databaseId, query, this.bookmark);
    if (isNewerBookmark(this.bookmark, resp.bookmark)) {
      this.bookmark = resp.bookmark;
    }
    return resp;
  }

  // batch and other SQL APIs are implemented similarly.
}
```

The binding code calls into the D1 stateless Worker (d1Service in the snippet above), which figures out which Durable Object to use, and proxies the request to the Durable Object.

```typescript
class D1Worker {
  async handleUserQuery(databaseId, query, bookmark) {
    // Look up the Durable Object that backs databaseId (lookup details omitted).
    const doId = this.lookupDurableObjectId(databaseId);
    return await this.D1_DO.get(doId).handleWorkerQuery(query, bookmark);
  }
}
```

Finally, we reach the Durable Objects layer, which figures out how to actually handle the request.

```typescript
class D1DurableObject {
  // Helpers such as executeQuery, waitForBookmark, and getCurrentBookmark
  // stand in for internals that are not shown here.
  async handleWorkerQuery(query, bookmark) {
    // With no bookmark, default to the primary.
    bookmark = bookmark ?? "first-primary";
    let results;

    if (this.isPrimaryDatabase()) {
      // The primary always has the latest data so we can run the
      // query without checking the bookmark.
      results = await this.executeQuery(query);
      bookmark = this.getCurrentBookmark();
    } else {
      // This is running on a replica.
      if (bookmark === "first-primary" || this.isWriteQuery(query)) {
        // The primary must handle this request, so we'll proxy the
        // request to the primary.
        const resp = await this.primary.handleWorkerQuery(query, bookmark);
        bookmark = resp.bookmark;
        results = resp.results;
      } else {
        // The replica can handle this request, but only after the
        // database is up-to-date with the bookmark.
        if (bookmark !== "first-unconstrained") {
          await this.waitForBookmark(bookmark);
        }
        results = await this.executeQuery(query);
        bookmark = this.getCurrentBookmark();
      }
    }

    return { results, bookmark };
  }
}
```

The D1 Durable Object first figures out if this instance can handle the query, or if the query needs to be sent to the primary. If the Durable Object can execute the query, it ensures that we execute the query with a bookmark at least as up-to-date as the bookmark requested by the binding.

The upshot is that the three pieces of code work together to ensure that all of the queries in the session see the database in a sequentially consistent order, because each new query will be blocked until it has seen the results of previous queries within the same session.
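The whole flow can be exercised end to end with an in-memory simulation. This is a hypothetical sketch under simplifying assumptions: queries are key/value reads and writes, bookmarks are increasing integers, sessions talk to a single replica, and `catchUpTo` applies the primary's log synchronously to stand in for waiting on the replication stream. The class names are illustrative, not D1's.

```typescript
// Hypothetical in-memory model of the Sessions algorithm.
type Query = { kind: 'read'; key: string } | { kind: 'write'; key: string; value: string }
type Bookmark = number | 'first-primary' | 'first-unconstrained'

interface Reply {
  value?: string
  bookmark: number
}

class Primary {
  readonly wal: { bookmark: number; key: string; value: string }[] = []
  private state = new Map<string, string>()
  private current = 0

  // The primary is always up to date, so it ignores the caller's bookmark.
  execute(q: Query): Reply {
    if (q.kind === 'write') {
      this.current++
      this.wal.push({ bookmark: this.current, key: q.key, value: q.value })
      this.state.set(q.key, q.value)
      return { bookmark: this.current }
    }
    return { value: this.state.get(q.key), bookmark: this.current }
  }
}

class Replica {
  private state = new Map<string, string>()
  private current = 0
  private primary: Primary

  constructor(primary: Primary) {
    this.primary = primary
  }

  // Stand-in for waiting on replication: apply the primary's log entries
  // until this copy has reached `target`.
  private catchUpTo(target: number): void {
    for (const e of this.primary.wal) {
      if (e.bookmark <= this.current) continue
      if (e.bookmark > target) break
      this.state.set(e.key, e.value)
      this.current = e.bookmark
    }
  }

  handle(q: Query, bookmark: Bookmark): Reply {
    // Writes and first-primary requests are proxied to the primary.
    if (q.kind === 'write' || bookmark === 'first-primary') return this.primary.execute(q)
    // Constrained reads wait until this copy is at least as new as the bookmark.
    if (bookmark !== 'first-unconstrained') this.catchUpTo(bookmark)
    return { value: this.state.get(q.key), bookmark: this.current }
  }
}

class Session {
  private bookmark: Bookmark
  private replica: Replica

  constructor(replica: Replica, initial: Bookmark) {
    this.replica = replica
    this.bookmark = initial
  }

  // Send the query with the latest bookmark, then remember any newer one.
  run(q: Query): Reply {
    const reply = this.replica.handle(q, this.bookmark)
    if (typeof this.bookmark !== 'number' || reply.bookmark > this.bookmark) {
      this.bookmark = reply.bookmark
    }
    return reply
  }

  getBookmark(): Bookmark {
    return this.bookmark
  }
}
```

In this model, a write issued through a session on a lagging replica is forwarded to the primary, and the next session read on that replica catches up to the returned bookmark before answering, so it sees the write. An unconstrained read on a freshly booted replica, by contrast, may still return nothing.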

D1's new read replication feature is a significant step towards making globally distributed databases easier to use without sacrificing consistency. With automatically provisioned replicas in every region, your applications can now serve read queries faster while maintaining strong sequential consistency across requests, and keeping your application Worker code simple.

We're excited for developers to explore this feature and see how it improves the performance of your applications. The public beta is just the beginning: we're actively refining and expanding D1's capabilities, including evolving replica placement policies, and your feedback will help shape what's next.

Note that the Sessions API is only available through the D1 Worker Binding for now, and support for the HTTP REST API will follow soon.

Try out D1 read replication today by clicking the "Deploy to Cloudflare" button, check out documentation and examples, and let us know what you build in the D1 Discord channel!

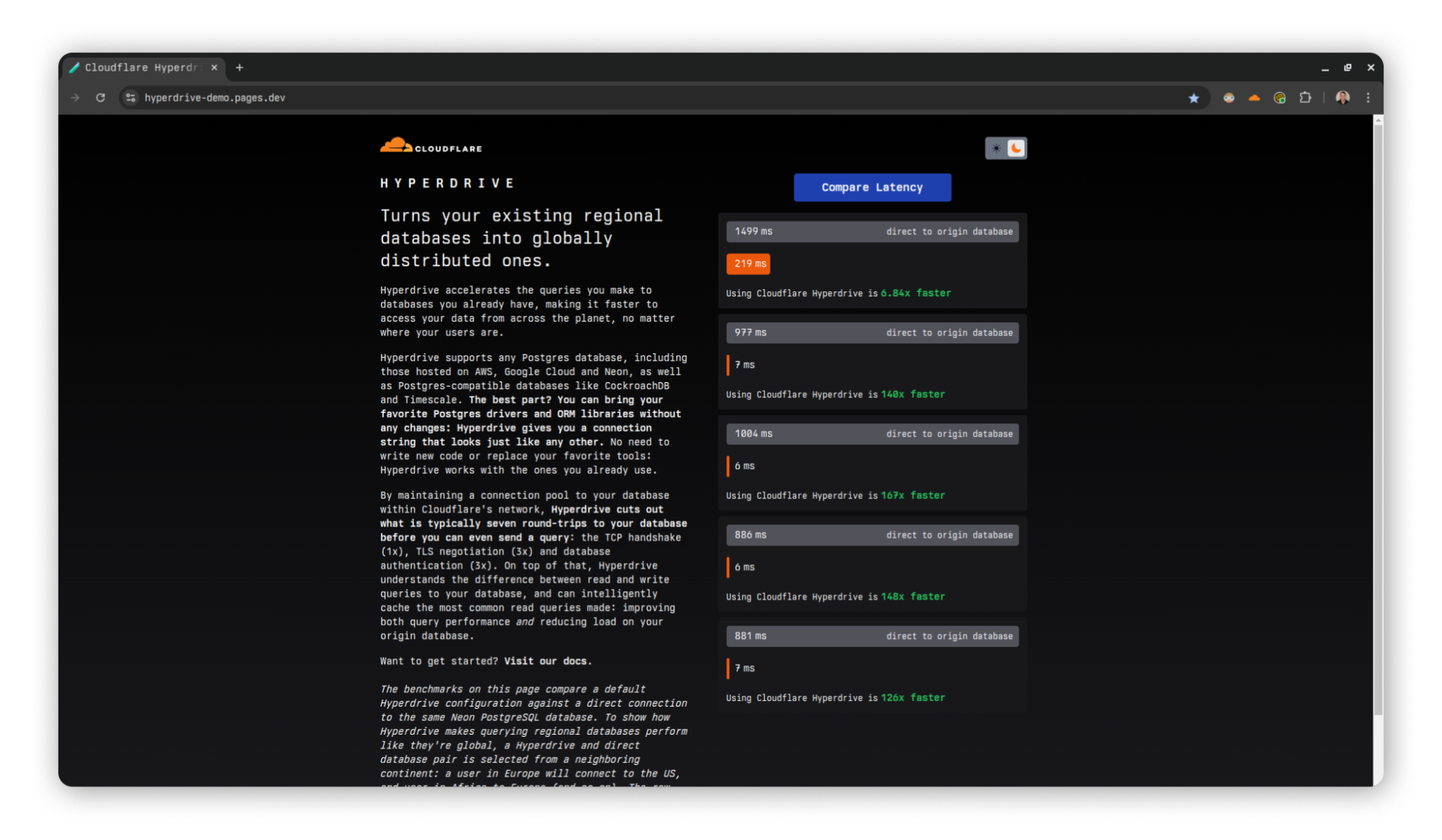

In acknowledgement of its pivotal role in building distributed applications that rely on regional databases, we're making Hyperdrive available on the free plan of Cloudflare Workers!

Hyperdrive enables you to build performant, global apps on Workers with your existing SQL databases. Tell it your database connection string, bring your existing drivers, and Hyperdrive will make connecting to your database faster. No major refactors or convoluted configuration required.

Over the past year, Hyperdrive has become a key service for teams that want to build their applications on Workers and connect to SQL databases. This includes our own engineering teams, with Hyperdrive serving as the tool of choice to connect from Workers to our own Postgres clusters for many of the control-plane actions of our billing, D1, R2, and Workers KV teams (just to name a few).

This has highlighted for us that Hyperdrive is a fundamental building block, and it solves a common class of problems for which there isn't a great alternative. We want to make it possible for everyone building on Workers to connect to their database of choice with the best performance possible, using the drivers and frameworks they already know and love.

To illustrate how much Hyperdrive can improve your application's performance, let's write the world's simplest benchmark. This is obviously not production code, but is meant to be reflective of a common application you'd bring to the Workers platform. We're going to use a simple table, a very popular OSS driver (postgres.js), and run a standard OLTP workload from a Worker. We're going to keep our origin database in London, and query it from Chicago (those locations will come back up later, so keep them in mind).

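```typescript
// This is the test table we're using
// CREATE TABLE IF NOT EXISTS test_data(userId bigint, userText text, isActive bool);
import postgres from 'postgres';

// Assumed binding name: a Hyperdrive binding configured as HYPERDRIVE.
interface Env {
  HYPERDRIVE: Hyperdrive;
}

const direct_conn = '<direct connection string here!>';

// Opens a connection, runs one INSERT and one SELECT, and returns the elapsed time in ms.
async function measureLatency(connString: string, ctx: ExecutionContext) {
  const beginTime = Date.now();
  const sql = postgres(connString);
  await sql`INSERT INTO test_data VALUES (${999}, 'lorem_ipsum', ${true})`;
  await sql`SELECT userId, userText, isActive FROM test_data WHERE userId = ${999}`;
  const latency = Date.now() - beginTime;
  // Close the connection without delaying the response.
  ctx.waitUntil(sql.end());
  return latency;
}

export default {
  async fetch(request: Request, env: Env, ctx: ExecutionContext) {
    // env and ctx are only available inside the handler, so the measurements run here.
    const hyperdrive_conn = env.HYPERDRIVE.connectionString;
    const directLatency = await measureLatency(direct_conn, ctx);
    const hyperdriveLatency = await measureLatency(hyperdrive_conn, ctx);
    return Response.json({ directLatency, hyperdriveLatency });
  },
};
```

The code above: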

Takes a standard database connection string, and uses it to create a database connection.

Loads a user record into the database.

Queries all records for that user.

Measures how long this takes to do with a direct connection, and with Hyperdrive.

When connecting directly to the origin database, this set of queries takes an average of 1200 ms. With absolutely no other changes, just swapping out the connection string for env.HYPERDRIVE.connectionString, this number is cut down to 500 ms (an almost 60% reduction). If you enable Hyperdrive's caching, so that the SELECT query is served from cache, this takes only 320 ms. With this one-line change, Hyperdrive will reduce the latency of this Worker by almost 75%! In addition to this speedup, you also get secure auth and transport, as well as a connection pool to help protect your database from being overwhelmed when your usage scales up. See it for yourself using our demo application.

A demo application comparing latencies between Hyperdrive and direct-to-database connections.

Traditional SQL databases are familiar and powerful, but they are designed to be colocated with long-running compute. They were not conceived in the era of modern serverless applications, and have connection models that don't take the constraints of such an environment into account. Instead, they require highly stateful connections that do not play well with Workers' global and stateless model. Hyperdrive solves this problem by maintaining database connections across Cloudflare's network ready to be used at a moment's notice, caching your queries for fast access, and eliminating round trips to minimize network latency.

With this announcement, many developers are going to be taking a look at Hyperdrive for the first time over the coming weeks and months. To help people dive in and try it out, we think it's time to talk about how Hyperdrive actually works.

Let's talk a bit about database connection poolers, how they work, and what problems they already solve. They are hardly a new technology, after all.

The point of any connection pooler, Hyperdrive or others, is to minimize the overhead of establishing and coordinating database connections. Every new database connection requires additional memory and CPU time from the database server, and this scales only so far as the number of concurrent connections climbs. So the question becomes: how should database connections be shared across clients?

There are three commonly-used approaches for doing so. These are:

Session mode: whenever a client connects, it is assigned a connection of its own until it disconnects. This dramatically reduces the available concurrency, in exchange for much simpler implementation and a broader selection of supported features.

Transaction mode: when a client is ready to send a query or open a transaction, it is assigned a connection on which to do so. This connection will be returned to the pool when the query or transaction concludes. Subsequent queries during the same client session may (or may not) be assigned a different connection.

Statement mode: Like transaction mode, but a connection is given out and returned for each statement. Multi-statement transactions are disallowed.

When building Hyperdrive, we had to decide which of these modes we wanted to use. Each of the approaches implies some fairly serious tradeoffs, so what's the right choice? For a service intended to make using a database from Workers as pleasant as possible, we went with the choice that balances features and performance, and designed Hyperdrive as a transaction-mode pooler. This best serves the goals of supporting a large number of short-lived clients (and therefore very high concurrency), while still supporting the transactional semantics that cause so many people to reach for an RDBMS in the first place.

In terms of this part of its design, Hyperdrive takes its cues from many pre-existing popular connection poolers, and manages operations to allow our users to focus on designing their full-stack applications. There is a configured limit to the number of connections the pool will give out, limits to how long a connection will be held idle until it is allowed to drop and return resources to the database, bookkeeping around prepared statements being shared across pooled connections, and other traditional concerns of the management of these resources to help ensure the origin database is able to run smoothly. These are all described in our documentation.

Ok, so why build Hyperdrive then? Other poolers that solve these problems already exist, so couldn't developers using Workers just run one of those and call it a day? It turns out that connecting to regional poolers from Workers has the same major downside as connecting to regional databases: network latency and round trips.

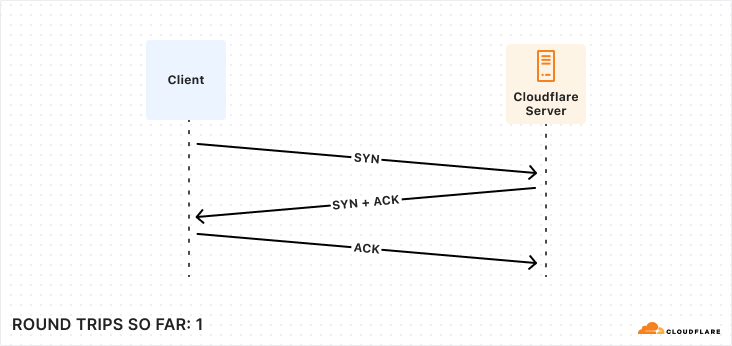

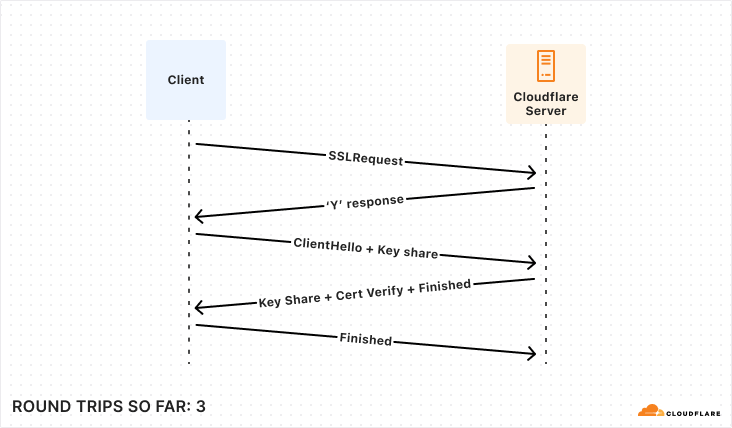

Establishing a connection, whether to a database or a pool, requires many exchanges between the client and server. While this is true for all fully-fledged client-server databases (e.g. MySQL, MongoDB), we are going to focus on the PostgreSQL connection protocol flow in this post. As we work through all of the steps involved, what we most want to keep track of is how many round trips it takes to accomplish. Note that we're mostly concerned about having to wait around while these happen, so "half" round trips such as in the first diagram are not counted. This is because we can send off the message and then proceed without waiting.

The first step to establishing a connection between Postgres client and server is very familiar ground to anyone who's worked much with networks: a TCP startup handshake. Postgres uses TCP for its underlying transport, and so we must have that connection before anything else can happen on top of it.

With our transport layer in place, the next step is to encrypt the connection. The TLS Handshake involves some back-and-forth in its own right, though this has been reduced to just one round trip for TLS 1.3. Below is the simplest and fastest version of this exchange, but there are certainly scenarios where it can be much more complex.

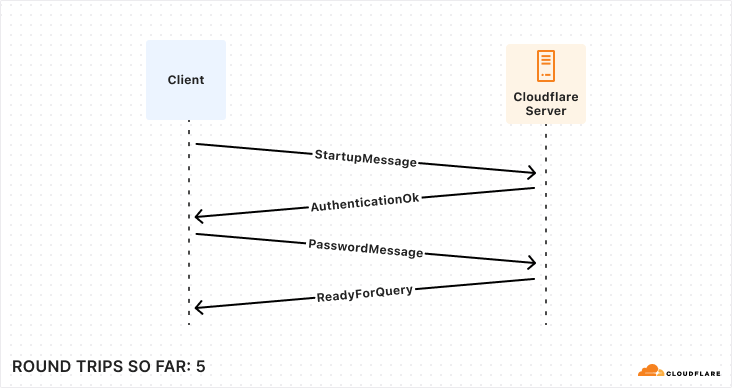

After the underlying transport is established and secured, the application-level traffic can actually start! However, we're not quite ready for queries: the client still needs to authenticate to a specific user and database. Again, there are multiple supported approaches that offer varying levels of speed and security. To make this comparison as fair as possible, we're again going to consider the version that offers the fastest startup (password-based authentication).

So, for those keeping score, establishing a new connection to your database takes a bare minimum of 5 round trips, and can very quickly climb from there.

While the latency of any given network round trip is going to vary based on so many factors that "it depends" is the only meaningful measurement available, some quick benchmarking during the writing of this post shows ~125 ms from Chicago to London. Now multiply that number by 5 round trips and the problem becomes evident: 625 ms to start up a connection is not viable in a distributed serverless environment. So how does Hyperdrive solve it? What if I told you the trick is that we do it all twice? To understand Hyperdrive's secret sauce, we need to dive into Hyperdrive's architecture.

The rest of this post is a deep dive into answering the question of how Hyperdrive does what it does. To give the clearest picture, we're going to talk about some internal subsystems by name. To help keep everything straight, let's start with a short glossary that you can refer back to if needed. These descriptions may not make sense yet, but they will by the end of the article.

| Hyperdrive subsystem name | Brief description |
| --- | --- |
| Client | Lives on the same server as your Worker, talks directly to your database driver. This caches query results and sends queries to Endpoint if needed. |
| Endpoint | Lives in the data center nearest to your origin database, talks to your origin database. This caches query results and houses a pool of connections to your origin database. |
| Edge Validator | Sends a request to a Cloudflare data center to validate that Hyperdrive can connect to your origin database at time of creation. |
| Placement | Builds on top of Edge Validator to connect to your origin database from all eligible data centers, to identify which have the fastest connections. |

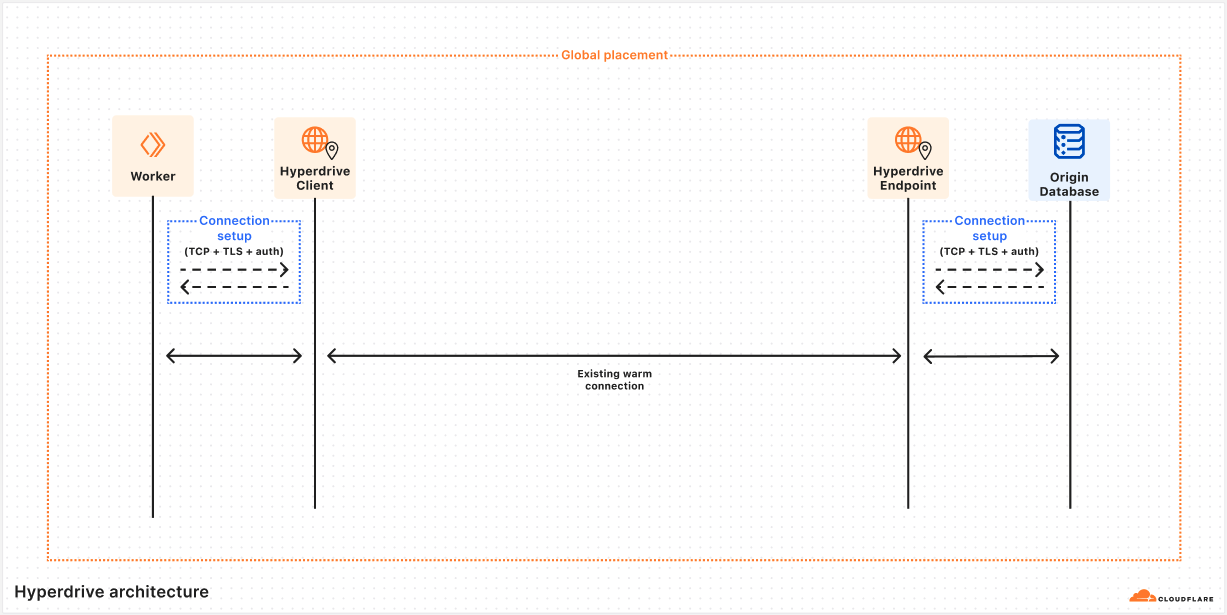

The first subsystem we want to dig into is named Client. Client's first job is to pretend to be a database server. When a user's Worker wants to connect to their database via Hyperdrive, they use a special connection string that the Worker runtime generates on the fly. This tells the Worker to reach out to a Hyperdrive process running on the same Cloudflare server, and direct all traffic to and from the database client to it.

```js
import postgres from "postgres";

// Connect to Hyperdrive. `env` is the Worker's environment, available
// inside the fetch(request, env) handler where this code runs.
const sql = postgres(env.HYPERDRIVE.connectionString);

// sql will now talk over an RPC channel to Hyperdrive, instead of via TCP to Postgres
```

Once this connection is established, the database driver will perform the usual handshake expected of it, with our Client playing the role of a database server and sending the appropriate responses. All of this happens on the same Cloudflare server running the Worker, and we observe that the p90 for all this is 4 ms (p50 is 2 ms). Quite a bit better than 625 ms, but how does that help? The query still needs to get to the database, right?

Client's second main job is to inspect the queries sent from a Worker, and decide whether they can be served from Cloudflare's cache. We'll talk more about that later on. Assuming that there are no cached query results available, Client will need to reach out to our second important subsystem, which we call Endpoint.

Before we dig into the role Endpoint plays, it's worth talking more about how the Client→Endpoint connection works, because it's a key piece of our solution. We have already talked a lot about the price of network round trips, and how a Worker might be quite far away from the origin database, so how does Hyperdrive handle the long trip from the Client running alongside their Worker to the Endpoint running near their database without expensive round trips?

This is accomplished with a very handy bit of Cloudflare's networking infrastructure. When Client gets a cache miss, it will submit a request to our networking platform for a connection to whichever data center Endpoint is running on. This platform keeps a pool of ready TCP connections between all of Cloudflare's data centers, such that we don't need to do any preliminary handshakes to begin sending application-level traffic. You might say we put a connection pooler in our connection pooler.

Over this TCP connection, we send an initialization message that includes all of the buffered query messages the Worker has sent to Client (the mental model would be something like a SYN and a payload all bundled together). Endpoint will do its job processing this query, and respond by streaming the response back to Client, leaving the streaming channel open for any followup queries until Client disconnects. This approach allows us to send queries around the world with zero wasted round trips.

Endpoint has a couple of different jobs to do. Its first job is to pretend to be a database client, and to do the client half of the handshake shown above. Second, it must also do the same query processing that Client does with query messages. Finally, Endpoint will make the same determination on when it needs to reach out to the origin database to get uncached query results.

When Endpoint needs to query the origin database, it will attempt to take a connection out of a limited-size pool of database connections that it keeps. If there is an unused connection available, it is handed out from the pool and used to ferry the query to the origin database, and the results back to Endpoint. Once Endpoint has these results, the connection is immediately returned to the pool so that another Client can use it. These warm connections are usable in a matter of microseconds, which is obviously a dramatic improvement over the round trips from one region to another that a cold startup handshake would require.

If there are no currently unused connections sitting in the pool, it may start up a new one (assuming the pool has not already given out as many connections as it is allowed to). This set of handshakes looks exactly the same as the one Client does, but it happens across the network between a Cloudflare data center and wherever the origin database happens to be. These are the same 5 round trips as our original example, but instead of a full Chicago→London path on every single trip, perhaps it's Virginia→London, or even London→London. Latency here will depend on which data center Endpoint is being housed in.
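The checkout rules described above can be sketched as a small bounded pool. This is a minimal JavaScript illustration under stated assumptions, not Hyperdrive's implementation: `BoundedPool`, `checkout`, `release`, and the `connect` callback are hypothetical names, and a real origin connection is replaced by whatever object `connect` returns.

```js
// Hypothetical bounded connection pool (illustration only, not Hyperdrive's code).
class BoundedPool {
  constructor(maxSize, connect) {
    this.maxSize = maxSize; // configured limit on connections handed out
    this.connect = connect; // opens a new origin connection (cold handshake)
    this.idle = [];         // warm connections ready for immediate reuse
    this.total = 0;         // connections created and not yet closed
    this.waiters = [];      // callers waiting for a connection to be released
  }

  async checkout() {
    // Fast path: reuse a warm, idle connection.
    if (this.idle.length > 0) return this.idle.pop();
    // Otherwise open a new one, if the limit allows it.
    if (this.total < this.maxSize) {
      this.total += 1;
      try {
        return await this.connect();
      } catch (err) {
        this.total -= 1; // a failed handshake does not count toward the limit
        throw err;
      }
    }
    // At the limit: wait until another caller releases a connection.
    return new Promise((resolve) => this.waiters.push(resolve));
  }

  release(conn) {
    // Hand the connection straight to a waiter, or park it as idle.
    const next = this.waiters.shift();
    if (next) next(conn);
    else this.idle.push(conn);
  }
}
```

Handing a released connection directly to a waiting caller, rather than parking it first, is one reasonable design choice: the warm connection goes back to work without an extra trip through the idle list.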

Earlier, we mentioned that Hyperdrive is a transaction-mode pooler. This means that when a driver is ready to send a query or open a transaction it must get a connection from the pool to use. The core challenge for a transaction-mode pooler is in aligning the state of the driver with the state of the connection checked out from the pool. For example, if the driver thinks it's in a transaction, but the database doesn't, then you might get errors or even corrupted results.

Hyperdrive achieves this by ensuring all connections are in the same state when they're checked out of the pool: idle and ready for a query. Where Hyperdrive differs from other transaction-mode poolers is that it does this dance of matching up the states of two different connections across machines, such that there's no need to share state between Client and Endpoint! Hyperdrive can terminate the incoming connection in Client on the same machine running the Worker, and pool the connections to the origin database wherever makes the most sense.

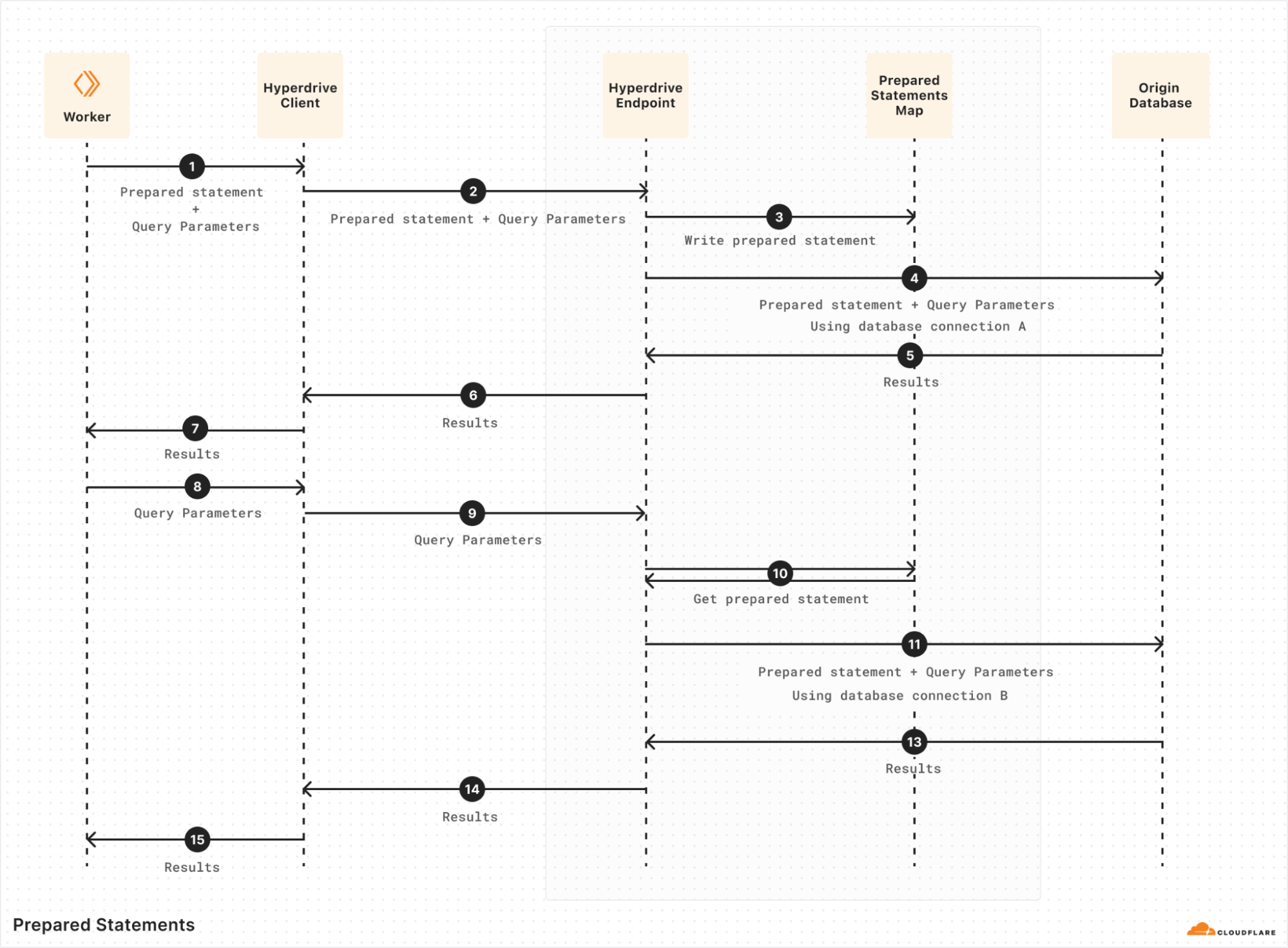

The job of a transaction-mode pooler is a hard one. Database connections are fundamentally stateful, and keeping track of that state is important to maintain our guise when impersonating either a database client or a server. As an example, one of the trickier pieces of state to manage is prepared statements. When a user creates a new prepared statement, the prepared statement is only created on whichever database connection happened to be checked out at that time. Once the user finishes the transaction or query they are processing, the connection holding that statement is returned to the pool. From the user's perspective they're still connected using the same database connection, so a new query or transaction can reasonably expect to use that previously prepared statement. If a different connection is handed out for the next query and the query wants to make use of this resource, the pooler has to do something about it. We went into some depth on this topic in a previous blog post when we released this feature, but in sum, the process looks like this:

Hyperdrive implements this by keeping track of what statements have been prepared by a given client, as well as what statements have been prepared on each origin connection in the pool. When a query comes in expecting to re-use a particular prepared statement (#8 above), Hyperdrive checks if it's been prepared on the checked-out origin connection. If it hasn't, Hyperdrive will replay the wire-protocol message sequence to prepare it on the newly-checked-out origin connection (#10 above) before sending the query over it. Many little corrections like this are necessary to keep the client's connection to Hyperdrive and Hyperdrive's connection to the origin database lined up so that both sides see what they expect.
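A minimal sketch of that bookkeeping follows, assuming simplified message objects in place of real Postgres wire-protocol encoding. `PreparedStatementTracker`, `recordPrepare`, and `replayFor` are hypothetical names, and the Close-then-Parse handling of a name collision is an assumption of this sketch (a real pooler might rename statements instead).

```js
// Hypothetical prepared-statement bookkeeping (illustration only).
// Wire messages are simplified to plain objects.
class PreparedStatementTracker {
  constructor() {
    // What this client believes it has prepared: statement name -> SQL text.
    this.clientStatements = new Map();
  }

  // The client sent Parse, and it was forwarded over `originConn`.
  recordPrepare(name, sqlText, originConn) {
    this.clientStatements.set(name, sqlText);
    originConn.prepared.set(name, sqlText);
  }

  // Called before a query using `name` runs on the checked-out `originConn`.
  // Returns the messages to replay so the origin connection matches the client's view.
  replayFor(name, originConn) {
    const sqlText = this.clientStatements.get(name);
    if (sqlText === undefined) {
      throw new Error(`client never prepared statement ${name}`);
    }
    const existing = originConn.prepared.get(name);
    if (existing === sqlText) return []; // already prepared on this connection
    const messages = [];
    if (existing !== undefined) {
      // Same name, different text on this connection: close it first.
      messages.push({ type: "Close", name });
    }
    messages.push({ type: "Parse", name, query: sqlText });
    originConn.prepared.set(name, sqlText);
    return messages;
  }
}
```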

This "split connection" approach is the founding innovation of Hyperdrive, and one of the most vital aspects of it is how it affects starting up new connections. While the same 5+ round trips must always happen on startup, the actual time spent on the round trips can be dramatically reduced by conducting them over the smallest possible distances. This impact of distance can be so big that there is still a huge latency reduction even though the startup round trips must now happen twice (once between the Worker and Client, and once between Endpoint and your origin database). So how do we decide where to run everything, to lean into that advantage as much as possible?
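To put rough numbers on that claim, here is a back-of-the-envelope model of connecting and running a first query. The 125 ms long-haul RTT and the 4 ms local handshake come from the text above; the 2 ms RTT between Endpoint and a nearby origin is a hypothetical figure, and processing time is ignored.

```js
// Back-of-the-envelope model of "connect, then run the first query".
// Figures marked ASSUMED are illustrative, not measurements.
const HANDSHAKE_ROUND_TRIPS = 5; // minimum startup round trips, per the text
const LONG_HAUL_RTT_MS = 125;    // Chicago -> London, from the text
const LOCAL_HANDSHAKE_MS = 4;    // Worker <-> Client handshake p90, from the text
const NEAR_ORIGIN_RTT_MS = 2;    // ASSUMED: Endpoint <-> nearby origin

// Direct: 5 handshake round trips plus 1 query round trip, all long haul.
function directMs() {
  return (HANDSHAKE_ROUND_TRIPS + 1) * LONG_HAUL_RTT_MS;
}

// Split: local handshake, one long-haul trip over the pre-warmed
// Client -> Endpoint connection, then the query near the origin
// (plus a cold handshake there if no pooled connection is free).
function splitMs(coldOriginConnection) {
  const nearOriginTrips = coldOriginConnection ? HANDSHAKE_ROUND_TRIPS + 1 : 1;
  return LOCAL_HANDSHAKE_MS + LONG_HAUL_RTT_MS + nearOriginTrips * NEAR_ORIGIN_RTT_MS;
}

console.log(directMs());     // 750
console.log(splitMs(true));  // 141
console.log(splitMs(false)); // 131
```

Under these assumptions, the split path pays the long-haul latency once rather than six times, and the remaining round trips are only cheap if Endpoint sits close to the origin.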

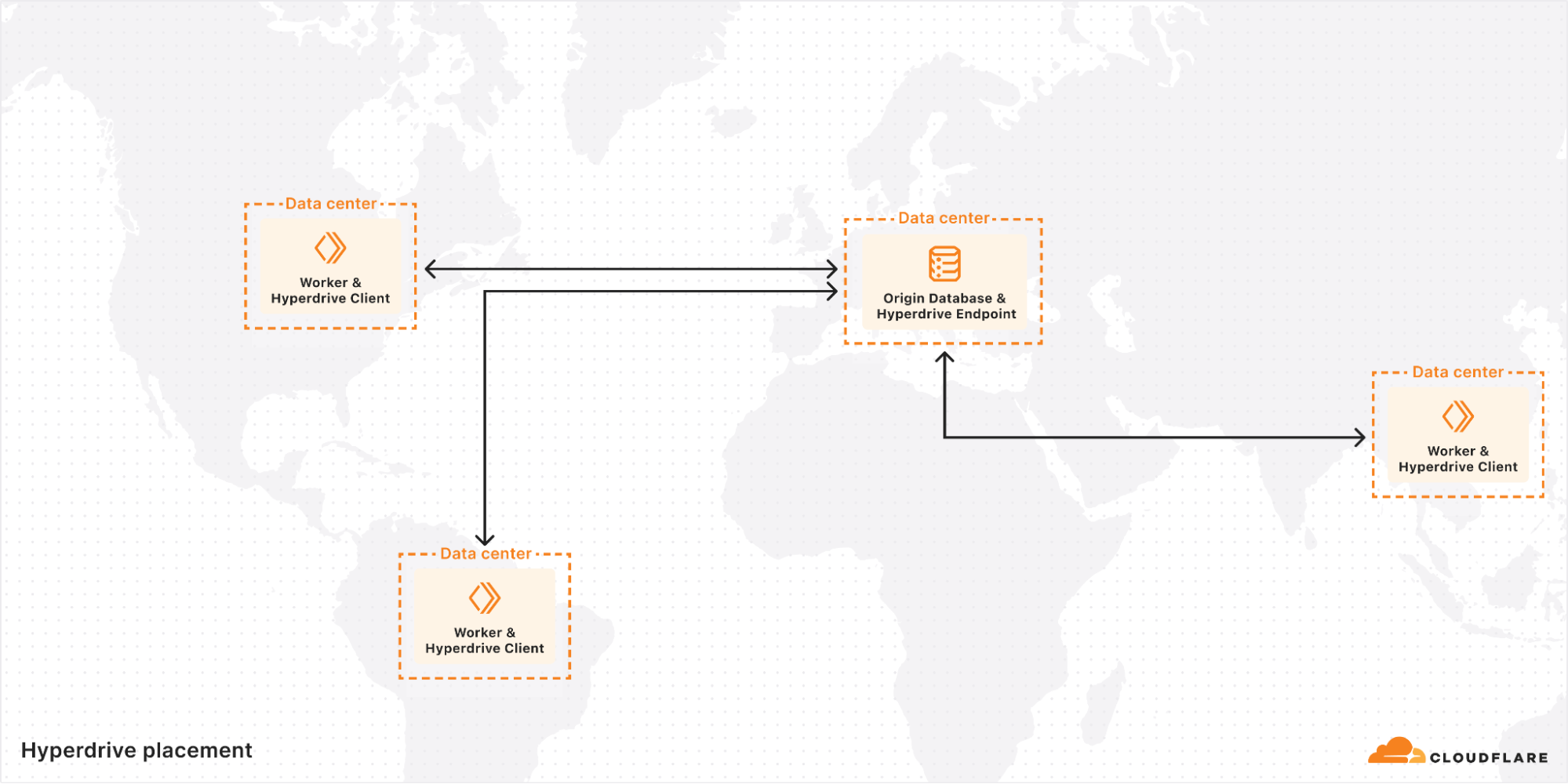

The placement of Client has not really changed since the original design of Hyperdrive. Sharing a server with the Worker sending the queries means that the Worker runtime can connect directly to Hyperdrive with no network hop needed. While there is always room for micro-optimizations, it's hard to do much better than that from an architecture perspective. By far the bigger piece of the latency puzzle is where to run Endpoint.

Hyperdrive keeps a list of data centers that are eligible to house Endpoints, requiring that they have sufficient capacity and the best routes available for pooled connections to use. The key challenge to overcome here is that a database connection string does not tell you where in the world a database actually is. The reality is that reliably going from a hostname to a precise (enough) geographic location is a hard problem, even leaving aside the additional complexity of doing so within a private network. So how do we pick from that list of eligible data centers?

For much of the time since its launch, Hyperdrive solved this with a regional pool approach. When a Worker connected to Hyperdrive, the location of the Worker was used to infer what region the end user was connecting from (e.g. ENAM, WEUR, APAC, etc.; see a rough breakdown here). Data centers to house Endpoints for any given Hyperdrive were deterministically selected from that region's list of eligible options using rendezvous hashing, resulting in one pool of connections per region.
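Rendezvous (highest-random-weight) hashing lets every Worker location independently pick the same data center for a given Hyperdrive without coordination. The sketch below is a generic illustration: the FNV-1a hash, `rendezvousPick`, and the `dc-*` names are assumptions, not the hash or identifiers Hyperdrive uses.

```js
// FNV-1a 32-bit hash: a simple stand-in, not the hash Hyperdrive uses.
function fnv1a(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h >>> 0;
}

// Rendezvous hashing: score every candidate against the key and pick the
// highest score. Anyone with the same key and candidate list gets the same winner.
function rendezvousPick(key, candidates) {
  let best = null;
  let bestScore = -1;
  for (const dc of candidates) {
    const score = fnv1a(`${key}:${dc}`);
    if (score > bestScore) {
      best = dc;
      bestScore = score;
    }
  }
  return best;
}
```

A useful property of this scheme is that removing a data center that was not the winner does not change the winner, so only the Hyperdrives mapped to a removed data center move elsewhere.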

This approach worked well enough, but it had some severe shortcomings. The first and most obvious is that there鈥檚 no guarantee that the data center selected for a given region is actually closer to the origin database than the user making the request. This means that, while you鈥檙e getting the benefit of the excellent routing available on Cloudflare's network, you may be going significantly out of your way to do so. The second downside is that, in the scenario where a new connection must be created, the round trips to do so may be happening over a significantly larger distance than is necessary if the origin database is in a different region than the Endpoint housing the regional connection pool. This increases latency and reduces throughput for the query that needs to instantiate the connection.

The final key downside here is an unfortunate interaction with Smart Placement, a feature of Cloudflare Workers that analyzes the duration of your Worker requests to identify the data center to run your Worker in. With regional Endpoints, the best Smart Placement can possibly do is to put your requests close to the Endpoint for whichever region the origin database is in. Again, there may be other data centers that are closer, but Smart Placement has no way to do better than where the Endpoint is because all Hyperdrive queries must route through it.

We recently shipped some improvements to this system that significantly enhanced performance. The new system discards the concept of regional pools entirely, in favor of a single global Endpoint for each Hyperdrive that is in the eligible data center as close as possible to the origin database.

The way we solved locating the origin database such that we can accomplish this was ultimately very straightforward. We already had a subsystem to confirm, at the time of creation, that Hyperdrive could connect to an origin database using the provided information. We call this subsystem our Edge Validator.

It's bad user experience to allow someone to create a Hyperdrive, and then find out when they go to use it that they mistyped their password or something. Now they're stuck trying to debug with extra layers in the way, with a Hyperdrive that can't possibly work. Instead, whenever a Hyperdrive is created, the Edge Validator will send a request to an arbitrary data center to use its instance of Hyperdrive to connect to the origin database. If this connection fails, the creation of the Hyperdrive will also fail, giving immediate feedback to the user at the time it is most helpful.

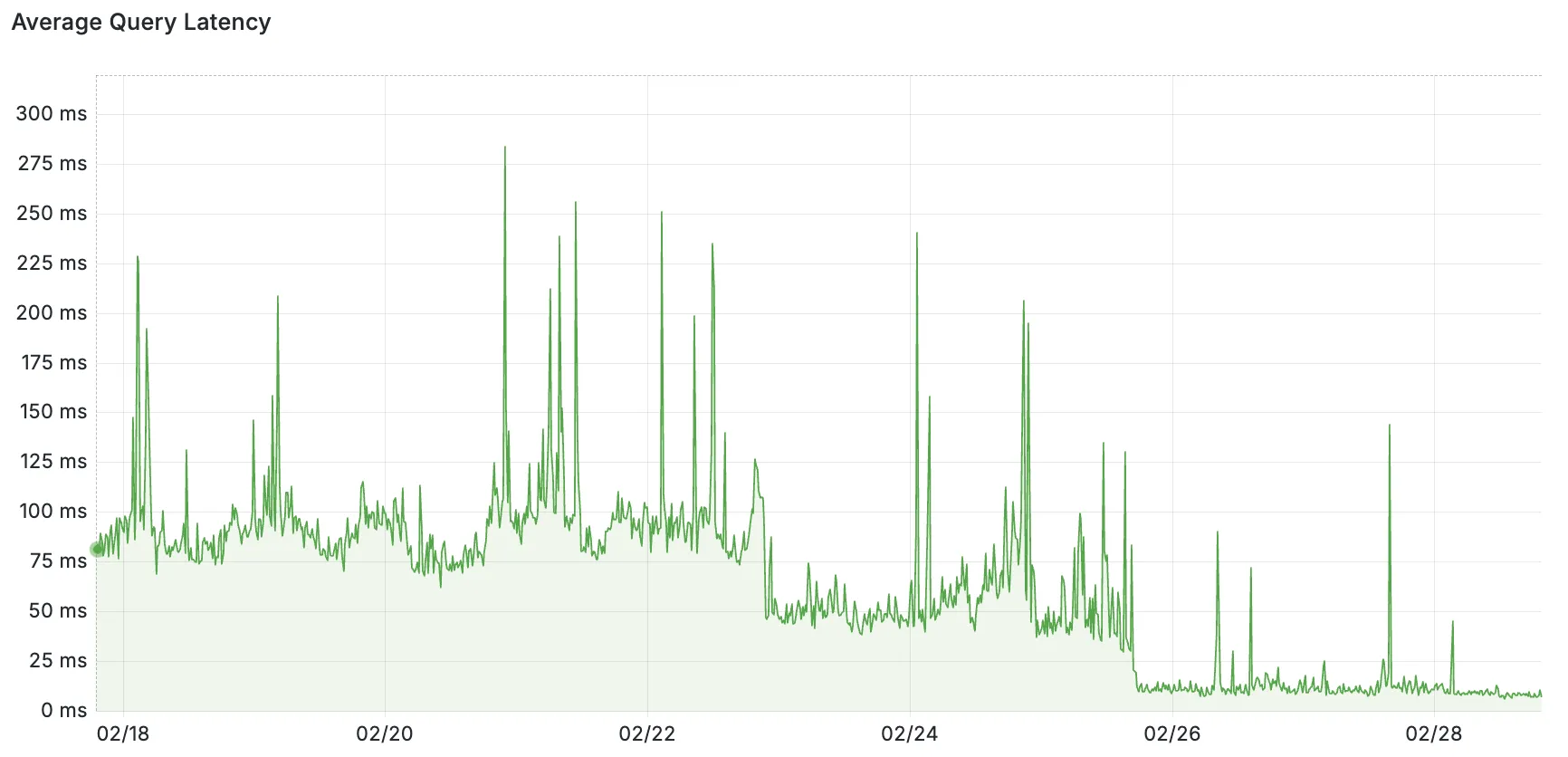

With our new subsystem, affectionately called Placement, we now have a solution to the geolocation problem. After Edge Validator has confirmed that the provided information works and the Hyperdrive is created, an extra step is run in the background. Placement will perform the exact same connection routine, except instead of being done once from an arbitrary data center, it is run a handful of times from every single data center that is eligible to house Endpoints. The latency of establishing these connections is collected, and the average is sent back to a central instance of Placement. The data centers that can connect to the origin database the fastest are, by definition, where we want to run Endpoint for this Hyperdrive. The list of these is saved, and at runtime is used to select the Endpoint best suited to housing the pool of connections to the origin database.
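The selection step can be sketched as ranking data centers by mean connection latency. This is a hypothetical illustration: `rankDataCenters`, the shape of `samplesByDc`, and the `keep` parameter (how many candidates to save) are assumptions, since the text does not say how many data centers are retained.

```js
// Hypothetical Placement ranking: average each data center's connection
// latencies (ms) and keep the fastest `keep` of them.
function rankDataCenters(samplesByDc, keep) {
  const averages = [];
  for (const [dc, samples] of Object.entries(samplesByDc)) {
    if (samples.length === 0) continue; // no successful connections: skip it
    const mean = samples.reduce((a, b) => a + b, 0) / samples.length;
    averages.push({ dc, mean });
  }
  averages.sort((a, b) => a.mean - b.mean); // fastest first
  return averages.slice(0, keep).map((e) => e.dc);
}
```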

Given that the secret sauce of Hyperdrive is in managing and minimizing the latency of establishing these connections, moving Endpoints right next to their origin databases proved to be pretty impactful.

Pictured: query latency as measured from Endpoint to origin databases. The backfill of Placement to existing customers was done in stages on 02/22 and 02/25.

While we went in a different direction, it's worth acknowledging that other teams have solved this same problem with a very different approach. Custom database drivers, usually called "serverless drivers," have made several optimization efforts to reduce both the number of round trips and how quickly they can be conducted, while still connecting directly from your client to your database in the traditional way. While these drivers are impressive, we chose not to go this route for a couple of reasons.

First off, a big part of the appeal of using Postgres is its vibrant ecosystem. Odds are good you've used Postgres before, and it can probably help solve whichever problem you're tackling with your newest project. This familiarity and shared knowledge across projects is an absolute superpower. We wanted to lean into this advantage by supporting the most popular drivers already in this ecosystem, instead of fragmenting it by adding a competing one.

Second, Hyperdrive also functions as a cache for individual queries (a bit of trivia: its name while still in Alpha was actually sql-query-cache). Doing this as effectively as possible for distributed users requires some clever positioning of where exactly the query results should be cached. One of the unique advantages of running a distributed service on Cloudflare's network is that we have a lot of flexibility on where to run things, and can confidently surmount challenges like those. If we're going to be playing three-card monte with where things are happening anyway, it makes the most sense to favor that route for solving the other problems we're trying to tackle too.

As we've talked about in the past, Hyperdrive buffers protocol messages until it has enough information to know whether a query can be served from cache. In a post about how Hyperdrive works it would be a shame to skip talking about how exactly we cache query results, so let's close by diving into that.

First and foremost, Hyperdrive uses Cloudflare's cache, because when you have technology like that already available to you, it'd be silly not to use it. This has some implications for our architecture that are worth exploring.

The cache exists in each of Cloudflare's data centers, and by default these are separate instances. That means that a Client operating close to the user has one, and an Endpoint operating close to the origin database has one. However, historically we weren't able to take full advantage of that, because the logic for interacting with cache was tightly bound to the logic for managing the pool of connections.

Part of our recent architecture refactoring effort, where we switched to global Endpoints, was to split up this logic such that we can take advantage of Client's cache too. This was necessary because, with Endpoint moving to a single location for each Hyperdrive, users from other regions would otherwise have gotten cache hits served from almost as far away as the origin.

With the new architecture, the role of Client during active query handling transitioned from that of a "dumb pipe" to more like what Endpoint had always been doing. It now buffers protocol messages, and serves results from cache if possible. In those scenarios, Hyperdrive's traffic never leaves the data center that the Worker is running in, reducing query latencies from 20-70 ms to an average of around 4 ms. As a side benefit, it also substantially reduces the network bandwidth Hyperdrive uses to serve these queries. A win-win!

In the scenarios where query results can't be served from the cache in Client's data center, all is still not lost. Endpoint may also have cached results for this query, because it can field traffic from many different Clients around the world. If so, it will provide these results back to Client, along with how much time is remaining before they expire, such that Client can both return them and store them correctly into its own cache. Likewise, if Endpoint does need to go to the origin database for results, they will be stored into both Client and Endpoint caches. This ensures that followup queries from that same Client data center will get the happy path with single-digit ms response times, and it also reduces load on the origin database from any other Client's queries. This functions similarly to how Cloudflare's Tiered Cache works, with Endpoint's cache functioning as a final layer of shielding for the origin database.
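The two-tier lookup can be sketched as follows, assuming an injectable clock and in-memory maps in place of Cloudflare's cache. `TtlCache`, `endpointQuery`, and `clientQuery` are hypothetical names; the point illustrated is that Client stores an Endpoint hit with the remaining TTL, so both copies expire together.

```js
// Hypothetical TTL cache with an injectable clock (stand-in for Cloudflare's cache).
class TtlCache {
  constructor(now) {
    this.now = now;
    this.entries = new Map(); // key -> { value, expiresAt }
  }
  get(key) {
    const e = this.entries.get(key);
    if (!e) return undefined;
    if (e.expiresAt <= this.now()) {
      this.entries.delete(key); // expired
      return undefined;
    }
    return { value: e.value, ttlMs: e.expiresAt - this.now() }; // remaining TTL
  }
  set(key, value, ttlMs) {
    this.entries.set(key, { value, expiresAt: this.now() + ttlMs });
  }
}

// Endpoint tier: serve from its cache, else query the origin and cache the result.
async function endpointQuery(endpointCache, queryOrigin, key, defaultTtlMs) {
  const hit = endpointCache.get(key);
  if (hit) return hit; // includes the remaining TTL
  const value = await queryOrigin(key);
  endpointCache.set(key, value, defaultTtlMs);
  return { value, ttlMs: defaultTtlMs };
}

// Client tier: serve locally if possible, else ask Endpoint and store
// the result with the TTL Endpoint reported.
async function clientQuery(clientCache, endpoint, key) {
  const hit = clientCache.get(key);
  if (hit) return hit.value; // traffic never leaves this data center
  const { value, ttlMs } = await endpoint(key);
  clientCache.set(key, value, ttlMs);
  return value;
}
```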

With this announcement of a Free Plan for Hyperdrive, and newly armed with the knowledge of how it works under the hood, we hope you'll enjoy building your next project with it! You can get started with a single Wrangler command (or using the dashboard):

```sh
wrangler hyperdrive create postgres-hyperdrive \
  --connection-string="postgres://user:password@db-host.example.com:5432/defaultdb"
```

We've also included a Deploy to Cloudflare button below to let you get started with a sample Worker app using Hyperdrive: just bring your existing Postgres database! If you have any questions or ideas for future improvements, please feel free to visit our Discord channel!

At peak, we are moving 107 GiB/s of compressed data, either pushing it directly to customers or subjecting it to additional queueing and batching.

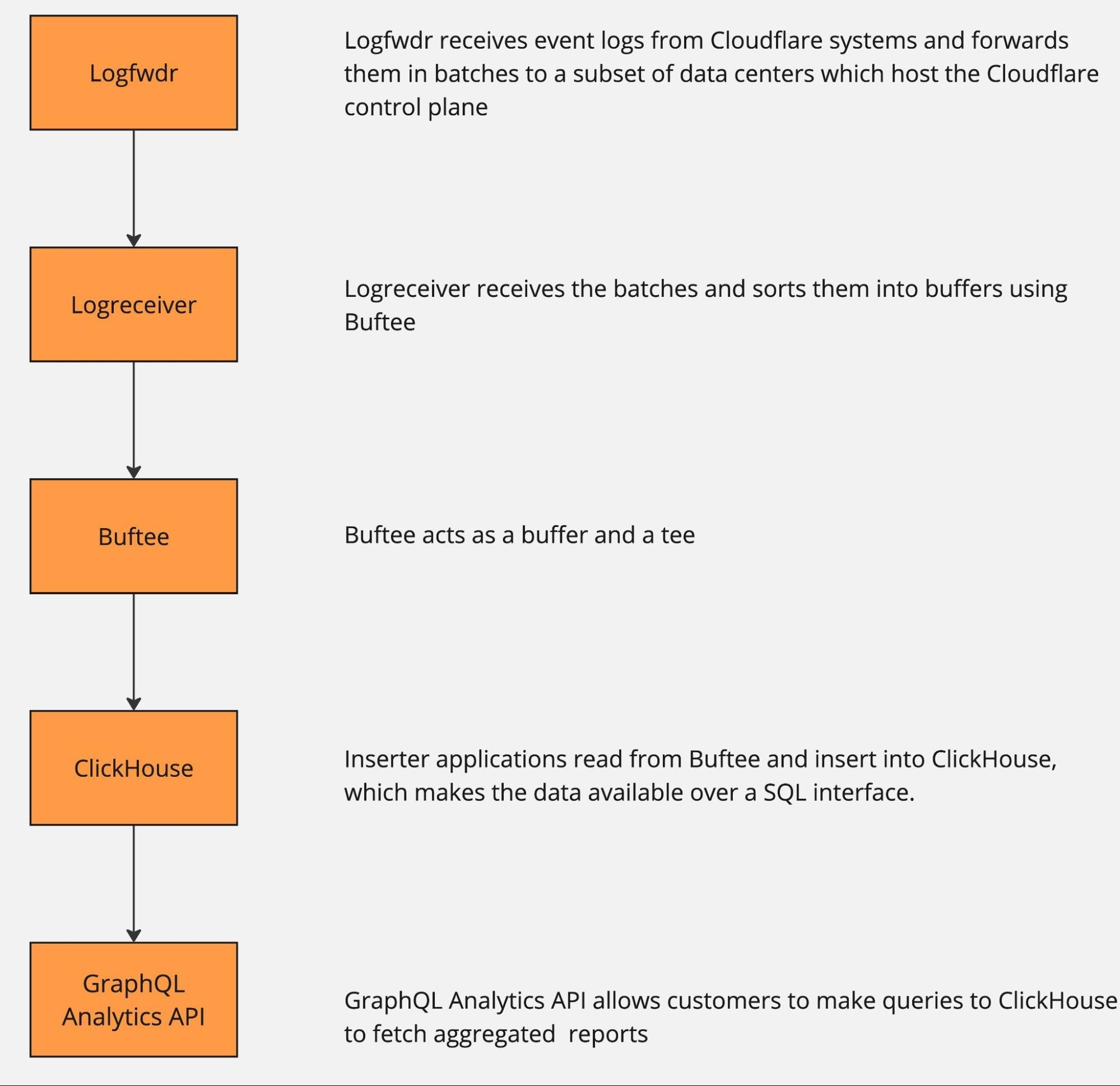

All of these data streams power things like Logs, Analytics, and billing, as well as other products, such as training machine learning models for bot detection. This blog post is focused on techniques we use to efficiently and accurately deal with the high volume of data we ingest for our Analytics products. A previous blog post provides a deeper dive into the data pipeline for Logs.

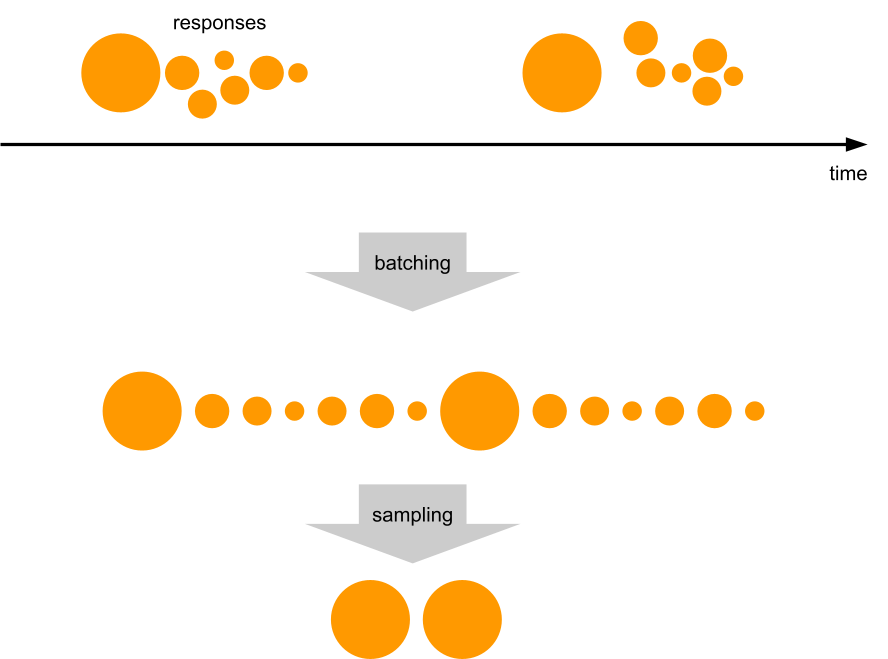

The pipeline can be roughly described by the following diagram.

The data pipeline has multiple stages, and each can and will naturally break or slow down because of hardware failures or misconfiguration. And when that happens, there is just too much data to be able to buffer it all for very long. Eventually some will get dropped, causing gaps in analytics and a degraded product experience unless proper mitigations are in place.

How does one retain valuable information from more than half a billion events per second, when some must be dropped? Drop it in a controlled way, by downsampling.

Here is a visual analogy showing the difference between uncontrolled data loss and downsampling. In both cases the same number of pixels were delivered. One is a higher resolution view of just a small portion of a popular painting, while the other shows the full painting, albeit blurry and highly pixelated.

As we noted above, any point in the pipeline can fail, so we want the ability to downsample at any point as needed. Some services proactively downsample data at the source before it even hits Logfwdr. This makes the information extracted from that data a little bit blurry, but much more useful than what otherwise would be delivered: random chunks of the original with gaps in between, or even nothing at all. The amount of "blur" is outside our control (we make our best effort to deliver full data), but there is a robust way to estimate it, as discussed in the next section.

Logfwdr can decide to downsample data sitting in the buffer when it overflows. Logfwdr handles many data streams at once, so we need to prioritize them by assigning each data stream a weight and then applying max-min fairness to better utilize the buffer. It allows each data stream to store as much as it needs, as long as the whole buffer is not saturated. Once it is saturated, streams divide it fairly according to their weighted size.
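Weighted max-min fairness can be computed by "water-filling": streams whose demand fits within their weighted share get everything they ask for, and the leftover capacity is re-divided among the rest. This is a generic sketch of the technique, not Logfwdr's Go code; `weightedMaxMin` and the `{ name, demand, weight }` stream shape are hypothetical.

```js
// Weighted max-min fair allocation of `capacity` bytes by water-filling.
function weightedMaxMin(capacity, streams) {
  const alloc = new Map();
  let remaining = capacity;
  let active = streams.slice();
  while (active.length > 0) {
    const totalWeight = active.reduce((s, x) => s + x.weight, 0);
    const perWeight = remaining / totalWeight;
    // Streams whose whole demand fits within their share are fully satisfied.
    const satisfied = active.filter((x) => x.demand <= perWeight * x.weight);
    if (satisfied.length === 0) {
      // Everyone left is constrained: split what remains in proportion to weight.
      for (const x of active) alloc.set(x.name, perWeight * x.weight);
      break;
    }
    for (const x of satisfied) {
      alloc.set(x.name, x.demand);
      remaining -= x.demand;
    }
    active = active.filter((x) => x.demand > perWeight * x.weight);
  }
  return alloc;
}
```

When total demand fits in the buffer, every stream simply gets its demand, matching the "store as much as it needs" behavior described above.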

In our implementation (Go), each data stream is driven by a goroutine, and they cooperate via channels. They consult a single tracker object every time they allocate and deallocate memory. The tracker uses a max-heap to always know who the heaviest participant is and what the total usage is. Whenever the total usage goes over the limit, the tracker repeatedly sends the "please shed some load" signal to the heaviest participant, until the usage is again under the limit.

The effect of this is that healthy streams, which buffer a tiny amount, allocate whatever they need without losses. But any lagging streams split the remaining memory allowance fairly.
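The tracker loop described above might look roughly like this. Assumptions: a linear scan stands in for the max-heap, "heaviest" means usage divided by weight, and each stream's `shed` callback (hypothetical) downsamples and reports how many bytes it freed. The original is Go with goroutines and channels; this JavaScript version is synchronous for brevity, and `MemoryTracker` is a hypothetical name.

```js
// Hypothetical memory tracker that sheds load from the heaviest stream.
class MemoryTracker {
  constructor(limit) {
    this.limit = limit;
    this.streams = new Map(); // name -> { usage, weight, shed }
  }
  register(name, weight, shed) {
    this.streams.set(name, { usage: 0, weight, shed });
  }
  total() {
    let t = 0;
    for (const s of this.streams.values()) t += s.usage;
    return t;
  }
  heaviest() {
    // Linear scan in place of a max-heap; weighted size = usage / weight.
    let best = null;
    for (const s of this.streams.values()) {
      if (!best || s.usage / s.weight > best.usage / best.weight) best = s;
    }
    return best;
  }
  allocate(name, bytes) {
    this.streams.get(name).usage += bytes;
    // Over the limit: keep asking the heaviest stream to shed until we fit.
    while (this.total() > this.limit) {
      const s = this.heaviest();
      const freed = s.shed(s.usage);
      if (freed <= 0) break; // nothing left to shed; avoid spinning forever
      s.usage -= freed;
    }
  }
  release(name, bytes) {
    this.streams.get(name).usage -= bytes;
  }
}
```

With a shed callback such as `(usage) => Math.ceil(usage / 2)` (downsampling by half), a healthy stream with a tiny buffer is never asked to shed while a lagging stream absorbs the cuts.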

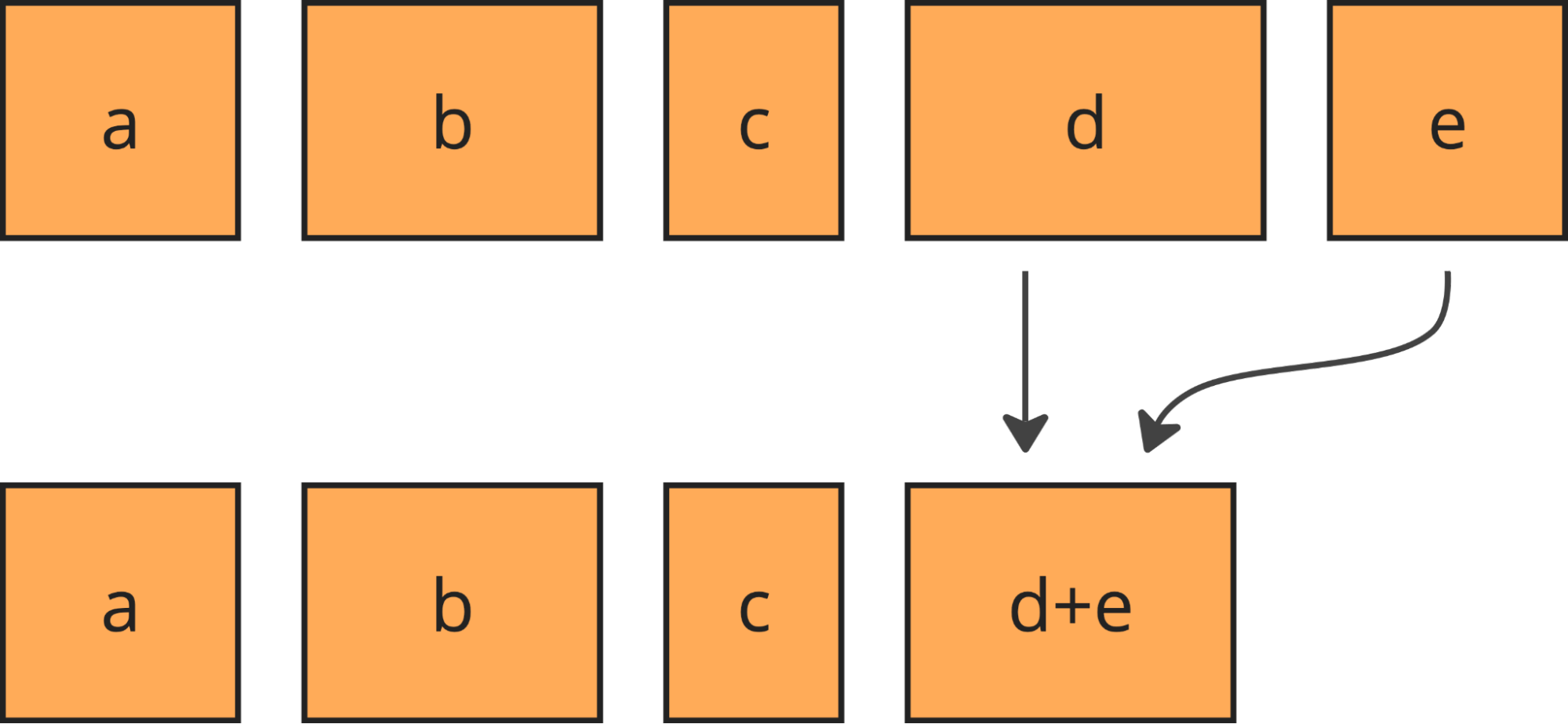

We downsample more or less uniformly, by always taking some of the least downsampled batches from the buffer (using a min-heap to find those) and merging them together upon downsampling.

Merging keeps the batches roughly the same size and their number under control.
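A sketch of that merge-on-downsample step, assuming each event carries its own sample interval. `downsampleLeast` and the buffer-of-arrays shape are hypothetical, sorting stands in for the min-heap, and `rng` is injectable so the behavior can be tested.

```js
// Smallest sample interval in a batch: lower means less downsampled so far.
function minInterval(batch) {
  return Math.min(...batch.map((e) => e.interval));
}

// Take the k least-downsampled batches, thin them by `factor`, merge them.
function downsampleLeast(buffer, k, factor, rng = Math.random) {
  // Least downsampled first (the text uses a min-heap; sorting keeps this short).
  buffer.sort((a, b) => minInterval(a) - minInterval(b));
  const picked = buffer.splice(0, Math.min(k, buffer.length));
  const merged = [];
  for (const batch of picked) {
    for (const e of batch) {
      // Independent Bernoulli trial: keep with probability 1 / factor.
      if (rng() < 1 / factor) {
        // Inclusion probabilities multiply, so sample intervals multiply too.
        merged.push({ ...e, interval: e.interval * factor });
      }
    }
  }
  if (merged.length > 0) buffer.push(merged); // merging keeps the batch count down
  return buffer;
}
```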

Downsampling is cheap, but since data in the buffer is compressed, it causes recompression, which is the single most expensive thing we do to the data. But using extra CPU time is the last thing you want to do when the system is under heavy load! We compensate for the recompression costs by starting to downsample the fresh data as well (before it gets compressed for the first time) whenever the stream is in the "shed the load" state.

We called this approach "bottomless buffers", because you can squeeze effectively infinite amounts of data in there, and it will just automatically be thinned out. Bottomless buffers resemble reservoir sampling, where the buffer is the reservoir and the population comes as the input stream. But there are some differences. The first is that in our pipeline the input stream of data never ends, while reservoir sampling assumes it ends to finalize the sample. The second is that the resulting sample also never ends.

Let's look at the next stage in the pipeline: Logreceiver. It sits in front of a distributed queue. The purpose of Logreceiver is to partition each stream of data by a key that makes it easier for Logpush, Analytics inserters, or some other process to consume.

Logreceiver proactively performs adaptive sampling of analytics. This improves the accuracy of analytics for small customers (receiving on the order of 10 events per day), while more aggressively downsampling large customers (millions of events per second). Logreceiver then pushes the same data at multiple resolutions (100%, 10%, 1%, etc.) into different topics in the distributed queue. This allows it to keep pushing something rather than nothing when the queue is overloaded, by just skipping writing the high-resolution samples of data.
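One way to produce several resolutions in a single pass is Poisson sampling with one uniform draw per event, so each coarser sample is a subset of the finer ones. This nesting, `multiResolution`, and the integer `intervals` list are assumptions for illustration; the text does not say how Logreceiver draws its samples.

```js
// Hypothetical multi-resolution sampler. `intervals` such as [1, 10, 100]
// correspond to the 100%, 10%, and 1% resolutions.
function multiResolution(events, intervals, rng = Math.random) {
  const tiers = new Map(intervals.map((n) => [n, []]));
  for (const e of events) {
    const u = rng(); // one draw per event makes coarser tiers subsets of finer ones
    for (const n of intervals) {
      // Keep with probability 1 / n; upstream sample intervals multiply.
      if (u * n < 1) tiers.get(n).push({ ...e, interval: e.interval * n });
    }
  }
  return tiers;
}
```

If the queue is overloaded, a producer built this way could skip writing the finer tiers and still publish the coarser ones.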

The same goes for Inserters: they can skip reading or writing high-resolution data. The Analytics APIs can skip reading high resolution data. The analytical database might be unable to read high resolution data because of overload or degraded cluster state or because there is just too much to read (very wide time range or very large customer). Adaptively dropping to lower resolutions allows the APIs to return some results in all of those cases.

Okay, we have some downsampled data in the analytical database. It looks like the original data, but with some rows missing. How do we make sense of it? How do we know if the results can be trusted?

Let's look at the math.

Since the amount of sampling can vary over time and between nodes in the distributed system, we need to store this information along with the data. With each event $x_i$ we store its sample interval, which is the reciprocal of its inclusion probability: $\pi_i = \frac{1}{\text{sample interval}}$. For example, if we sample 1 in every 1,000 events, each of the events included in the resulting sample will have $\pi_i = 0.001$, so its sample interval will be 1,000. When we further downsample that batch of data, the inclusion probabilities (and the sample intervals) multiply together: a 1 in 1,000 sample from a 1 in 1,000 sample is a 1 in 1,000,000 sample of the original population. The sample interval of an event can also be interpreted roughly as the number of original events that this event represents, so in the literature it is known as the weight $w_i = \frac{1}{\pi_i}$. We rely on the Horvitz-Thompson estimator (HT, paper) to derive analytics about $x_i$. It gives two estimates: the analytical estimate (e.g. the population total or size) and the estimate of the variance of that estimate. The latter enables us to figure out how accurate the results are by building confidence intervals. They define ranges that cover the true value with a given probability (confidence level). A typical confidence level is 0.95, at which a confidence interval (a, b) means you can be 95% confident that the true SUM or COUNT is between a and b.

So far, we know how to use the HT estimator for doing SUM, COUNT, and AVG.

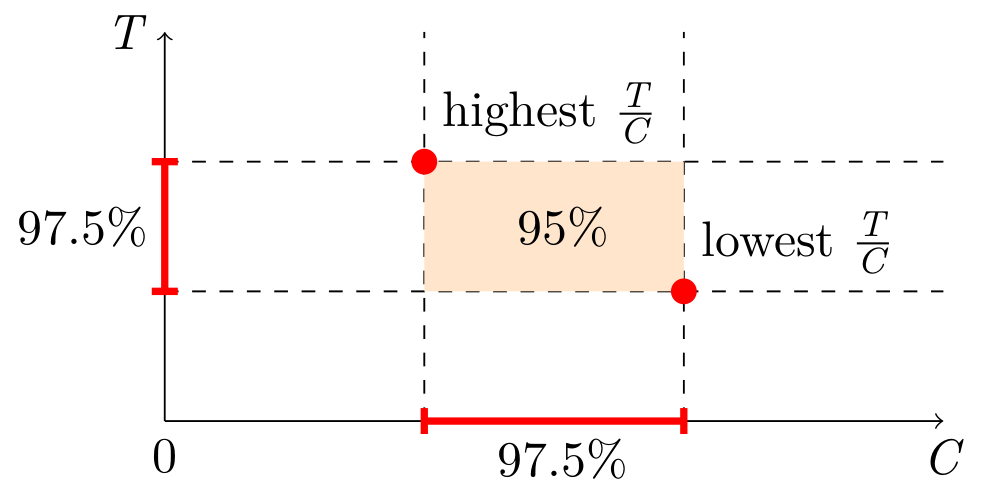

Given a sample of size $n$, consisting of values $x_i$ and their inclusion probabilities $\pi_i$, the HT estimator for the population total (i.e. SUM) is $$\widehat{T}=\sum_{i=1}^n{\frac{x_i}{\pi_i}}=\sum_{i=1}^n{x_i w_i}.$$ The estimated variance of $\widehat{T}$ is $$\widehat{V}(\widehat{T}) = \sum_{i=1}^n{x_i^2 \frac{1 - \pi_i}{\pi_i^2}} + \sum_{i \neq j}^n{x_i x_j \frac{\pi_{ij} - \pi_i \pi_j}{\pi_{ij} \pi_i \pi_j}},$$ where $\pi_{ij}$ is the probability of both the $i$-th and $j$-th events being sampled together.

We use Poisson sampling, where each event is subjected to an independent Bernoulli trial ("coin toss") which determines whether the event becomes part of the sample. Since each trial is independent, we can equate $\pi_{ij} = \pi_i \pi_j$, which, when plugged into the variance estimator above, turns the right-hand sum to zero: $$\widehat{V}(\widehat{T}) = \sum_{i=1}^n{x_i^2 \frac{1 - \pi_i}{\pi_i^2}} + \sum_{i \neq j}^n{x_i x_j \frac{0}{\pi_{ij} \pi_i \pi_j}},$$ thus $$\widehat{V}(\widehat{T}) = \sum_{i=1}^n{x_i^2 \frac{1 - \pi_i}{\pi_i^2}} = \sum_{i=1}^n{x_i^2 w_i (w_i-1)}.$$

For COUNT we use the same estimator, but plug in $x_i = 1$. This gives us: $$\begin{align} \widehat{C} &= \sum_{i=1}^n{\frac{1}{\pi_i}} = \sum_{i=1}^n{w_i},\\ \widehat{V}(\widehat{C}) &= \sum_{i=1}^n{\frac{1 - \pi_i}{\pi_i^2}} = \sum_{i=1}^n{w_i (w_i-1)}. \end{align}$$

For AVG we would use $$\begin{align} \widehat{\mu} &= \frac{\widehat{T}}{N},\\ \widehat{V}(\widehat{\mu}) &= \frac{\widehat{V}(\widehat{T})}{N^2}, \end{align}$$ if we could, but the original population size $N$ is not known: it is not stored anywhere, and it is not even possible to store because of custom filtering at query time. Plugging in $\widehat{C}$ in place of $N$ only partially works. It gives a valid estimator for the mean itself, but not for its variance, so the constructed confidence intervals are unusable.
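The SUM and COUNT estimators above translate directly into code. This is a minimal sketch assuming each sampled event is given as `{ x, w }`, with `w` the sample interval; `htSum` and `htCount` are hypothetical helper names.

```js
// Horvitz-Thompson estimates under Poisson sampling, with w = 1 / pi.
function htSum(sample) {
  let t = 0;
  let vt = 0;
  for (const { x, w } of sample) {
    t += x * w;                // T-hat = sum of x_i * w_i
    vt += x * x * w * (w - 1); // V-hat(T-hat) = sum of x_i^2 * w_i * (w_i - 1)
  }
  return { estimate: t, variance: vt };
}

// COUNT is the same estimator with x_i = 1.
function htCount(sample) {
  return htSum(sample.map(({ w }) => ({ x: 1, w })));
}
```

Events with $w_i = 1$ (never downsampled) contribute nothing to the variance, as expected from the $w_i(w_i-1)$ factor.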
In all cases, the corresponding pair of estimates is used as the mean $\mu$ and variance $\sigma^2$ of a normal distribution (by the central limit theorem), and the bounds of the confidence interval at confidence level $\alpha$ are then: $$\Big( \mu - \Phi^{-1}\big(\frac{1 + \alpha}{2}\big) \cdot \sigma, \quad \mu + \Phi^{-1}\big(\frac{1 + \alpha}{2}\big) \cdot \sigma\Big).$$

We do not know $N$, but there is a workaround: simultaneous confidence intervals. Construct confidence intervals for SUM and COUNT independently, and then combine them into a confidence interval for AVG. This is known as the Bonferroni method. It requires generating wider intervals for SUM and COUNT, each with half the "inconfidence" $1 - \alpha$: for a 95% AVG interval, the SUM and COUNT intervals are built at 97.5%. Here is a simplified visual representation, but the actual estimator will have to take into account the possibility of the orange area going below zero.
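A minimal sketch of the interval and Bonferroni steps, assuming the HT estimates $t, v_t, c, v_c$ are already computed. `statistics.NormalDist().inv_cdf` reproduces the $\Phi^{-1}$ constants; `avg_interval` is a hypothetical helper showing one way to handle the "below zero" case mentioned above by taking the extreme ratios over the SUM by COUNT rectangle. It is not necessarily how the production estimator does it.

```python
import math
from statistics import NormalDist

def z_score(level):
    """Two-sided normal quantile Phi^{-1}((1 + level) / 2)."""
    return NormalDist().inv_cdf((1 + level) / 2)

def normal_interval(estimate, variance, level):
    """Symmetric confidence interval around an HT estimate."""
    err = z_score(level) * math.sqrt(variance)
    return estimate - err, estimate + err

def avg_interval(t, vt, c, vc, level=0.95):
    """Bonferroni interval for AVG = SUM / COUNT.

    SUM and COUNT intervals are each built at 1 - (1 - level) / 2, then the
    AVG bounds are the extreme ratios over that rectangle. Assumes c > 0.
    """
    each = 1 - (1 - level) / 2
    lo_t, hi_t = normal_interval(t, vt, each)
    lo_c, hi_c = normal_interval(c, vc, each)
    if lo_c <= 0:
        # The COUNT interval reaches zero, so the ratio can grow without bound.
        lo = -math.inf if lo_t < 0 else lo_t / hi_c
        hi = math.inf if hi_t > 0 else hi_t / hi_c
        return lo, hi
    # Pick the denominator that pushes each bound furthest out.
    lo = lo_t / hi_c if lo_t >= 0 else lo_t / lo_c
    hi = hi_t / lo_c if hi_t >= 0 else hi_t / hi_c
    return lo, hi

print(z_score(0.95), z_score(0.975))  # the two constants used in the SQL
print(avg_interval(t=1000, vt=100, c=100, vc=25))
```

When both lower bounds are non-negative, this reduces to the simple form $\big(\frac{t - e_t}{c + e_c}, \frac{t + e_t}{c - e_c}\big)$, where $e_t$ and $e_c$ are the 97.5% margins of SUM and COUNT.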

In SQL, the estimators and confidence intervals look like this:

WITH sum(x * _sample_interval) AS t,

sum(x * x * _sample_interval * (_sample_interval - 1)) AS vt,

sum(_sample_interval) AS c,

sum(_sample_interval * (_sample_interval - 1)) AS vc,

-- ClickHouse does not expose the erf鈦宦?function, so we precompute some magic numbers,

-- (only for 95% confidence, will be different otherwise):

-- 1.959963984540054 = 桅鈦宦?(1+0.950)/2) = 鈭? * erf鈦宦?0.950)

-- 2.241402727604945 = 桅鈦宦?(1+0.975)/2) = 鈭? * erf鈦宦?0.975)

1.959963984540054 * sqrt(vt) AS err950_t,

1.959963984540054 * sqrt(vc) AS err950_c,

2.241402727604945 * sqrt(vt) AS err975_t,

2.241402727604945 * sqrt(vc) AS err975_c

SELECT t - err950_t AS lo_total,

t AS est_total,

t + err950_t AS hi_total,

c - err950_c AS lo_count,

c AS est_count,

c + err950_c AS hi_count,

(t - err975_t) / (c + err975_c) AS lo_average,

t / c AS est_average,

(t + err975_t) / (c - err975_c) AS hi_average

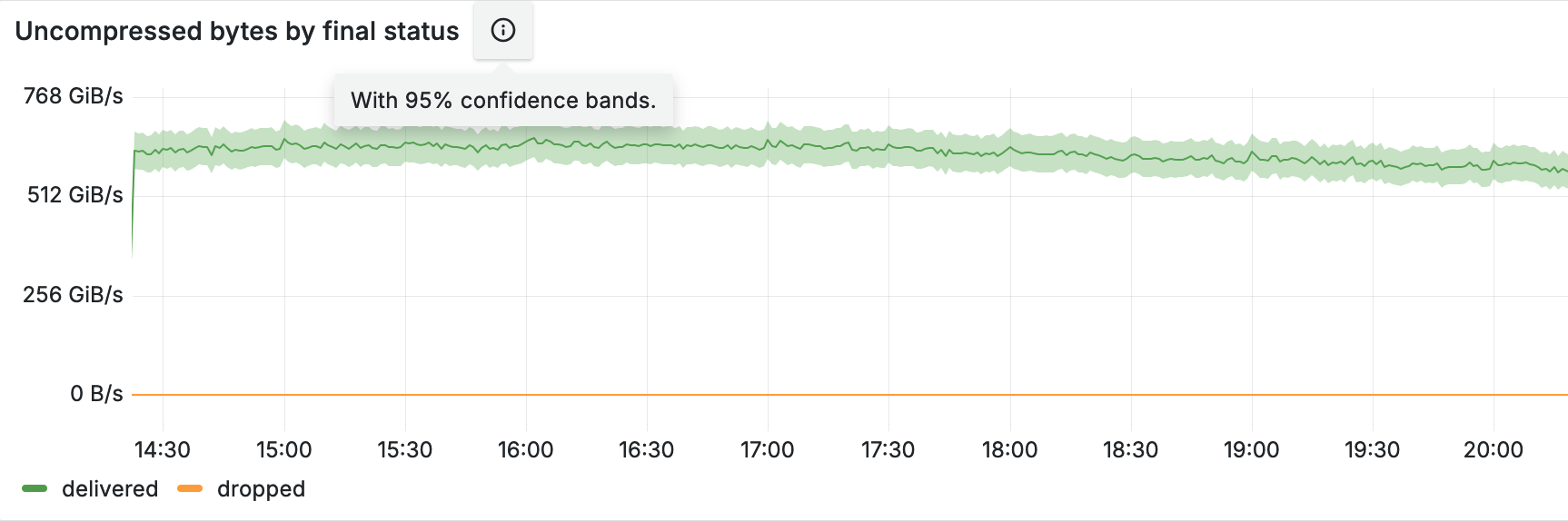

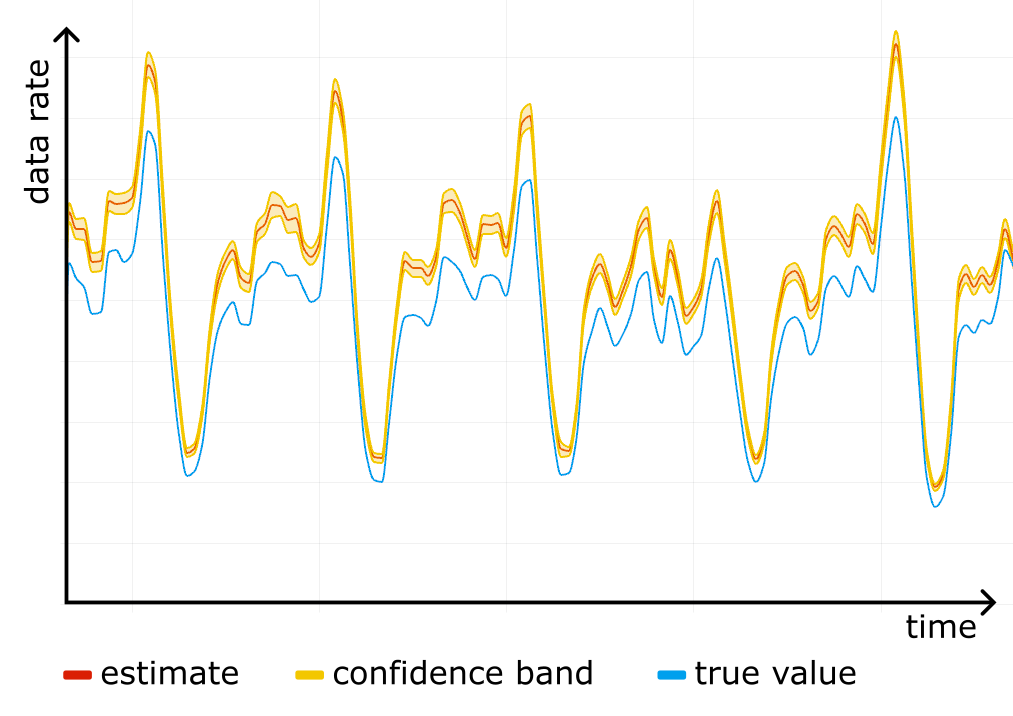

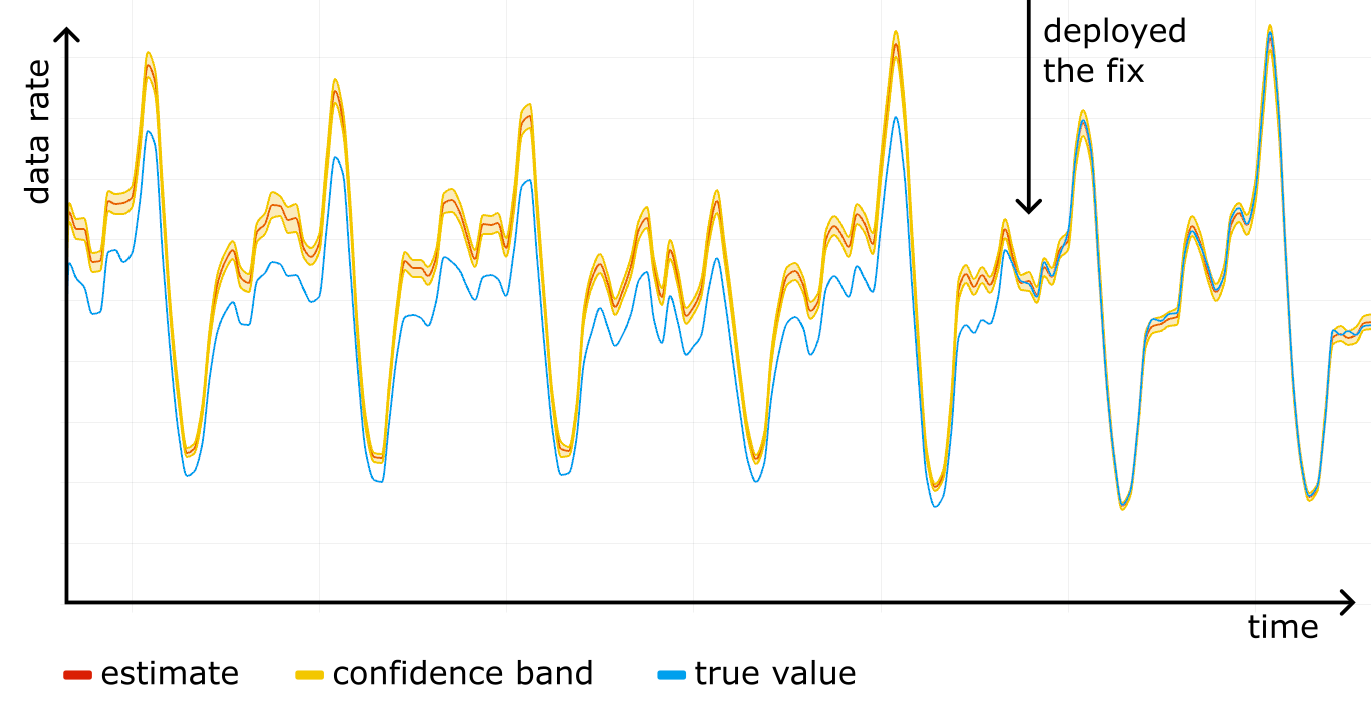

FROM ...Construct a confidence interval for each timeslot on the timeseries, and you get a confidence band, clearly showing the accuracy of the analytics. The figure below shows an example of such a band in shading around the line.
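A confidence band is simply the per-slot interval repeated along the time axis. A minimal sketch for COUNT, assuming events arrive as hypothetical (unix timestamp, sample interval) pairs and one-minute slots; the constant is the same 95% quantile as in the SQL above.

```python
import math
from collections import defaultdict

Z95 = 1.959963984540054  # Phi^{-1}(0.975), same constant as in the SQL

def count_band(events, slot_seconds=60):
    """Group sampled events by time slot and return, per slot, the
    (lower, estimate, upper) 95% interval for COUNT."""
    weights = defaultdict(list)
    for ts, sample_interval in events:
        weights[ts // slot_seconds * slot_seconds].append(sample_interval)
    band = {}
    for slot, ws in sorted(weights.items()):
        c = sum(ws)
        err = Z95 * math.sqrt(sum(w * (w - 1) for w in ws))
        band[slot] = (c - err, c, c + err)
    return band

# Hypothetical events: (unix_timestamp, _sample_interval).
events = [(0, 1), (30, 10), (65, 10), (70, 10), (119, 1)]
band = count_band(events)
for slot, (lo, est, hi) in band.items():
    print(slot, round(lo, 1), est, round(hi, 1))
```

With so few sampled events per slot, the lower bounds come out negative, which is another reminder that the normal approximation needs reasonably large samples.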

We started using confidence bands on our internal dashboards, and after a while noticed something scary: a systematic error! For one particular website the "total bytes served" estimate was higher than the true control value obtained from rollups, and the confidence bands were way off. See the figure below, where the true value (blue line) is outside the yellow confidence band at all times.

We checked the stored data for corruption: it was fine. We checked the math in the queries: it was fine. It was only after reading through the source code of all the systems responsible for sampling that we found a candidate for the root cause.

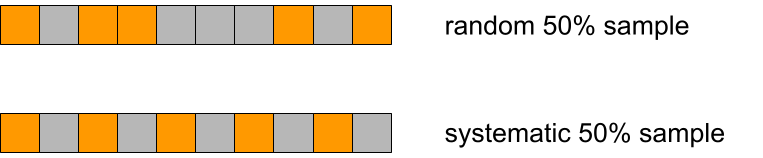

We used independent random sampling everywhere, basically "tossing a coin" for each event, but in Logreceiver sampling was done differently. Instead of sampling randomly, it performed systematic sampling, picking events at equal intervals starting from the first one in the batch.

Why would that be a problem?

There are two reasons. The first is that we can no longer claim $\pi_{ij} = \pi_i \pi_j$, so the simplified variance estimator stops working and the confidence intervals cannot be trusted. Even worse, the estimator for the total becomes biased. To understand exactly why, we wrote a short reproduction in Python:

import itertools

def take_every(src, period):
    # Systematic sampling: keep every `period`-th item, starting with the first.
    for i, x in enumerate(src):
        if i % period == 0:
            yield x

pattern = [10, 1, 1, 1, 1, 1]
sample_interval = 10  # bad if it has common factors with len(pattern)
true_mean = sum(pattern) / len(pattern)
orig = itertools.cycle(pattern)
sample_size = 10000
sample = itertools.islice(take_every(orig, sample_interval), sample_size)
sample_mean = sum(sample) / sample_size
print(f"{true_mean=} {sample_mean=}")

After playing with different values for pattern and sample_interval in the code above, we realized where the bias was coming from.
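The comment about common factors can be made concrete. With systematic sampling over a repeating pattern of length $m$ and interval $k$, the only reachable positions are the multiples of $\gcd(k, m)$. The sketch below is a hypothetical extension of the repro above that compares an interval sharing a factor with the pattern length against a coprime one.

```python
import itertools
import math

def systematic_mean(pattern, interval, n):
    """Mean of the first n events kept by taking every `interval`-th event,
    starting from the first, from an endlessly repeating `pattern`."""
    picked = itertools.islice(itertools.cycle(pattern), 0, None, interval)
    return sum(itertools.islice(picked, n)) / n

pattern = [10, 1, 1, 1, 1, 1]
true_mean = sum(pattern) / len(pattern)  # 2.5
for interval in (10, 7):
    # Positions within the pattern that systematic sampling can ever reach.
    reachable = sorted({(k * interval) % len(pattern) for k in range(len(pattern))})
    print(interval, math.gcd(interval, len(pattern)), reachable,
          systematic_mean(pattern, interval, 6000))
```

With an interval of 10 (gcd 2 with the pattern length 6), only positions 0, 2, and 4 are ever picked, so the large first value is over-represented and the mean comes out as 4.0 instead of 2.5. With a coprime interval of 7, every position is reached equally often and the mean is exact. Real traffic is not strictly periodic, but the same mechanism applies whenever some positions are systematically favored.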

Imagine a person opening a huge generated HTML page with many small or cached resources, such as icons. The first response will be big, immediately followed by a burst of small responses. If the website is not visited much, these responses tend to end up together at the start of a batch in Logfwdr. Logreceiver does not split batches; it only concatenates them. So the first response remains first, always gets picked, and skews the estimate upward.

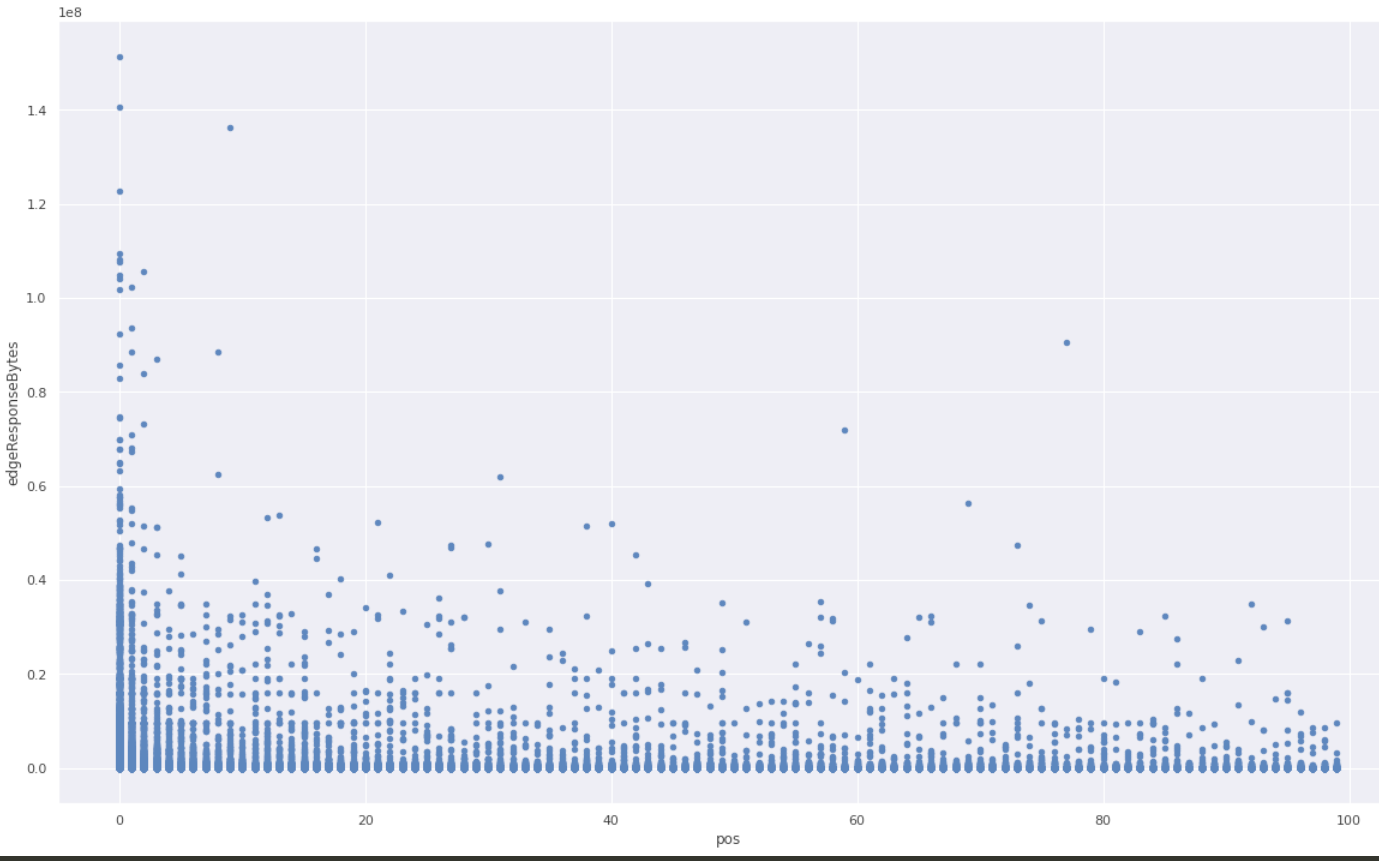

We checked the hypothesis against the raw unsampled data that we happened to have, because that particular website was also using one of the Logs products. We took all events in a given time range and grouped them by cutting at gaps of at least one minute. Within each group, we ranked all events by time and plotted the variable of interest (response size in bytes) against the rank inside the group.

A clear pattern! The first response is much more likely to be larger than average.
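A minimal sketch of that grouping and ranking, assuming events are (timestamp in seconds, response bytes) pairs. The 60-second gap matches the text; the function names and sample events are hypothetical, and this is not the analysis code that was actually run.

```python
def group_by_gaps(events, gap=60.0):
    """Split time-ordered (timestamp, value) events into groups wherever
    consecutive timestamps are at least `gap` seconds apart."""
    groups, current, last_ts = [], [], None
    for ts, value in sorted(events):
        if last_ts is not None and ts - last_ts >= gap:
            groups.append(current)
            current = []
        current.append((ts, value))
        last_ts = ts
    if current:
        groups.append(current)
    return groups

def rank_points(groups):
    """(rank inside group, value) pairs for the scatter plot; rank 0 is first."""
    return [(rank, value) for g in groups for rank, (_, value) in enumerate(g)]

# Hypothetical events: (timestamp_seconds, response_bytes).
events = [(0.0, 50_000), (0.2, 900), (0.3, 700), (120.0, 48_000), (120.1, 1_100)]
groups = group_by_gaps(events)
print(len(groups))
print(rank_points(groups))
```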

We fixed the issue by making Logreceiver shuffle the data before sampling. As we rolled out the fix, the estimate and the true value converged.
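A toy model of the bug and the fix, assuming a hypothetical batch where one large page response is followed by nine small ones and the sample interval is 10. It only checks the bias of the estimated total; it says nothing about whether the simplified variance estimator applies to shuffled systematic samples. The helper names are illustrative, not Logreceiver's code.

```python
import random

def systematic(batch, interval):
    """Pick every `interval`-th event starting from the first (the old behavior)."""
    return batch[::interval]

def shuffled_systematic(batch, interval, rng):
    """Shuffle first, then pick every `interval`-th event (the fix)."""
    batch = list(batch)
    rng.shuffle(batch)
    return batch[::interval]

def estimated_total(sample, interval):
    # HT total with a constant weight equal to the sample interval.
    return sum(sample) * interval

rng = random.Random(7)
batch = [100] + [1] * 9  # hypothetical: big first response, then small ones
true_total = sum(batch)  # 109
trials = 10_000
biased = sum(estimated_total(systematic(batch, 10), 10) for _ in range(trials)) / trials
fixed = sum(estimated_total(shuffled_systematic(batch, 10, rng), 10)
            for _ in range(trials)) / trials
print(true_total, biased, round(fixed, 1))
```

Without shuffling, the big response is picked every time and the estimate is 1,000 instead of 109; after shuffling, each event has the same 1-in-10 chance of being picked and the average estimate lands close to the true total.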

Now, after battle-testing it for a while, we are confident that the HT estimator is implemented properly and that we are using the correct sampling process.

We already power most of our analytics datasets with sampled data. For example, the Workers Analytics Engine exposes the sample interval in SQL, allowing our customers to build their own dashboards with confidence bands. In the GraphQL API, all of the data nodes that have "Adaptive" in their name are based on sampled data, and the sample interval is exposed as a field there as well, though it is not possible to build confidence intervals from that alone. We are working on exposing confidence intervals in the GraphQL API, and as an experiment have added them to the count and edgeResponseBytes (sum) fields on the httpRequestsAdaptiveGroups nodes. This is available under confidence(level: X).

Here is a sample GraphQL query:

query HTTPRequestsWithConfidence(
  $accountTag: string
  $zoneTag: string
  $datetimeStart: string
  $datetimeEnd: string
) {
  viewer {
    zones(filter: { zoneTag: $zoneTag }) {
      httpRequestsAdaptiveGroups(
        filter: {
          datetime_geq: $datetimeStart
          datetime_leq: $datetimeEnd
        }
        limit: 100
      ) {
        confidence(level: 0.95) {
          level
          count {
            estimate
            lower
            upper
            sampleSize
          }
          sum {
            edgeResponseBytes {
              estimate
              lower
              upper
              sampleSize
            }
          }
        }
      }
    }
  }
}

The query above asks for the estimates and the 95% confidence intervals for SUM(edgeResponseBytes) and COUNT. The results also include the sample size, which is good to know: we rely on the central limit theorem to build the confidence intervals, so they do not work well for small samples.

Here is the response from this query:

{
  "data": {
    "viewer": {
      "zones": [
        {
          "httpRequestsAdaptiveGroups": [
            {
              "confidence": {
                "level": 0.95,
                "count": {
                  "estimate": 96947,
                  "lower": "96874.24",
                  "upper": "97019.76",
                  "sampleSize": 96294
                },
                "sum": {
                  "edgeResponseBytes": {
                    "estimate": 495797559,
                    "lower": "495262898.54",
                    "upper": "496332219.46",
                    "sampleSize": 96294
                  }
                }
              }
            }
          ]
        }
      ]
    }
  },
  "errors": null
}

The response shows that the estimated count is 96947, and that we are 95% confident the true count lies between 96874.24 and 97019.76. The estimate and range for the sum of response bytes are provided in the same way.

The estimates are based on a sample size of 96294 rows, which is plenty for calculating good confidence intervals.
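As a quick sanity check, the interval widths in the response above can be turned into relative errors. This sketch parses a trimmed copy of that response; note that "lower" and "upper" come back as strings, so they are converted to floats first. The helper name is hypothetical.

```python
import json

# The response shown above, trimmed to the confidence block.
response = json.loads("""
{"data": {"viewer": {"zones": [{"httpRequestsAdaptiveGroups": [{"confidence": {
  "level": 0.95,
  "count": {"estimate": 96947, "lower": "96874.24", "upper": "97019.76", "sampleSize": 96294},
  "sum": {"edgeResponseBytes": {"estimate": 495797559, "lower": "495262898.54",
                                "upper": "496332219.46", "sampleSize": 96294}}
}}]}]}}, "errors": null}
""")

conf = response["data"]["viewer"]["zones"][0]["httpRequestsAdaptiveGroups"][0]["confidence"]

def relative_half_width(node):
    """Half the interval width as a fraction of the estimate."""
    lower, upper = float(node["lower"]), float(node["upper"])
    return (upper - lower) / 2 / node["estimate"]

rel_count = relative_half_width(conf["count"])
rel_bytes = relative_half_width(conf["sum"]["edgeResponseBytes"])
print(f"count: ±{rel_count:.4%}  bytes: ±{rel_bytes:.4%}")
```

Both intervals are centered on their estimates, and both are narrower than about ±0.11% of the estimate, consistent with a sample of roughly 96,000 rows.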

We have discussed what kept our data pipeline scalable and resilient despite doubling in size every 1.5 years, how the math works, and how easy it is to mess up. We are constantly working on better ways to keep the data pipeline, and the products based on it, useful to our customers. If you are interested in doing things like that and want to help us build a better Internet, check out our careers page.

With September's announcement of Hyperdrive's ability to send database traffic from Workers over Cloudflare Tunnels, we wanted to dive into the details of what it took to make this happen.

Accessing your data from anywhere in Region Earth can be hard. Traditional databases are powerful, familiar, and feature-rich, but your users can be thousands of miles away from your database. This can cause slower connection startup times, slower queries, and connection exhaustion as everything takes longer to accomplish.

Cloudflare Workers is an incredibly lightweight runtime, which enables our customers to deploy their applications globally by default and renders the cold start problem almost irrelevant. The trade-off for these light, ephemeral execution contexts is the lack of persistence for things like database connections. Database connections are also notoriously expensive to spin up, with many round trips required between client and server before any query or result bytes can be exchanged.

Hyperdrive is designed to make the centralized databases you already have feel like they're global while keeping connections to those databases hot. We use our global network to get faster routes to your database, keep connection pools primed, and cache your most frequently run queries as close to users as possible.

For something as sensitive as your database, exposing access to the public Internet can be uncomfortable. It is common to instead host your database on a private network, and allowlist known-safe IP addresses or configure GRE tunnels to permit traffic to it. This is complex, toilsome, and error-prone.

On Cloudflare's Developer Platform, we strive for simplicity and ease-of-use. We cannot expect all of our customers to be experts in configuring networking solutions, and so we went in search of a simpler solution. Being your own customer is rarely a bad choice, and it so happens that Cloudflare offers an excellent option for this scenario: Tunnels.

Cloudflare Tunnel is a Zero Trust product that creates a secure connection between your private network and Cloudflare. Exposing services within your private network can be as simple as running a cloudflared binary, or deploying a Docker container running the cloudflared image we distribute.

Integrating with Tunnels to support sending Postgres traffic directly through them was a new challenge for us. Most of the time, when we use Tunnels internally (more on that later!), we rely on the excellent job cloudflared does of handling all of the mechanics, and we just treat Tunnels as pipes. That wouldn't work for Hyperdrive, though, so we had to dig into how Tunnels actually ingress traffic to build a solution.

Hyperdrive handles Postgres traffic using an entirely custom implementation of the Postgres message protocol. This is necessary because we sometimes have to alter the specific type or content of messages sent from client to server, or vice versa. Handling individual bytes gives us the flexibility to implement whatever logic any new feature might need.
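For readers unfamiliar with the wire format: apart from a few untagged startup-phase messages such as the StartupMessage, every Postgres protocol message is a 1-byte type tag, then a big-endian Int32 length that counts itself and the payload (but not the tag), then the payload. The sketch below shows that framing in Python rather than Rust; `encode_message` and `split_messages` are hypothetical names, and this is an illustration of the format, not Hyperdrive's implementation.

```python
import struct

def encode_message(tag: bytes, payload: bytes) -> bytes:
    """Frame a regular Postgres protocol message: a 1-byte type tag, then an
    Int32 length that counts itself and the payload but not the tag."""
    return tag + struct.pack("!i", 4 + len(payload)) + payload

def split_messages(buf: bytes):
    """Split a buffer into (tag, payload) pairs and also return the
    unconsumed tail, since a network read may end mid-message."""
    messages, offset = [], 0
    while len(buf) - offset >= 5:
        tag = buf[offset:offset + 1]
        (length,) = struct.unpack_from("!i", buf, offset + 1)
        end = offset + 1 + length
        if end > len(buf):
            break  # incomplete message: wait for more bytes
        messages.append((tag, buf[offset + 5:end]))
        offset = end
    return messages, buf[offset:]

# A simple Query ('Q') message carries a NUL-terminated SQL string.
wire = encode_message(b"Q", b"SELECT 1\x00") + encode_message(b"Q", b"SELECT 2\x00")[:7]
msgs, rest = split_messages(wire)
print(msgs, rest)
```

Because each message is just a tag, a length, and bytes, a proxy can inspect the tag and rewrite or replace individual messages before re-encoding them.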

An additional, perhaps less obvious, benefit of handling Postgres message traffic as just bytes is that we are not bound to the transport layer choices of some ORM or library. One of the nuances of running services in Cloudflare is that we may want to egress traffic over different services or protocols, for a variety of different reasons. In this case, being able to egress traffic via a Tunnel would be pretty challenging if we were stuck with whatever raw TCP socket a library had established for us.

The way we accomplish this relies on a mainstay of Rust: traits (which are how Rust lets developers apply logic across generic functions and types). In the Rust ecosystem, there are two traits that define the behavior Hyperdrive wants out of its transport layers: AsyncRead and AsyncWrite. There are a couple of others we also need, but we're going to focus on just these two. These traits enable us to code our entire custom handler against a generic stream of data, without the handler needing to know anything about the underlying protocol used to implement the stream. So, we can pass around a WebSocket connection as a generic I/O stream, wherever it might be needed.
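Python has no traits, but `typing.Protocol` offers a rough structural analogue of the idea. In this hypothetical sketch, `send_all` depends only on a `write`/`drain` interface (the same shape as `asyncio.StreamWriter`), so a TCP stream, a TLS stream, or an in-memory fake can be passed in without changing the handler. It illustrates the concept only; Hyperdrive itself is written in Rust against AsyncRead and AsyncWrite.

```python
import asyncio
from typing import Protocol

class ByteSink(Protocol):
    """Structural type playing a role similar to AsyncWrite: anything with
    these methods can be used, whatever transport sits underneath."""
    def write(self, data: bytes) -> None: ...
    async def drain(self) -> None: ...

async def send_all(sink: ByteSink, chunks: list[bytes]) -> None:
    # The handler only sees the ByteSink interface, not TCP, TLS, or WebSocket.
    for chunk in chunks:
        sink.write(chunk)
    await sink.drain()

class MemorySink:
    """In-memory stand-in for a real stream, handy for tests."""
    def __init__(self) -> None:
        self.buffer = bytearray()

    def write(self, data: bytes) -> None:
        self.buffer.extend(data)

    async def drain(self) -> None:
        pass

sink = MemorySink()
asyncio.run(send_all(sink, [b"hello ", b"world"]))
print(bytes(sink.buffer))
```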

As an example, the code to send a Postgres startup message across a generic stream (such as a TCP stream) might look like this:

/// Send a startup message to a Postgres server, in the role of a PG client.
/// https://www.postgresql.org/docs/current/protocol-message-formats.html#PROTOCOL-MESSAGE-FORMATS-STARTUPMESSAGE
pub async fn send_startup<S>(stream: &mut S, user_name: &str, db_name: &str, app_name: &str) -> Result<(), ConnectionError>
where
    S: AsyncWrite + Unpin,
{
    // Protocol version 3.0, encoded as (3 << 16) | 0.
    let protocol_number = 196608 as i32;
    let user_str = &b"user\0"[..];
    let user_bytes = user_name.as_bytes();
    let db_str = &b"database\0"[..];
    let db_bytes = db_name.as_bytes();
    let app_str = &b"application_name\0"[..];